- Anthropic CEO Dario Amodei estimates a 25% chance that AI will lead to disaster

- He still believes that AI is worth investing in and that the benefits outweigh the risk

- His comments feed into growing public and political conversations about AI risks and regulation

One-in-four odds may sound pretty good in some circumstances. For example, they are better odds than most casino games offer. They are also apparently long enough for Anthropic CEO Dario Amodei to seem carefree after putting the chance of artificial intelligence leading to a society-ending disaster at 25%.

“I think there’s a 25% chance of things going really, really bad,” Amodei said blithely at the Axios AI+ DC Summit when asked about his p(doom), or probability of doom, regarding AI. But he is more focused on the “75% chance of things going really, really well.”

By “really, really bad,” he doesn’t mean your phone autocorrecting “duck” to something worse. He means scenarios big enough to threaten societal systems: existential risks, badly misused AI, and knock-on effects that could be disastrous.

.@Jimvandehei asks @anthropic CEO @darioamodei what probability he would give AI ending in disaster: “I think there’s a 25% chance that things are going really, really bad.” #Axiosaisummit pic.twitter.com/9d7eqldync (September 17, 2025)

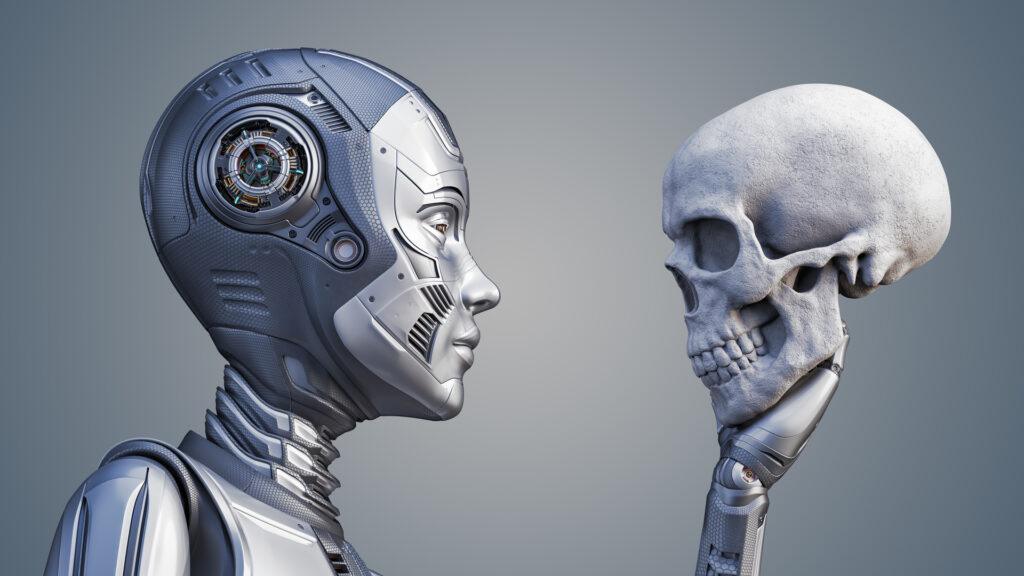

For an industry that is often wrapped in utopian promises or reduced to sci-fi fears, Amodei’s openness about both the odds of an apocalypse and his reasons for still pushing ahead with the technology stands out.

Amodei is not alone in expressing unease, but he is in a rare position. As CEO of the company behind Claude, he is not a passive observer. He is shaping the trajectory of this technology in real time. His team builds the very systems whose potential and danger he is weighing.

If someone told you there was a 1-in-4 chance that your car would explode every time you turned the key, you might start walking more. Amodei, apparently, would become a mechanic and check the car over before getting in.

AI Downfall

This is not the only warning Amodei has issued about AI. He has previously warned that AI could eliminate half of all entry-level jobs and sounded the alarm about US exports of advanced chips to China. That’s what makes Amodei’s framing so useful: it recognizes the risk and quantifies the uncertainty, but leaves room for agency.

On the flip side, Amodei’s “75% chance of things going really, really well” is not optimism for its own sake. It reflects a belief that AI could provide enormous benefits to everyone. It could lead to improved medicine, more efficient production, and maybe even strategies for tackling existential crises such as climate change (although a key part of solving that one may be the energy required to run AI models).

However, the 25% risk means those benefits have to be built carefully, with safety measures and regulation in mind. Because if the future is 75% brilliant and 25% ruinous, the question is: What should we do to keep the weight on the right side?