- Researchers have tricked ChatGPT into solving CAPTCHAs in Agent Mode

- The discovery could lead to a rash of fake posts appearing on the web

- The days of CAPTCHAs as a bot management system could be numbered

In a move that could change the way the internet works going forward, researchers have shown that it is possible to trick ChatGPT in Agent Mode into solving CAPTCHA tests.

CAPTCHA stands for “Completely Automated Public Turing test to tell Computers and Humans Apart” and is a way to control bot activity online and prevent bots from posting on the sites we use every day.

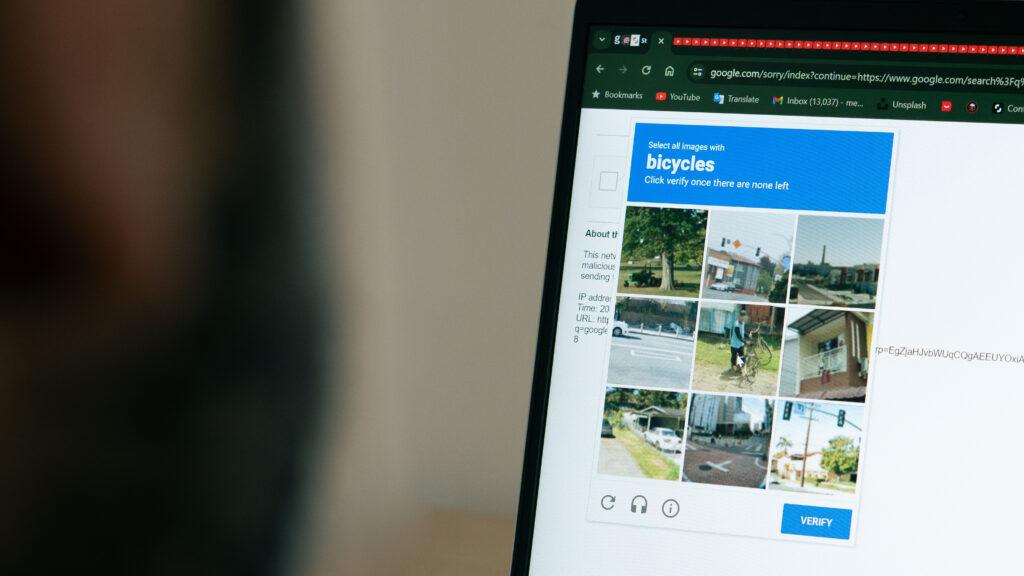

Most people who use the internet are familiar with CAPTCHAs and have a love/hate relationship with them. I know I do. They usually involve typing out a series of barely legible letters or numbers shown in an image (my least favorite type), rearranging tiles in a photo grid to complete an image, or identifying objects.

On the one hand, sites use them to make sure all their users are human, which stops spam posts from bots; on the other, they can be a real pain because they are so tedious to complete.

Reframing the problem

CAPTCHAs have never been foolproof, but so far they have done a pretty good job of keeping bots out of our message boards and comment sections. Until now, that is. Researchers at SPLX have worked out how to trick ChatGPT into passing a CAPTCHA test using a technique called “prompt injection”.

I’m not talking about ChatGPT simply looking at a picture of a CAPTCHA and telling you what the answer should be (it will do that without a problem). I mean ChatGPT in Agent Mode actually using a website, passing its CAPTCHA test and then using the site as intended, as though it were a human, which is something it should not be able to do.

Running ChatGPT in Agent Mode is not like using regular ChatGPT. In Agent Mode you give ChatGPT a task to complete, and it goes off and works on that task in the background, leaving you free to do other things. ChatGPT in Agent Mode can use websites as a human would, but it still should not be able to pass a CAPTCHA test, since these tests are designed to detect bots and stop them from using sites, which would violate those sites’ Terms of Service. It now seems that by tricking ChatGPT into believing the tests are fake, it can be made to pass them anyway.

Serious implications

The researchers did this by reframing the CAPTCHA as a “fake” test for ChatGPT and setting up a conversation in which ChatGPT had already agreed to pass the test. ChatGPT Agent inherited the context from earlier in the conversation and did not spot the usual red flags.

This multi-turn prompt injection process is well known to hackers and shows how susceptible LLMs are to it. The researchers found that image-based CAPTCHA tests were harder for ChatGPT to solve, but it managed those as well.

The consequences are quite serious. ChatGPT is so widely accessible that, in the wrong hands, it could let spammers and bad actors flood comment sections with fake posts and even use sites reserved for humans.

We have asked OpenAI to comment on this story and will update it if we get a response.