- Nvidia’s acquisition brings Enfabrica engineers directly into its AI ecosystem

- Emfasys chassis pools up to 18TB of memory for GPU clusters

- Elastic memory fabric frees up HBM for time-sensitive AI tasks

Nvidia’s decision to spend more than $900 million on Enfabrica was something of a surprise, especially as it came alongside a separate $5 billion investment in Intel.

According to ServeTheHome, “Enfabrica has the coolest technology,” probably because of its unique approach to solving one of AI’s biggest scaling problems: tying tens of thousands of computing chips together so they can operate as a single system without wasting resources.

This deal suggests that Nvidia believes solving this scaling problem is as critical as securing chip production capacity.

A unique approach to data fabrics

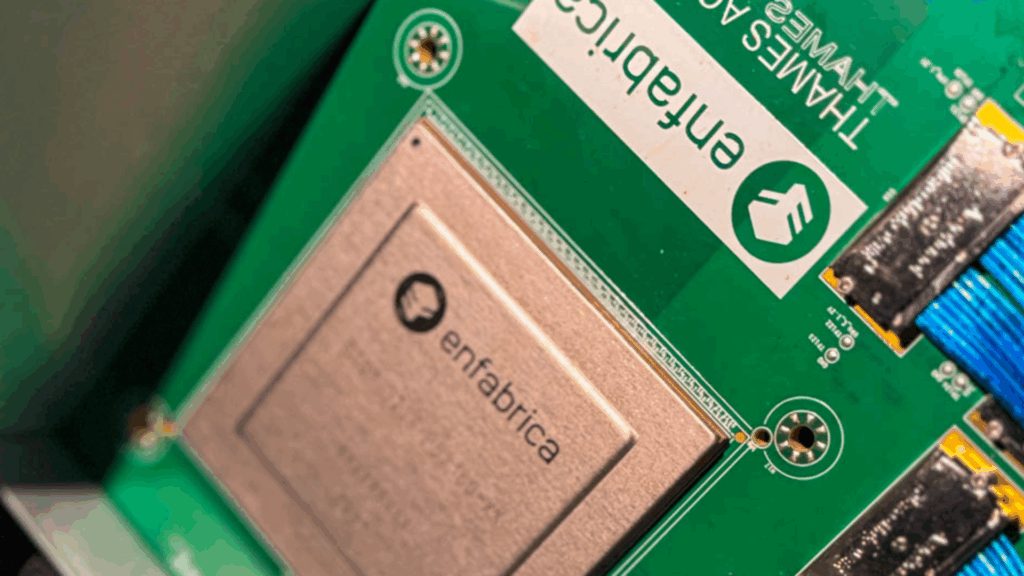

Enfabrica’s Accelerated Compute Fabric Switch (ACF-S) architecture was built with PCIe lanes on one side and high-speed networking on the other.

Its ACF-S “Millennium” device is a 3.2Tbps network chip with 128 PCIe lanes that can connect GPUs, NICs, and other devices while maintaining flexibility.

The company’s design allows data to move between ports or across the chip with minimal latency, bridging Ethernet and PCIe/CXL technologies.

For AI clusters, this means higher utilization and fewer idle GPUs waiting for data, which translates to a better return on investment for expensive hardware.

Another piece of Enfabrica’s offering is its Emfasys chassis, which uses CXL controllers to pool up to 18TB of memory for GPU clusters.

This elastic memory fabric allows GPUs to offload data from their limited HBM to shared memory across the network.

By freeing HBM for time-critical tasks, operators can reduce token processing costs.

Enfabrica said the reductions could reach up to 50% and allow inference workloads to scale without overprovisioning local memory capacity.

For large language models and other AI workloads, such capabilities can be important.
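To make the offloading idea concrete, here is a minimal Python sketch of a two-tier store: a small fast tier standing in for HBM and a larger pooled tier standing in for network-attached memory. It is a toy model and not Enfabrica’s implementation. The class name `TieredMemory`, the least-recently-used eviction policy, and counting capacity in entries rather than bytes are all assumptions made for illustration.

```python
from collections import OrderedDict


class TieredMemory:
    """Toy two-tier store (hypothetical): a small fast tier and a large pooled tier."""

    def __init__(self, hbm_capacity: int):
        self.hbm_capacity = hbm_capacity  # max entries in the fast tier (assumption)
        self.hbm = OrderedDict()          # least recently used entry sits first
        self.pool = {}                    # stands in for pooled, network-attached memory

    def put(self, key, value):
        """Place an entry in the fast tier, spilling cold entries to the pool."""
        self.pool.pop(key, None)          # make sure the key lives in one tier only
        self.hbm[key] = value
        self.hbm.move_to_end(key)         # mark as most recently used
        self._evict()

    def get(self, key):
        """Read an entry, promoting it back to the fast tier if it was offloaded."""
        if key in self.hbm:
            self.hbm.move_to_end(key)
            return self.hbm[key]
        value = self.pool.pop(key)        # raises KeyError for unknown keys
        self.put(key, value)
        return value

    def _evict(self):
        """Move least recently used entries to the pool until the fast tier fits."""
        while len(self.hbm) > self.hbm_capacity:
            cold_key, cold_value = self.hbm.popitem(last=False)
            self.pool[cold_key] = cold_value


mem = TieredMemory(hbm_capacity=2)
mem.put("a", 1)
mem.put("b", 2)
mem.put("c", 3)   # "a" is the coldest entry and is offloaded to the pool
mem.get("a")      # "a" is promoted again, which pushes "b" out to the pool
```

In this sketch the fast tier only ever holds the most recently touched entries, which is the general point of the approach: scarce HBM stays reserved for the data in active use while colder data waits in the larger shared pool.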

The ACF-S chip also offers high-radix multipath redundancy. Instead of a few massive 800Gbps links, operators can use 32 smaller 100Gbps connections.

If a switch fails, only about 3% of the bandwidth is lost, rather than a large part of the network going offline.

This approach could improve cluster reliability at scale, but it also increases the complexity of network design.
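The roughly 3% figure follows from simple division: one of 32 equal links carries 1/32, or 3.125%, of the aggregate bandwidth. The short Python sketch below works through that arithmetic and compares it with a hypothetical layout of four 800Gbps links carrying the same 3.2Tbps total. The four-link layout and the function name are illustrative assumptions, not figures from Enfabrica.

```python
def bandwidth_lost_fraction(num_links: int, failed_links: int = 1) -> float:
    """Fraction of aggregate bandwidth lost when equal-speed links fail."""
    if num_links <= 0 or not 0 <= failed_links <= num_links:
        raise ValueError("failed_links must be between 0 and num_links")
    return failed_links / num_links


# 32 x 100Gbps links, as described in the article (3.2Tbps in total).
many_small = bandwidth_lost_fraction(32)

# 4 x 800Gbps links: an assumed comparison layout with the same 3.2Tbps total.
few_large = bandwidth_lost_fraction(4)

print(f"32 x 100Gbps, one link down: {many_small:.3%} lost")
print(f" 4 x 800Gbps, one link down: {few_large:.3%} lost")
```

Under these assumptions, a single failure costs 3.125% of capacity in the 32-link layout and 25% in the four-link one.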

The deal brings Enfabrica’s engineering team, including CEO Rochan Sankar, directly into Nvidia, rather than leaving such innovation to rivals like AMD or Broadcom.

While Nvidia’s Intel stake secures production capacity, this acquisition directly addresses scaling limits in AI data centers.