- Nvidia integrates Samsung Foundry to extend NVLink Fusion for custom AI silicon

- NVLink Fusion allows CPUs, GPUs and accelerators to communicate seamlessly

- Intel and Fujitsu can now build CPUs that connect directly to Nvidia GPUs

Nvidia is deepening its efforts to make itself indispensable in the AI landscape by expanding its NVLink Fusion ecosystem.

Following a recent collaboration with Intel, which enables x86 CPUs to connect directly to Nvidia platforms, the company has now brought in Samsung Foundry to help design and manufacture custom CPUs and XPUs.

The move, announced during the 2025 Open Compute Project (OCP) Global Summit in San Jose, shows Nvidia’s ambition to expand its control across the entire hardware stack of AI computing.

Integration of new players into NVLink Fusion

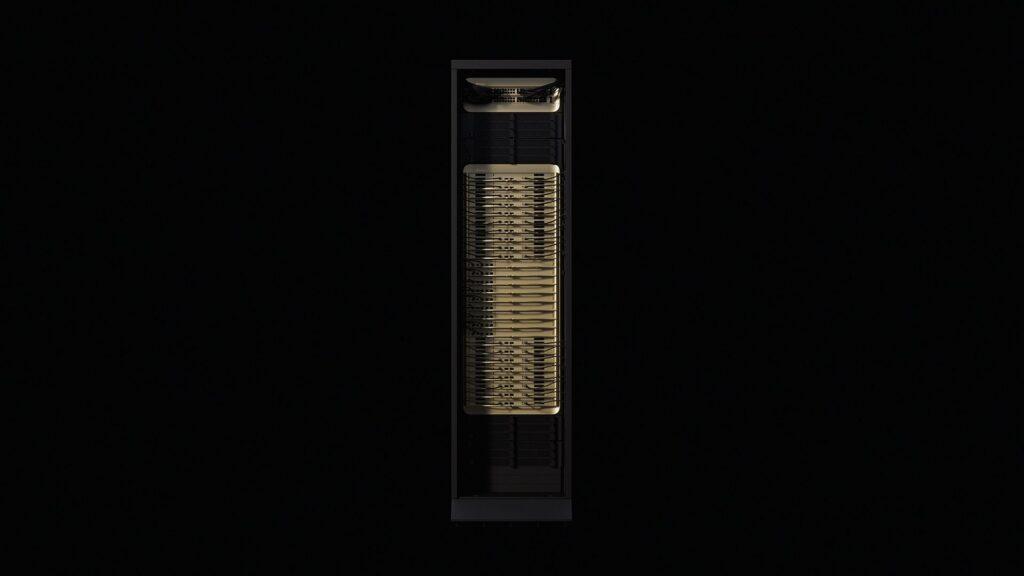

Ian Buck, Nvidia’s Vice President of HPC and Hyperscale, explained that NVLink Fusion is an IP and chiplet solution designed to seamlessly integrate CPUs, GPUs and accelerators into the MGX and OCP infrastructure.

It enables direct high-speed communication between processors within rack-scale systems, aiming to remove the traditional performance bottlenecks between computing components.

During the summit, Nvidia revealed several ecosystem partners, including Intel and Fujitsu, both of which are now able to build CPUs that communicate directly with Nvidia GPUs over NVLink Fusion.

Samsung Foundry joins this list, offering full design-to-manufacturing expertise for custom silicon, an addition that strengthens Nvidia’s reach in semiconductor manufacturing.

The collaboration between Nvidia and Samsung reflects a growing shift in the AI hardware market.

As AI workloads expand and competition intensifies, Nvidia’s custom CPU and XPU designs aim to ensure its technologies remain central to next-generation data centers.

According to TechPowerUp, Nvidia’s strategy comes with strict limitations.

Custom chips developed under NVLink Fusion must connect to Nvidia products, with Nvidia retaining control over the communication controllers, PHY layers, and NVLink Switch licenses.

This gives Nvidia significant influence in the ecosystem, although it also raises concerns about openness and interoperability.

This tighter integration comes as rivals such as OpenAI, Google, AWS, Meta and Broadcom develop internal chips to reduce reliance on Nvidia hardware.

Nvidia is embedding itself more deeply into AI infrastructure by making its technologies unavoidable rather than optional.

Through NVLink Fusion and the addition of Samsung Foundry to its custom silicon ecosystem, the company is expanding its influence across the entire hardware stack, from chips to data center architectures.

This reflects a wider trend among its competitors. Broadcom is moving deeper into artificial intelligence with custom accelerators for hyperscalers.

OpenAI is also reported to be designing its own internal chips to reduce reliance on Nvidia’s GPUs.

Together, these developments mark a new phase of AI hardware competition, where control of the silicon-to-software pipeline determines who leads the industry.

Nvidia’s partnership with Samsung looks to address this competitive pressure by accelerating the rollout of custom solutions that can be deployed quickly at scale.

By embedding its IP into broader infrastructure design, Nvidia is positioning itself as an essential part of modern AI factories, rather than just a GPU vendor.

Despite Nvidia’s contribution to the OCP open hardware initiative, its NVLink Fusion ecosystem maintains strict boundaries favoring its architecture.

While this can ensure performance benefits and ecosystem consistency, it can also raise concerns about vendor lock-in.
