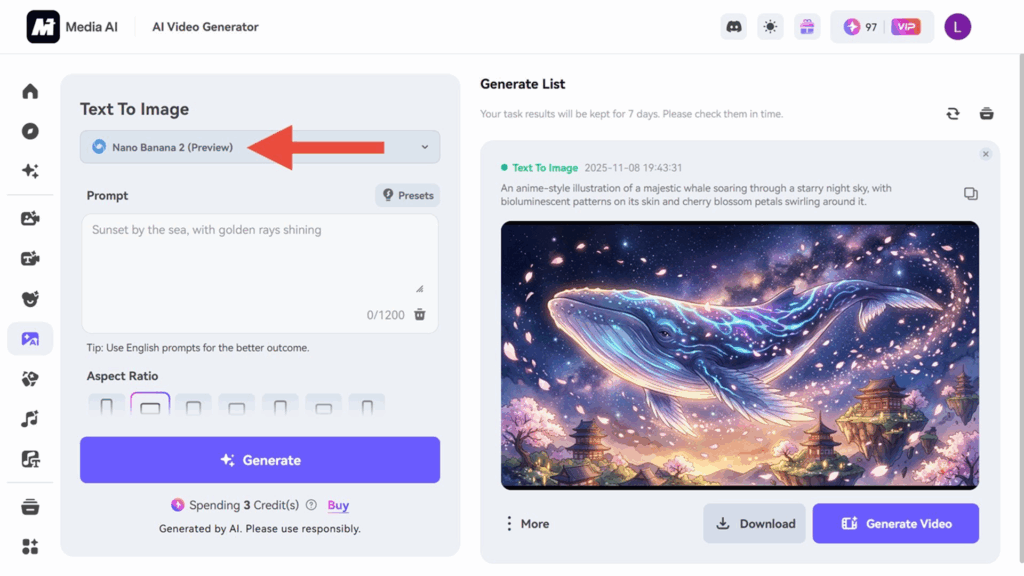

- Google’s Nano Banana 2 AI image generator has appeared in a leaked preview

- The new model promises smarter, self-correcting image generation

- The new model mimics a human workflow in several steps

Google is up for another bite of advanced AI imaging with the Nano Banana 2. The follow-up to the original Nano Banana model will be part of the Gemini app, according to a preview that unexpectedly surfaced and was shared on X.

The Nano Banana 2 appears to offer tighter control over angles and other visual elements, with more precise coloring. There will also be an option to fix text in an image without disturbing the rest of the output.

So the Nano Banana 2 will be more than just a resolution bump. Its biggest shift may be in how it thinks. The leaked previews suggest that the model now uses a multi-step workflow: it plans the image before making it, analyzes the result for errors, fixes them, and repeats the process until the image is ready.
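Google has not published how this loop works, so the following is only a rough Python sketch of the plan, generate, check and fix cycle described in the leak. Every name in it (`Draft`, `self_correcting_generate`, and the `toy_*` stages) is hypothetical and stands in for whatever the real model does internally; no actual Gemini or Nano Banana API is used.

```python
from dataclasses import dataclass, field
from typing import Callable, List


@dataclass
class Draft:
    """Toy stand-in for an image: the plan it was built from plus known flaws."""
    plan: List[str]
    flaws: List[str] = field(default_factory=list)
    revisions: int = 0


def self_correcting_generate(
    prompt: str,
    plan: Callable[[str], List[str]],
    render: Callable[[List[str]], Draft],
    critique: Callable[[Draft], List[str]],
    fix: Callable[[Draft, List[str]], Draft],
    max_rounds: int = 5,
) -> Draft:
    """Plan first, render a draft, then check and fix until clean or out of rounds."""
    steps = plan(prompt)
    draft = render(steps)
    for _ in range(max_rounds):
        problems = critique(draft)
        if not problems:
            break  # nothing left to correct, so the draft is final
        draft = fix(draft, problems)
    return draft


# Hypothetical toy stages, purely for illustration.
def toy_plan(prompt: str) -> List[str]:
    return [f"compose: {prompt}", "add text", "color pass"]


def toy_render(steps: List[str]) -> Draft:
    # Pretend the first draft always comes out with two flaws.
    return Draft(plan=steps, flaws=["garbled text", "wrong angle"])


def toy_critique(draft: Draft) -> List[str]:
    return list(draft.flaws)


def toy_fix(draft: Draft, problems: List[str]) -> Draft:
    # Resolve one flaw per round and count the revision.
    return Draft(plan=draft.plan, flaws=draft.flaws[1:], revisions=draft.revisions + 1)


result = self_correcting_generate(
    "action figure on a desk", toy_plan, toy_render, toy_critique, toy_fix
)
print(result.revisions, result.flaws)  # prints: 2 []
```

The `max_rounds` cap is a design assumption of this sketch: a loop that checks its own work needs some stopping rule so that a flaw the model cannot fix does not keep it revising forever.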

This kind of built-in self-correction is new to Google’s image tools. Behind the quirky fruit nickname, Google seems to treat AI image generation as a real design assistant, one that sketches out rough drafts, catches mistakes, and only gives you the final product after making sure it’s not terrible.

Nano Banana 2 is now in Preview 👀 currently on Media IO pic.twitter.com/VNmQM3PAFP (8 November 2025)

The images shared by those with access to the preview show cleaner lines, sharper angles and fewer of the tell-tale flaws common in AI images.

“Nano Banana Pro” has started appearing in GitHub commits and code references, suggesting that Google is already preparing a more premium-tier version of the model for high-end or high-res tasks.

Appealing AI images

In the wild, the Nano Banana 2, known internally as the GEMPIX 2, has also started appearing in places outside of the main Gemini app. Testers have found traces of the model in experimental tools like Whisk Labs, part of Google’s ongoing effort to weave AI creativity into all its tools. If the past is any indication, this multi-surface rollout will follow the same playbook as the original Banana Drop, with users suddenly finding their photos look better.

The fact that the Nano Banana 2 goes through a self-correction loop before delivering the final image marks a philosophical shift. It’s not just reactive anymore. Google is teaching its model to notice its own mistakes and proactively correct them. It sounds small, but it reflects the human creative process more than previous AI tools have.

Better scene understanding, angle control and text clarity should mean you’re more likely to get an image you actually want. Considering how the Nano Banana went viral for making action figure versions of people that looked like real products, it will be worth watching to see how well the Nano Banana 2 handles similar images in the real world. Judging by some of the images shared and their consistency in sticking to that characterization, you’ll be seeing some really realistic, unreal depictions of people and places very soon.
