- Hyperlink runs exclusively on local hardware, keeping each search private

- The app indexes massive data folders on RTX PCs in minutes

- LLM inference on Hyperlink doubles in speed with Nvidia’s latest optimization

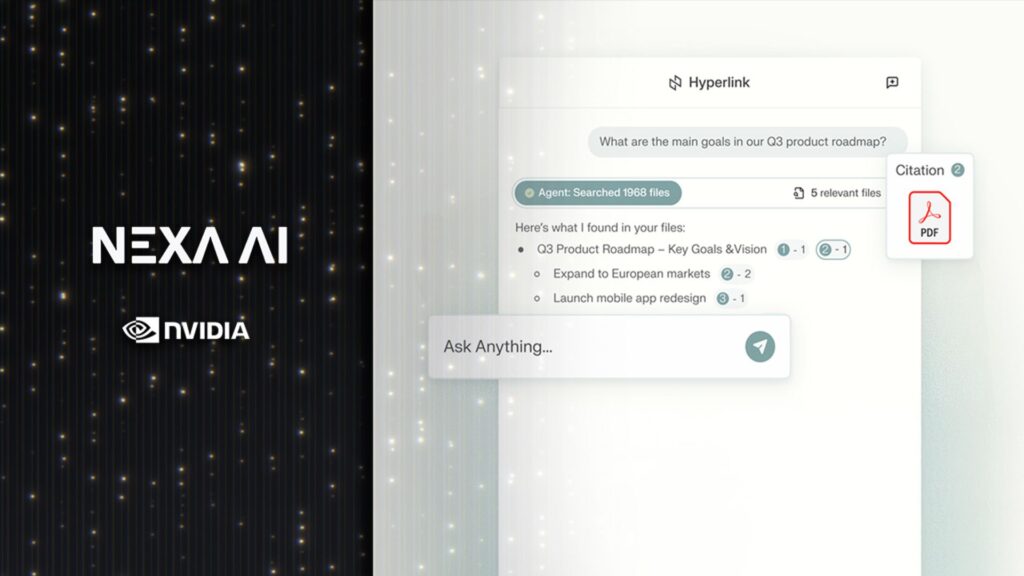

Nexa.ai’s new “Hyperlink” agent introduces an approach to AI search that runs exclusively on local hardware.

Designed for Nvidia RTX AI PCs, the app acts as an on-device assistant that transforms personal data into structured insights.

Nvidia outlined how, instead of sending requests to remote servers, the app processes everything locally, offering both speed and privacy.

Private intelligence at local speed

Hyperlink has been benchmarked on an RTX 5090 system, where it reportedly delivers up to 3x faster indexing and up to 2x faster large language model (LLM) inference than previous builds.

Because these gains are measured against earlier Hyperlink builds rather than rival tools, they mainly show that the app can now scan and organize thousands of files across a computer considerably faster than before.

Hyperlink doesn’t just match search terms; it interprets user intent by applying an LLM’s reasoning capabilities to local files, so it can find relevant material even when filenames are obscure or unrelated to the actual content.

This shift from static keyword search to contextual understanding aligns with the growing integration of generative AI into everyday productivity tools.

The system can also connect related ideas from multiple documents and offer structured answers with clear references.
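Neither Nexa.ai nor Nvidia details Hyperlink’s retrieval internals here, so what follows is only a minimal, generic sketch of the idea: files are indexed by what they contain rather than what they are called, and a query returns the best-matching files with their names attached as references. It assumes a simple bag-of-words cosine similarity in place of the learned embeddings and LLM reasoning a real assistant would use. The file names, contents and helper functions (`build_index`, `search`) are hypothetical.

```python
import math
import re
from collections import Counter

# Hypothetical local files: the names are deliberately uninformative,
# so only the content reveals what each one is about.
FILES = {
    "scan_0042.pdf": "Quarterly revenue grew 12 percent, driven by cloud subscriptions.",
    "IMG_7781.txt": "Whiteboard notes: hiring plan and budget for the design team.",
    "final_v3.pptx": "Slides summarising revenue targets and subscription pricing for next year.",
}


def tokenize(text):
    """Lowercase alphabetic word tokens (digits and punctuation are dropped)."""
    return re.findall(r"[a-z]+", text.lower())


def vectorize(text):
    """Bag-of-words term counts, standing in for a dense embedding vector."""
    return Counter(tokenize(text))


def cosine(a, b):
    """Cosine similarity between two sparse term-count vectors."""
    dot = sum(count * b[term] for term, count in a.items() if term in b)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def build_index(files):
    """Index step: store one vector per file, keyed by its name."""
    return {name: vectorize(text) for name, text in files.items()}


def search(index, query, k=2):
    """Return up to k (file name, score) pairs with a non-zero score, best first."""
    q = vectorize(query)
    scored = sorted(((cosine(q, vec), name) for name, vec in index.items()), reverse=True)
    return [(name, round(score, 3)) for score, name in scored[:k] if score > 0]


if __name__ == "__main__":
    index = build_index(FILES)
    for name, score in search(index, "subscription revenue"):
        print(f"[{name}] score={score}")
```

Here a query for "subscription revenue" surfaces `final_v3.pptx` first and `scan_0042.pdf` second, even though neither filename hints at the topic. A real retrieval-augmented pipeline would typically also split files into smaller chunks and hand the retrieved passages to a local LLM to compose the referenced answer.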

Unlike most cloud-based assistants, Hyperlink keeps all user data on the device. The files it scans, from PDFs and slides to images, stay private, so no personal or confidential information leaves the computer.

This approach should appeal to professionals who handle sensitive data but still want the performance benefits of generative AI.

Users get access to fast contextual responses without the risk of data exposure that comes with remote storage or processing.

Nvidia’s RTX optimizations also speed up the indexing stage: the company claims Hyperlink’s retrieval-augmented generation (RAG) pipeline now indexes dense data folders up to three times faster.

A typical 1GB collection that once took nearly 15 minutes to process can now be indexed in about 5 minutes.
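As a quick sanity check on those figures, which are approximate ("nearly" and "about"), the short sketch below converts them into average indexing throughput. It assumes decimal units (1GB = 1,000MB), and the function name is hypothetical.

```python
def indexing_throughput_mb_per_s(size_gb, minutes):
    """Average indexing throughput in MB/s, assuming 1 GB = 1000 MB."""
    return size_gb * 1000 / (minutes * 60)


before = indexing_throughput_mb_per_s(1, 15)  # roughly 1.11 MB/s
after = indexing_throughput_mb_per_s(1, 5)    # roughly 3.33 MB/s
speedup = after / before

if __name__ == "__main__":
    print(f"before: {before:.2f} MB/s, after: {after:.2f} MB/s, speedup: {speedup:.1f}x")
```

The ratio works out to 3x, which is consistent with Nvidia’s "up to three times faster" indexing claim.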

Faster LLM inference also means answers appear sooner, making everyday tasks such as meeting preparation, study sessions or report analysis smoother.

By pairing local reasoning with GPU acceleration, Hyperlink combines convenience with control, making it a useful AI tool for people who want to keep their data private.
