- Meta and Nvidia launch multi-year partnership for hyperscale AI infrastructure

- Millions of Nvidia GPUs and Arm-based CPUs will handle extreme AI workloads

- Unified architecture spans data centers and Nvidia cloud partner deployments

Meta has announced a multi-year partnership with Nvidia aimed at building hyperscale AI infrastructure capable of handling some of the largest workloads in the technology sector.

This collaboration will deploy millions of GPUs and Arm-based CPUs, expand network capacity, and integrate advanced data processing techniques to protect privacy across the company’s platforms.

The initiative seeks to combine Meta’s extensive production workloads with Nvidia’s hardware and software ecosystem to optimize performance and efficiency.

Uniform architecture across data centers

The two companies are creating a unified infrastructure architecture that spans Meta's own data centers and deployments by Nvidia cloud partners.

This approach simplifies operations while providing scalable, high-performance computing resources for AI training and inference.

“No one is deploying artificial intelligence at Meta’s scale—integrating front-line research with industrial-scale infrastructure to power the world’s largest personalization and recommendation systems for billions of users,” said Jensen Huang, founder and CEO of Nvidia.

“Through deep codesign across CPUs, GPUs, networking and software, we’re bringing the entire Nvidia platform to Meta’s researchers and engineers as they build the foundation for the next AI frontier.”

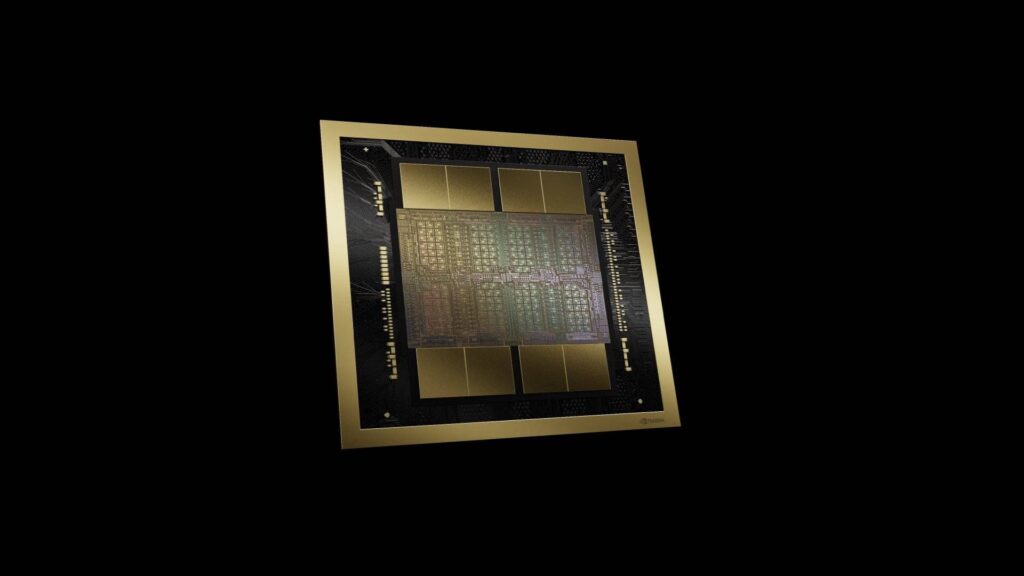

Nvidia’s GB300-based systems will form the backbone of these deployments. They will offer a platform that integrates compute, memory and storage to meet the demands of next-generation AI models.

Meta is also extending Nvidia Spectrum-X Ethernet networking across its infrastructure footprint, aiming to deliver predictable, low-latency performance while improving operational and energy efficiency for large workloads.

Meta has started using Nvidia Confidential Computing to support AI-powered features in WhatsApp, allowing machine learning models to process user data while preserving privacy and integrity.

The companies plan to extend this approach to other Meta services by integrating privacy-enhancing AI techniques into more applications.

Meta and Nvidia engineering teams are working closely together to codesign AI models and optimize software across the infrastructure stack.

By tailoring hardware, software and workloads to one another, the companies aim to improve performance per watt and accelerate training for advanced models.

Large-scale deployment of Nvidia Grace CPUs is a core part of this effort, with the collaboration representing the first major Grace-only deployment at this scale.

The companies are also optimizing CPU ecosystem libraries to improve throughput and energy efficiency across successive generations of AI workloads.

“We are excited to expand our partnership with Nvidia to build leading clusters using their Vera Rubin platform to deliver personal superintelligence to everyone in the world,” said Mark Zuckerberg, founder and CEO of Meta.
