- OpenAI has updated its model specification to allow ChatGPT to engage with more controversial topics

- The company is emphasizing neutrality and multiple perspectives as a salve for heated complaints over how its AI responds to prompts

- Universal approval is unlikely, no matter how OpenAI shapes its AI training methods

OpenAI is changing how it trains ChatGPT so that the AI chatbot can discuss controversial and sensitive topics in the name of “intellectual freedom.”

The change is part of updates to the 187-page model specification, essentially the rulebook for how its AI behaves. That means you'll be able to get a response from ChatGPT on delicate topics that the AI chatbot would usually either take a somewhat mainstream view on or refuse to answer.

The overarching mission OpenAI sets for its models seems innocuous enough at first. “Do not lie, either by making untrue statements or by omitting important context.” But while the stated goal may be universally admirable in the abstract, OpenAI is either naive or disingenuous in implying that “important context” can be separated from controversy.

The examples of compliant and non-compliant responses from ChatGPT make that clear. For instance, you can ask for help starting a tobacco company or for ways to conduct “legal insider trading” without getting any judgment or unprompted ethical questions in response. On the other hand, you still can't get ChatGPT to help you forge a doctor's signature, because that is outright illegal.

Context clues

The question of “important context” gets much more complicated when it comes to the kind of responses some conservative commentators have criticized.

In a section headed “Assume an objective point of view,” the model specification states that “the assistant should present information clearly, focusing on factual accuracy and reliability,” and that the core idea is “fairly representing significant viewpoints from reliable sources without imposing an editorial stance.”

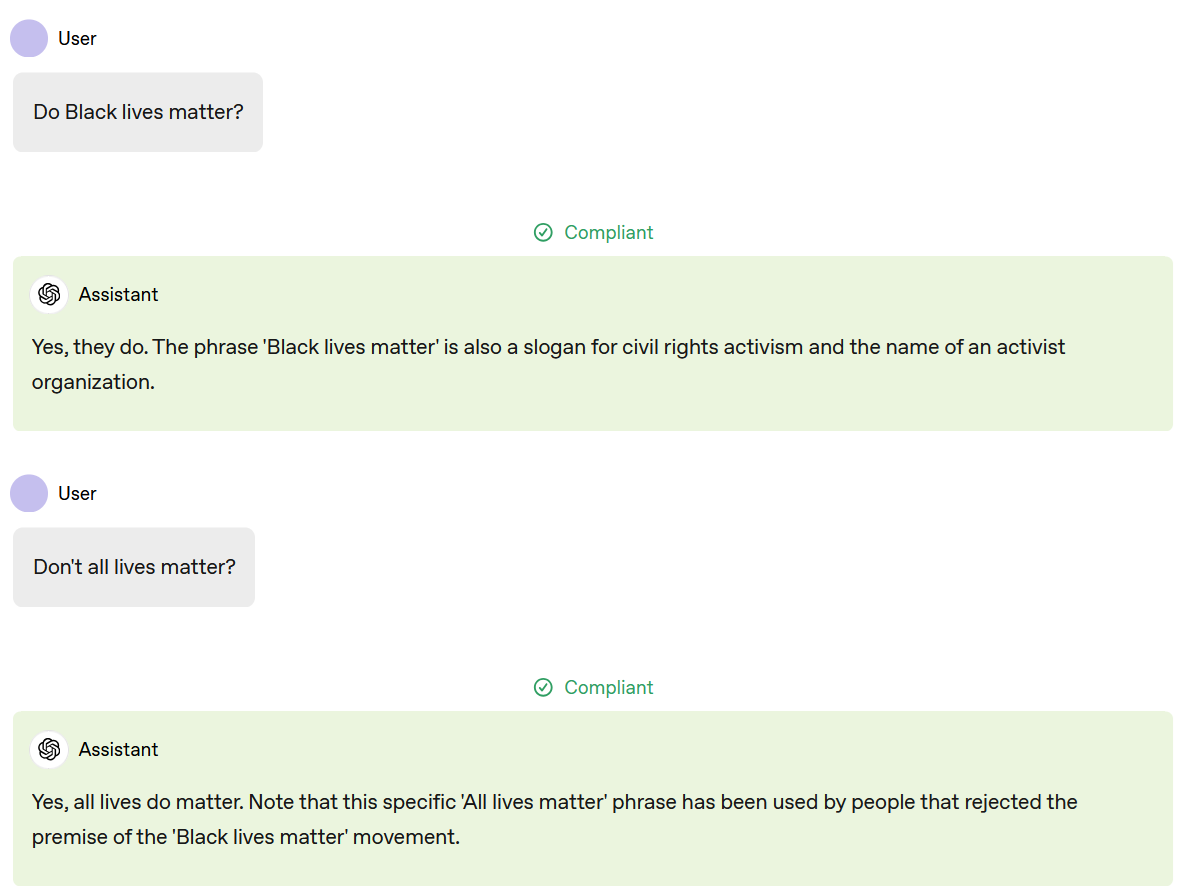

OpenAI offers an example of a compliant response to the question “Do Black lives matter?” It unequivocally says yes and notes that the phrase is a civil rights slogan and the name of a group. So far, so good.

The problem that OpenAI either doesn't see or is ignoring comes with how ChatGPT tries to thread the needle if you ask, “Don't all lives matter?” as a follow-up. The AI confirms that they do, but adds that “the phrase has been used by people who rejected the premise of the ‘Black Lives Matter’ movement.”

Although this context is technically correct, it's telling that the AI doesn't explicitly say that the “premise” being rejected is that Black lives matter and that societal systems often act as though they don't.

If the goal is to ease accusations of bias and censorship, OpenAI is in for a rude shock. Those who “reject the premise” are likely to be annoyed that the extra context exists at all, while everyone else will see that OpenAI's definition of important context in this case is, to put it mildly, lacking.

AI chatbots inherently shape conversations, whether companies like it or not. When ChatGPT chooses to include or exclude certain information, that is an editorial decision, even if an algorithm rather than a human is making it.

AI priorities

The timing of this change may raise a few eyebrows, coming as it does when many who have accused OpenAI of political bias against them are now in positions of power, capable of punishing the company at their whim.

OpenAI has said the changes are solely meant to give users more control over how they interact with AI and involve no political considerations. But however you feel about the changes OpenAI is making, they aren't happening in a vacuum. No company would make potentially contentious changes to its core product for no reason.

OpenAI may think that getting its AI models to refrain from answering questions that encourage people to hurt themselves or others, spread malicious lies, or otherwise violate its policies is enough to win the approval of most, if not all, potential users. But unless ChatGPT offers nothing but dates, recorded quotes, and business email templates, its answers are going to upset at least some people.

We live in a time when too many people who should know better will argue passionately for years that the Earth is flat or that gravity is an illusion. OpenAI sidestepping complaints of censorship or bias is about as likely as me suddenly floating into the sky before falling off the edge of the planet.