- OpenAI is expected to release its Sora 2 AI video model soon

- Sora 2 is facing fierce competition from Google’s Veo 3 model

- Veo 3 already offers features that Sora lacks, and OpenAI will need to improve both Sora’s capabilities and its ease of use to win over potential customers

OpenAI appears to be finalizing plans to release Sora 2, the next iteration of its text-to-video model, based on references discovered on OpenAI’s servers.

Nothing is officially confirmed, but there is evidence that Sora 2 will be a major upgrade aimed squarely at Google’s Veo 3 AI video model. It’s not just a race to generate prettier pixels; it’s about sound, and about producing what users imagine when they write a prompt.

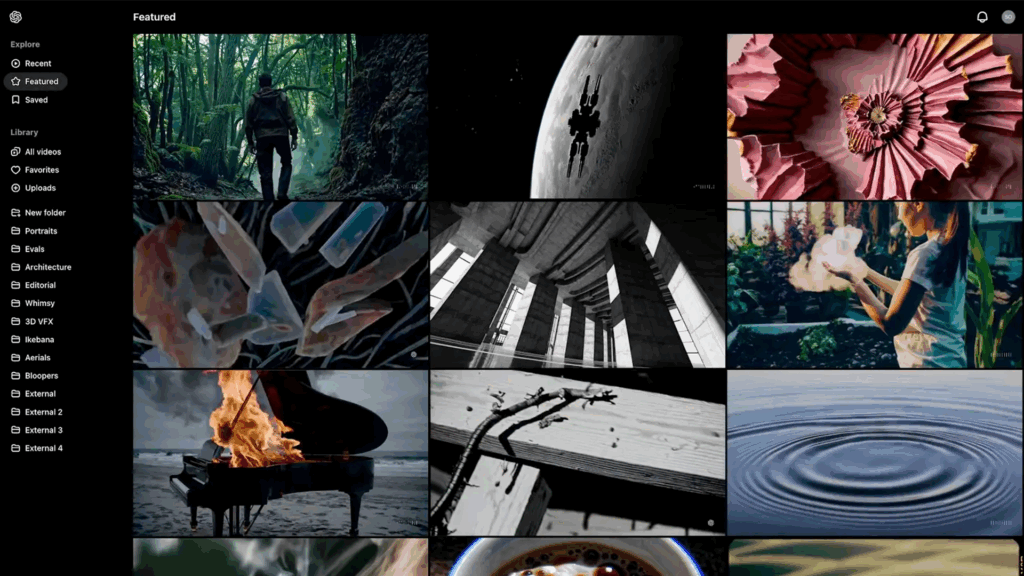

OpenAI’s Sora impressed many when it debuted with its high-quality visuals. However, its clips were silent films. When Veo 3 debuted this year, its short clips came with speech and ambient sound baked in and synchronized. Not only could you see a man pouring coffee in slow motion, but you could also hear the gentle splash of the liquid, the clink of ceramic, and even the hum of the diner around the digital character.

To make Sora 2 stand out as more than just a lesser alternative to Veo 3, OpenAI has to figure out how to weave believable voices, sound effects, and ambient noise into even better versions of its visuals. Getting sound right, especially lip sync, is difficult. Most AI video models can show you a face saying words; the magic trick is making it look as though those words actually came from that face.

Veo 3 isn’t perfect at matching audio to image, but there are examples of videos with surprisingly tight audio-to-mouth coordination, background music that matches the mood, and sound effects that fit the video’s intent.

Granted, a maximum of eight seconds per video limits the room for success or failure, but believability has to come before length. And it’s hard to deny that Veo 3 can produce videos that both look and sound like real cats jumping off a high dive into a pool. Still, if Sora 2 can stretch to 30 seconds or more at consistent quality, it is easy to see it attracting users looking for more room to create AI videos.

Sora 2’s cinematic mission

OpenAI’s Sora can already produce up to 20 seconds of high-quality video. And since it’s embedded in ChatGPT, you can make it part of a larger project. This flexibility helps Sora stand out, but the absence of sound is conspicuous. To compete directly with Veo 3, Sora 2 must find its voice, and not only find it but weave it smoothly into the videos it produces. Sora 2 may have good sound, but if it can’t match the seamless way Veo 3’s sound connects with its visuals, that may not matter.

At the same time, giving Sora 2 a voice could create its own problems. With each new generation of AI video models, there is more concern about blurring the line with reality. Sora and Veo 3 do not allow prompts involving real people, violence, or copyrighted content. But adding audio brings a whole new dimension of concern about controlling the origin and use of realistic voices.

The other big question is pricing. Google keeps Veo 3 behind the Gemini Advanced paywall, and you really need the $250-a-month AI Ultra tier if you want to use Veo 3 regularly. OpenAI may bundle access to Sora 2 into its ChatGPT Plus and Pro tiers in a similar way, but if it can offer more at the cheaper tier, it will probably expand its user base quickly.

For the average person, the choice of AI video tool will come down to price and ease of use as much as to features and video quality. OpenAI has a lot to do if Sora 2 is to be more than a quiet blip in the AI race, but it looks like we will soon find out how well it can compete.