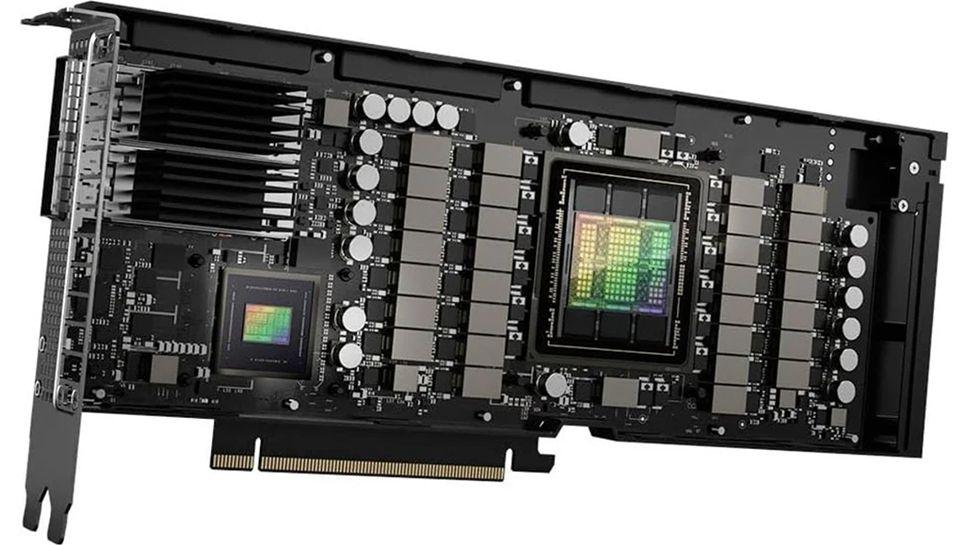

- Nvidia’s H800 was launched in March 2023 and is a cut off version of H100

- It is also markedly slower than Nvidia’s H200 and AMDS instinct series

- These artificial restrictions have forced Deepseeks technique to innovate

It was widely assumed that the United States would remain undisputed as the global AI superpower, especially after President Donald Trump’s recent announcement of Project Stargate -an initiative of $ 500 billion to strengthen AI infrastructure across the United States. This week, however, there was a seismic shift with the arrival of China’s Deepseek. Deepseek evolved into a fraction of the cost of its American rivals and came out and apparently out of nowhere and had such an impact that it dried up $ 1 trillion from the market value of the US tech stock, with Nvidia the largest accident.

Of course, everything developed in China will be very secret, but a technical paper that was published a few days before the chat model stunned AI viewers give us some insight into the technology that drives the Chinese equivalent to Chatgpt.

In 2022, the United States blocked the import of advanced NVIDIA GPUs to China to tighten control over critical AI technology and have since imposed further restrictions, but it is obviously not stopped Deepseek. According to the paper, the company trained its V3 model on a cluster of 2,048 NVIDIA H800 GPUs – paralyzed versions of the H100.

Exercise on cheap

The H800, launched in March 2023, to comply with export restrictions to China and have 80 GB HBM3 memory with 2TB/s bandwidth.

It hangs behind the newer H200, which offers 141 GB HBM3E memory and 4.8 TB/S bandwidth, and AMD’s instinct MI325X, which exceeds both 256 GB HBM3E memory and 6TB/s bandwidth.

Each knot in cluster Deepseek trained on houses 8 GPUs associated with NVLink and NVSWitch for intra-node communication, while infiniband connections handle communication between nodes. The H800 has lower NVLINK tape width compared to the H100, and this naturally affects Multi-GPU communication performance.

Deksek-V3 required a total of 2.79 million GPU hours for prior and fine-tuning of 14.8 trillion tokens using a combination of pipeline and data parallelism, memory optimizations and innovative quantization techniques.

The next platformwhich has made a deep dive in how Deepseek works, “at a price of $ 2 per GPU hour – We have no idea if it is actually the prevailing price in China – then it only cost $ 5.58 millions to train v3. “