- Future AI memory chips could demand more power than entire industrial zones combined

- 6TB of memory in a single GPU sounds amazing until you see the power draw

- HBM8 stacks are impressive in theory but frightening in practice for any energy-conscious business

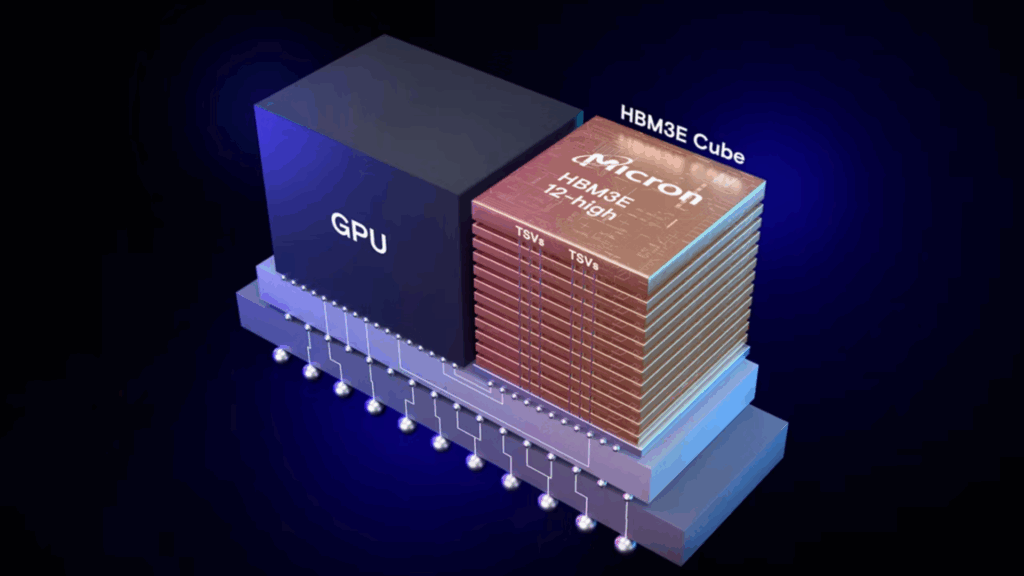

The relentless drive to increase AI processing power is ushering in a new era of memory technology, but it comes at a cost that raises practical and environmental concerns, experts have warned.

Research from the Korea Advanced Institute of Science & Technology (KAIST) and the Terabyte Interconnection and Package Laboratory (TERA) suggests that by 2035, AI GPU accelerators equipped with 6TB of HBM could become a reality.

Although these developments are technically impressive, they also highlight the steep power requirements and growing complexity involved in pushing the boundaries of AI infrastructure.

Rise in AI GPU memory capacity brings enormous power consumption

The roadmap reveals that the evolution from HBM4 to HBM8 will deliver major gains in bandwidth, memory stacking, and cooling techniques.

Starting in 2026 with HBM4, Nvidia’s Rubin and AMD’s Instinct MI400 platforms will incorporate up to 432GB of memory, with bandwidth reaching nearly 20TB/s.

This memory type uses direct-to-chip liquid cooling and custom packaging methods to handle power densities of around 75 to 80W per stack.

HBM5, projected for 2029, doubles the I/O count and moves toward immersion cooling, with up to 80GB per stack consuming 100W.

Power requirements will continue to climb with HBM6, expected in 2032, which pushes bandwidth to 8TB/s and stack capacity to 120GB, with each stack drawing up to 120W.

These figures add up quickly when considering full GPU packages, which are expected to consume up to 5,920W per chip, assuming 16 HBM6 stacks in a system.
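
As a rough check, the sketch below splits that 5,920W package figure using only the numbers above (16 HBM6 stacks at up to 120W each). The "everything else" value is simply the remainder attributed to the GPU die and the rest of the package; it is an inference from the arithmetic, not a figure reported by the researchers.

```python
# Back-of-the-envelope split of the projected HBM6-era package power.
# Inputs are the figures quoted in the article; the remainder is derived, not reported.
HBM6_STACKS = 16              # stacks per GPU package (article's assumption)
HBM6_WATTS_PER_STACK = 120    # up to 120W per stack
PACKAGE_WATTS = 5_920         # projected total per chip

memory_watts = HBM6_STACKS * HBM6_WATTS_PER_STACK   # power attributable to HBM alone
rest_watts = PACKAGE_WATTS - memory_watts            # whatever is left for the rest of the package
memory_share = memory_watts / PACKAGE_WATTS

print(f"HBM6 memory: {memory_watts} W ({memory_share:.0%} of package)")
print(f"Everything else: {rest_watts} W")
```

On these numbers the memory stacks alone account for 1,920W, roughly a third of the package, leaving about 4,000W for everything else.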

By the time HBM7 and HBM8 arrive, the numbers stretch into previously unimaginable territory.

HBM7, expected around 2035, triples bandwidth to 24TB/s and enables up to 192GB per stack. The architecture supports 32 memory stacks, pushing total memory capacity beyond 6TB, but the power requirement reaches 15,360W per package.
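
The HBM7 headline numbers can be checked against each other with a few lines of arithmetic. This sketch assumes the bandwidth figures quoted for HBM6 (8TB/s) and HBM7 (24TB/s) are directly comparable, and uses decimal units (1TB = 1,000GB) for the capacity conversion.

```python
# Cross-checking the HBM7 figures quoted in the article.
# Assumption: the HBM6 and HBM7 bandwidth figures are like-for-like (same basis).
HBM6_TBPS = 8           # bandwidth quoted for HBM6
HBM7_TBPS = 24          # bandwidth quoted for HBM7
HBM7_GB_PER_STACK = 192
HBM7_STACKS = 32        # stacks supported per package

bandwidth_ratio = HBM7_TBPS / HBM6_TBPS          # "triples bandwidth"
total_gb = HBM7_STACKS * HBM7_GB_PER_STACK       # total HBM per package

print(f"Bandwidth vs HBM6: {bandwidth_ratio:.0f}x")
print(f"Total capacity: {total_gb} GB (~{total_gb / 1000:.2f} TB)")
```

32 stacks of 192GB come to 6,144GB, which clears 6TB whether the article means decimal terabytes or binary ones (6,144 is exactly 6 x 1,024).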

The estimated 15,360W power consumption represents a dramatic seven-fold increase in just nine years.
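
To put "seven-fold in nine years" in more familiar terms, the sketch below works backwards to the starting point that claim implies and converts it into a compound annual growth rate. The article does not say which baseline it used, so the implied starting figure is only what the stated ratio works out to, not a number from the research.

```python
# What "seven-fold in nine years" implies, using only the article's figures.
# The baseline is derived from the stated ratio; the source does not name it.
HBM7_PACKAGE_WATTS = 15_360
GROWTH_FACTOR = 7
YEARS = 9

implied_baseline_watts = HBM7_PACKAGE_WATTS / GROWTH_FACTOR   # package power nine years earlier
annual_growth = GROWTH_FACTOR ** (1 / YEARS) - 1              # compound annual growth rate

print(f"Implied starting point: ~{implied_baseline_watts:,.0f} W per package")
print(f"Compound growth: ~{annual_growth:.1%} per year")
```

A seven-fold rise over nine years works out to roughly 24% more power per package every year, from an implied starting point of about 2,200W.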

This means that a million of these in a data center would consume 15.36GW, roughly equal to the UK’s entire onshore wind generation capacity in 2024.
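
The fleet-level figure is a straight multiplication, shown below for a hypothetical deployment of one million HBM7-era packages. It counts package power only, as the article does, and treats every package as drawing its full 15,360W at once.

```python
# Scaling one HBM7-era package to a hypothetical one-million-unit deployment.
# Package power only; assumes every unit draws its full rated power simultaneously.
WATTS_PER_PACKAGE = 15_360
PACKAGES = 1_000_000

total_gw = WATTS_PER_PACKAGE * PACKAGES / 1e9   # watts -> gigawatts
print(f"{total_gw:.2f} GW")
```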

HBM8, projected for 2038, expands capacity and bandwidth further, with 64TB/s and up to 240GB of capacity per stack, using 16,384 I/Os running at 32Gbps.
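
The 64TB/s figure follows from the interface width and per-pin speed. This sketch assumes all 16,384 I/Os run at the full 32Gbps and ignores protocol and refresh overhead; the round 64 appears when dividing by 1,024 (binary units) rather than 1,000.

```python
# Per-stack bandwidth from I/O width and per-pin data rate (article's HBM8 figures).
# Assumption: every I/O runs at full speed and there is no protocol overhead.
IO_COUNT = 16_384       # I/Os per stack
GBPS_PER_IO = 32        # gigabits per second per I/O

total_gbit_s = IO_COUNT * GBPS_PER_IO     # aggregate raw bit rate in Gb/s
total_gbyte_s = total_gbit_s / 8          # convert bits to bytes: GB/s

print(f"{total_gbyte_s / 1000:.1f} TB/s (decimal)")
print(f"{total_gbyte_s / 1024:.1f} TB/s (binary, i.e. TiB/s)")
```

The raw rate is 524,288Gb/s, or 65,536GB/s per stack, which is about 65.5TB/s in decimal units and exactly 64 in binary units.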

It also features coaxial TSVs (through-silicon vias), embedded cooling, and double-sided interposers.

The growing demands of AI and large language model (LLM) inference have driven researchers to introduce concepts such as HBF (High-Bandwidth Flash) and HBM-centric computing.

These designs propose integrating NAND flash and LPDDR memory into the HBM stack, relying on new cooling methods and interconnects, but their feasibility and real-world efficiency have yet to be proven.