- GTIG finds that threat actors are cloning mature AI models using distillation attacks

- Sophisticated malware can use AI to rewrite its own code in real time to avoid detection

- State-sponsored groups use AI to create highly convincing phishing kits and social engineering campaigns

If you have used any modern AI tools, you will know that they can be a great help in reducing the tedium of mundane, burdensome tasks.

Well, it turns out that threat actors feel the same way. The latest AI Threat Tracker report from the Google Threat Intelligence Group (GTIG) has found that attackers are using AI more than ever.

From probing how AI models reason in order to clone them, to integrating AI into attack chains to bypass traditional network-based detection, GTIG has outlined some of the most pressing threats. Here's what they found.

How threat actors use artificial intelligence in attacks

First, GTIG found that threat actors are increasingly using 'distillation attacks' to rapidly clone large language models (LLMs) for their own purposes. Attackers send a huge number of prompts to work out how the LLM responds to queries, and then use the answers to train their own model.

Attackers can then use the distilled model to avoid paying for the legitimate service, to study how the original LLM is built, or to search for weaknesses in their copy that can also be exploited in the legitimate service.

AI is also being used to support intelligence gathering and social engineering campaigns. Both Iranian and North Korean state-sponsored groups have used AI tools in this way: the Iranian groups used AI to gather information about business relationships and build a pretext for contact, while the North Korean groups used it to compile intelligence to help plan attacks.

GTIG has also seen an increase in the use of AI to create highly convincing phishing kits that are distributed en masse to harvest credentials.

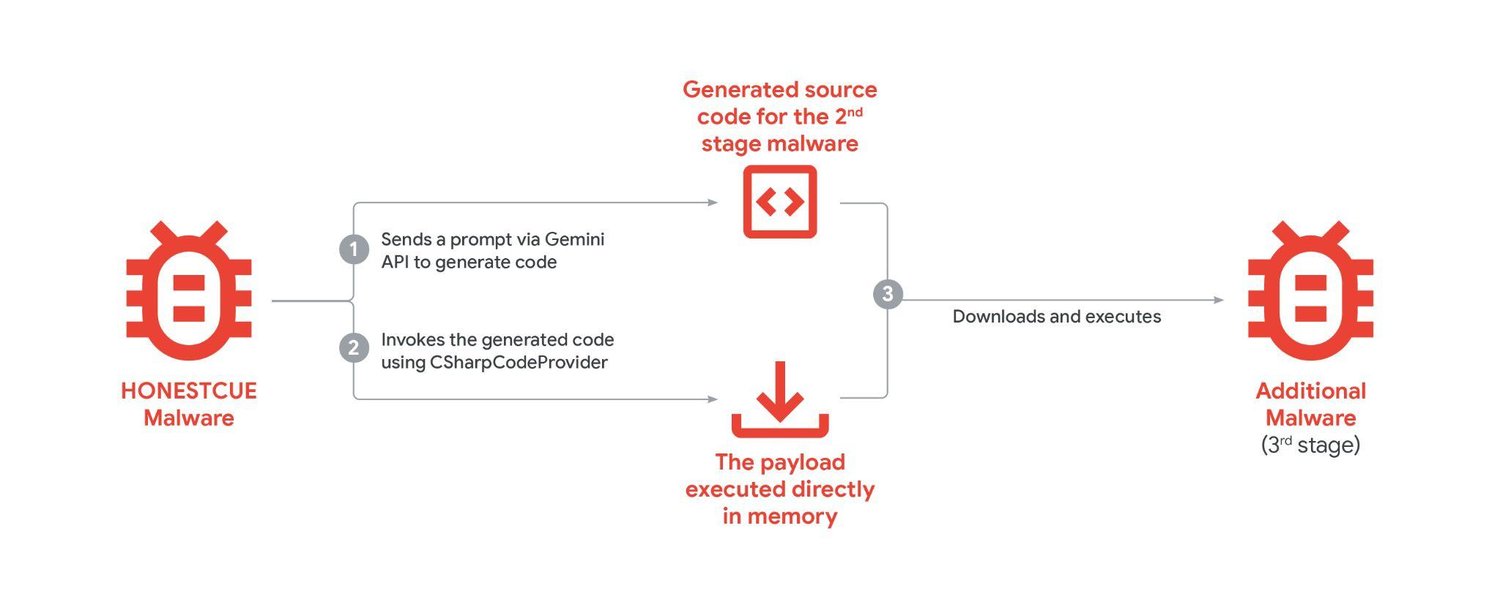

Additionally, some threat actors integrate AI models into malware so that it can adapt to evade detection. One example, tracked as HONESTCUE, evaded network-based detection and static analysis by using Gemini to rewrite and execute code during an attack.

But not all threat actors are the same. GTIG has also noted serious demand for custom AI tools built for attackers, with specific calls for tools capable of writing malware code. For now, attackers are relying on distillation attacks to create custom models for offensive use.

However, if such tools were to become widely available and easy to distribute, threat actors would likely be quick to build malicious AI into their attack vectors to improve the performance of malware, phishing and social engineering campaigns.

To defend against AI-augmented malware, many security vendors are deploying their own AI tools to fight back. Rather than relying on static analysis, these tools analyze potential threats in real time to recognize the behavior of AI-augmented malware.

AI is also being used to scan emails and messages for phishing in real time, at a scale that would otherwise require thousands of hours of human work.

In addition, Google is actively monitoring Gemini for potentially malicious use, and has developed Big Sleep, a tool that helps find software vulnerabilities, and CodeMender, a tool that helps repair them.
