- Apple is reportedly still working on cameras in AirPods

- iOS 18’s Visual Intelligence is at the core of Apple’s plans

- Features are still “generations away”

We have known the “what” for some time: Apple is experimenting with cameras in its AirPods. Now we may know the “why”. A new report sheds light on Apple’s plans for future AirPods, and if the technology can do what it promises, it could be a really important personal security feature.

However, there is an important caveat: the features are “still at least generations away from hitting the market”.

The report comes from the well-connected Mark Gurman at Bloomberg, who says that “Apple’s ultimate plan for visual intelligence goes far beyond the iPhone.” AirPods are a big part of that plan.

According to Gurman, Visual Intelligence (recognizing the world around you and providing useful information or help) is seen as a very big deal inside Apple, and the company plans to put cameras in both the Apple Watch and the Apple Watch Ultra. As with AirPods, “This would help the device to see the outside world and use AI to provide relevant information.”

How AirPods could work with Visual Intelligence

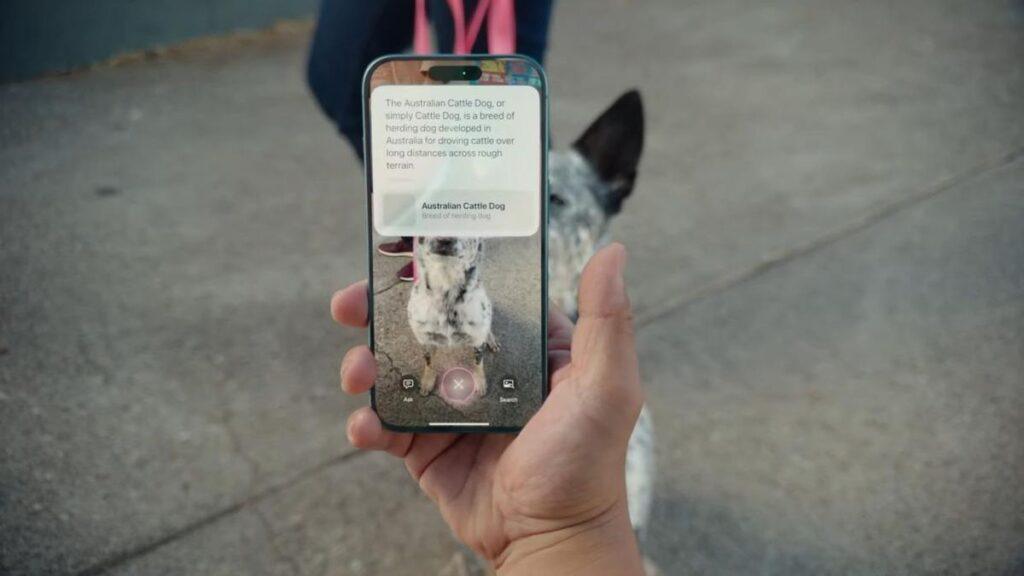

Visual Intelligence arrived with iOS 18 on the iPhone 16. It lets you point the camera at something and find out more about it: the type of plant, a dog’s breed (as in the picture at the top of this article), the opening hours of a café you’ve just found, and so on.

Visual Intelligence can also translate text, and maybe one day it will be able to help people like me who have a shockingly bad memory for names and faces.

The big problem with Visual Intelligence, however, is that you have to take your phone out to use it, and there are situations where you don’t want to do that. It reminds me of when Apple brought Maps to the Apple Watch: by making it possible to use maps without broadcasting “I’m not from here and I’m hopelessly lost. I also have a very expensive phone” to every villain in the neighborhood, it was an important personal security feature.

This could be one too. If Apple makes it possible to invoke Visual Intelligence with a simple head gesture and a pinch of the stems, you could get important information, such as a translation of a directions sign in another country, without waving your phone around.

We are still a long way from actually getting these features, so don’t expect them in the AirPods Pro 3, which will probably arrive later in 2025. But I’m excited about the prospects: imagine Apple Intelligence, but good.