Comino grabbed the headlines with the launch of Grando, its water-cooled, AMD-based workstation with eight NVIDIA RTX 5090 GPUs. During an extensive email exchange with its CTO/co-founder and its commercial director, I found that Grando is far more versatile than I had expected.

Dig into its configurator and you will notice that you can configure the system with up to eight AMD Radeon RX 7900 XTX GPUs, because why not?

“Yes, we can pack 8x 7900 XTX, but with an increased lead time. In fact, we can pack all 8 GPUs + EPYC in a single system,” Alexey Chistov, CTO of Comino, told me when I asked further.

It does not currently offer Intel’s promising Arc GPUs, but it could if the market demanded such solutions.

“We can design a water block for any GPU; it takes about a month,” Chistov highlighted, “but we do not go for all sorts of GPUs. We choose specific models and brands, and we only go for high-end GPUs that justify the extra cost of liquid cooling, because if it can work properly air-cooled, why bother? We try to stick to one or two different models per generation to avoid having more SKUs (stock-keeping units) of water blocks. You can have an RTX 4090, H200, L40S or any other GPU that we have a water block for in a single system, if your work will benefit from such a combination.”

Rimac of HPC

So how does Comino achieve such flexibility? The company pitches itself as an engineering company, proudly stating in its slogan that its machines are “engineered, not just assembled”. Think of Comino as the Rimac of HPC: obscenely powerful, fast, agile and beastly. Like Rimac, it focuses on the very top of the line, on business use and absolute performance.

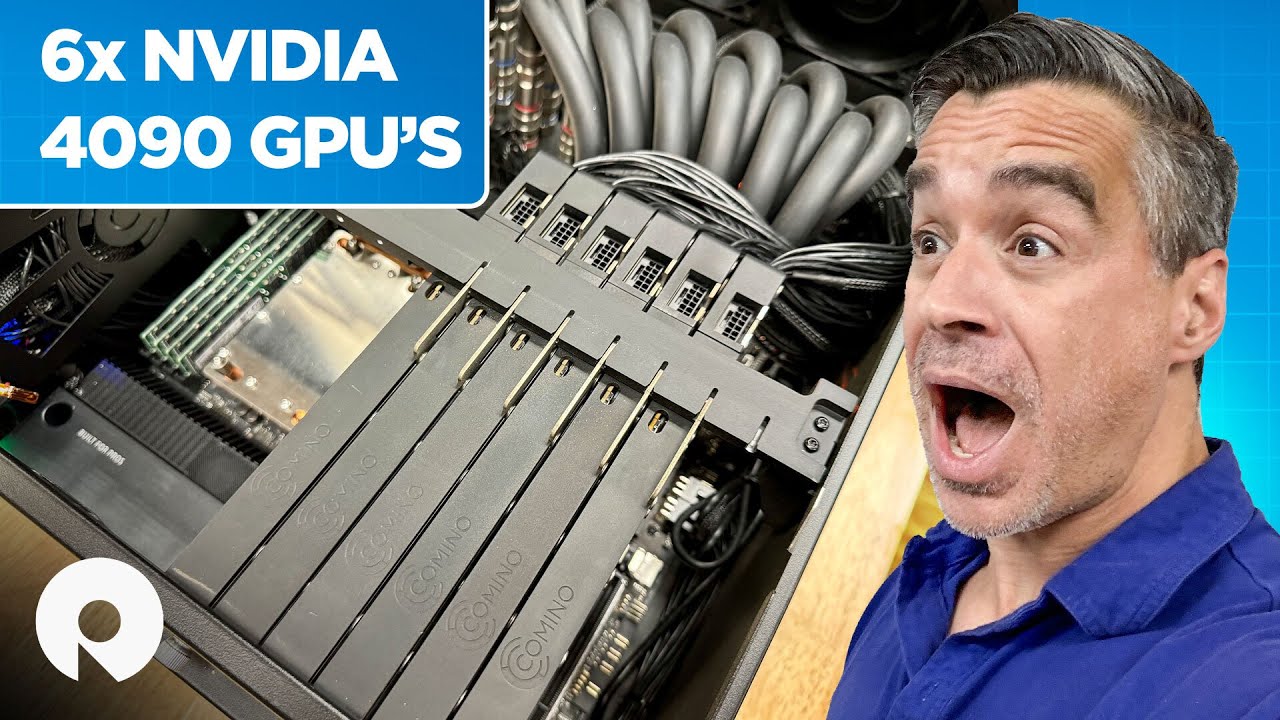

Its flagship product, Grando, is liquid-cooled and was designed from the start to accommodate up to eight GPUs, which means that it is very likely to be future-proof for several NVIDIA generations; more on that in a moment.

One of their most important goals, Chistov told me, “is always to fit into a single PCIe slot; that’s how we can fill all the PCIe slots on the motherboard and fit eight GPUs in a Grando server. The chassis is also designed by the Comino team, so everything works as ‘one’. This is how a triple-slot GPU like the RTX 5090 can be converted to fit into a single slot.”

With that in mind, the company has prepared a “solution that is capable of functioning at a coolant temperature of 50°C without throttling, so if you lower the coolant temperature to 20°C and set the coolant flow to 3-4 l/min, the water block can remove about 1800W of heat from the 5090 chip, with the chip temperature around 80-90°C.”

That is right: a single Comino GPU water block could remove 1,800W of heat from a single “hypothetical 5090” that generated that amount of heat, provided the inlet coolant temperature is about 20°C and the coolant flow is no less than 3-4 liters per minute.
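As a rough sanity check on these figures, the steady-state energy balance Q = m_dot × c_p × ΔT gives the temperature rise of the coolant as it passes through one block. The sketch below assumes the coolant behaves like plain water (density about 1 kg/L, specific heat about 4186 J/(kg·K)); Comino has not stated what coolant it uses, so the function name and constants are illustrative assumptions, not its specifications.

```python
# Rough coolant temperature rise across a single water block, from the
# steady-state energy balance Q = m_dot * c_p * delta_T.
# Assumption: the coolant behaves like plain water (not stated by Comino).

WATER_DENSITY_KG_PER_L = 1.0   # approximate, near room temperature
WATER_CP_J_PER_KG_K = 4186.0   # specific heat of liquid water


def coolant_delta_t(heat_w: float, flow_l_per_min: float) -> float:
    """Return the outlet-minus-inlet coolant temperature rise in kelvin."""
    mass_flow_kg_per_s = flow_l_per_min * WATER_DENSITY_KG_PER_L / 60.0
    return heat_w / (mass_flow_kg_per_s * WATER_CP_J_PER_KG_K)


if __name__ == "__main__":
    # The 1800 W and 3-4 L/min figures are the ones Chistov quoted.
    for flow in (3.0, 4.0):
        rise = coolant_delta_t(1800.0, flow)
        print(f"1800 W at {flow:.0f} L/min -> coolant rises ~{rise:.1f} K")
```

At 3 to 4 L/min this works out to a rise of roughly 6.5 to 8.6 K, so coolant entering at 20°C would leave the block below 30°C, comfortably under the 50°C the block is said to tolerate.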

Packing eight of these “hypothetical GPUs” plus some other components could lead to a total system power draw of 15 kW, and such a fully loaded system would work “normally”, provided the coolant stayed at a constant 20°C and each water block received no less than 3-4 liters per minute.
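The 15 kW figure can be broken down with simple arithmetic. The sketch below uses the article's numbers (eight GPUs, 1,800W each, 15 kW total, 3-4 L/min per block) and adds one labeled assumption of its own: that the water blocks are plumbed in parallel, so each receives its own share of the flow. Comino has not described its loop topology, and `system_budget` is a hypothetical helper.

```python
# Back-of-the-envelope budget for a fully loaded eight-GPU system.
# Assumptions (hypothetical, not Comino specifications): every GPU dissipates
# the 1800 W quoted for the "hypothetical 5090", and the water blocks are
# plumbed in parallel so each one receives its own 3-4 L/min.

GPU_COUNT = 8
HEAT_PER_GPU_W = 1800.0
SYSTEM_TOTAL_W = 15_000.0               # total system draw quoted in the article
FLOW_PER_BLOCK_L_PER_MIN = (3.0, 4.0)


def system_budget(gpu_count, heat_per_gpu_w, system_total_w, flow_per_block):
    """Return (GPU heat in W, remaining W for other parts, total flow range in L/min)."""
    gpu_heat_w = gpu_count * heat_per_gpu_w
    other_w = system_total_w - gpu_heat_w          # CPU, board, drives, pumps...
    low, high = flow_per_block
    total_flow = (gpu_count * low, gpu_count * high)
    return gpu_heat_w, other_w, total_flow


if __name__ == "__main__":
    gpu_w, other_w, (lo, hi) = system_budget(
        GPU_COUNT, HEAT_PER_GPU_W, SYSTEM_TOTAL_W, FLOW_PER_BLOCK_L_PER_MIN)
    print(f"GPU heat: {gpu_w / 1000:.1f} kW, remaining budget: {other_w:.0f} W")
    print(f"Total GPU coolant flow: {lo:.0f}-{hi:.0f} L/min")
```

With these inputs the GPUs account for 14.4 kW, leaving about 600W of the 15 kW for the CPU and the rest of the platform, and the GPU loop as a whole would need a combined 24-32 L/min.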

Who needs that kind of performance?

So what kind of users splash out on multi-GPU systems? Chistov again: “There is no advantage in adding another 5090 if you are a gamer; it will not improve performance, because games cannot use multiple GPUs the way they used to with SLI, or even DirectX at some point. There are several applications we focus on for multi-GPU systems:

- AI inference: This is the most sought-after workload. In this scenario each GPU works “alone”, and the reason for packing multiple GPUs per node is to reduce the “cost per GPU” while scaling: you save rack space, spend less money on non-GPU hardware, and so on. Each GPU in a system is used to process AI requests, mostly generative AI, for example Stable Diffusion, Midjourney or DALL-E.

- GPU rendering: A popular workload, but it doesn’t always scale well as you add more GPUs. Octane and V-Ray scale pretty well (~15% less performance per GPU @ 8 GPUs), but Redshift does not (~35-40% less performance per GPU @ 8 GPUs).

- Life sciences: Different types of scientific calculations, for example CryoSPARC or RELION.

- Any GPU-bound workload in a virtualized environment. Using Hyper-V or other software, you can create multiple virtual machines to run any task, such as remote workstations, as StorageReview did with a Grando and the six RTX 4090 GPUs it had on review.”
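To put the rendering figures in perspective: if each GPU in an eight-GPU system delivers about 15% less than it would on its own, the system performs like 8 × 0.85 = 6.8 single GPUs. The sketch below simply applies Chistov's per-GPU loss figures; treating the loss as a uniform penalty on every GPU is a simplifying assumption, and no benchmark data is added.

```python
# Effective multi-GPU speedup from a per-GPU efficiency loss.
# The loss percentages are Chistov's figures; applying them as a uniform
# per-GPU penalty is a simplifying assumption.


def effective_speedup(gpu_count: int, per_gpu_loss: float) -> float:
    """Throughput relative to one GPU, given a fractional per-GPU loss."""
    if not 0.0 <= per_gpu_loss < 1.0:
        raise ValueError("per_gpu_loss must be in [0, 1)")
    return gpu_count * (1.0 - per_gpu_loss)


if __name__ == "__main__":
    cases = {
        "Octane / V-Ray (~15% loss)": [0.15],
        "Redshift (~35-40% loss)": [0.35, 0.40],
    }
    for name, losses in cases.items():
        speedups = ", ".join(
            f"{effective_speedup(8, loss):.1f}x" for loss in losses)
        print(f"{name}: {speedups} with 8 GPUs")
```

On these terms, eight GPUs buy roughly 6.8 times a single card's throughput in Octane or V-Ray, but only about 4.8 to 5.2 times in Redshift.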

Specifically for the RTX 5090, the most important improvement for AI workloads is the one-third increase in memory capacity (from 24 GB on the RTX 4090 to 32 GB), which makes NVIDIA’s new flagship better suited to inference, as you can fit a much larger AI model in memory. Then there is the much higher memory bandwidth, which also helps.
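A rough way to see why capacity matters for inference: model weights alone occupy the parameter count times the bytes per parameter. The sketch below compares a 24 GB card (the RTX 4090) with the 32 GB RTX 5090, assuming FP16 (2 bytes) and 8-bit (1 byte) weights and 1 GB = 10^9 bytes. It ignores activations, KV cache and framework overhead, all of which lower the real-world ceiling; `max_params_billions` is a hypothetical helper.

```python
# Upper bound on how many model parameters fit in GPU memory (weights only).
# Assumptions: 1 GB = 1e9 bytes; activations, KV cache and runtime overhead
# are ignored, so real-world limits are noticeably lower.

BYTES_PER_PARAM = {"FP16": 2, "INT8": 1}


def max_params_billions(memory_gb: float, bytes_per_param: int) -> float:
    """Largest parameter count (in billions) whose weights fit in memory_gb."""
    # GB / (bytes per parameter) gives billions of parameters directly.
    return memory_gb / bytes_per_param


if __name__ == "__main__":
    for card, mem_gb in (("RTX 4090", 24), ("RTX 5090", 32)):
        fits = ", ".join(
            f"{fmt}: ~{max_params_billions(mem_gb, size):.0f}B"
            for fmt, size in BYTES_PER_PARAM.items())
        print(f"{card} ({mem_gb} GB) -> {fits}")
```

By this crude measure, moving from 24 GB to 32 GB raises the FP16 ceiling from about 12 billion to about 16 billion parameters per GPU.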


In his review of the RTX 5090, TechRadar’s John Loeffler calls it the supercar of graphics cards and asks whether it is simply too powerful, noting that it is an absolute glutton for wattage.

“It’s excessive,” he quips, “especially if you only want it for gaming, as monitors that can really handle the frames this GPU can pump out are probably years away.”