- MI355X leads AMD’s new MI350 series with 288 GB of memory and full liquid-cooled performance

- AMD drops APU integration, focusing on rack-scale GPU flexibility

- FP6 and FP4 data types highlight the MI355X’s inference-optimized design choices

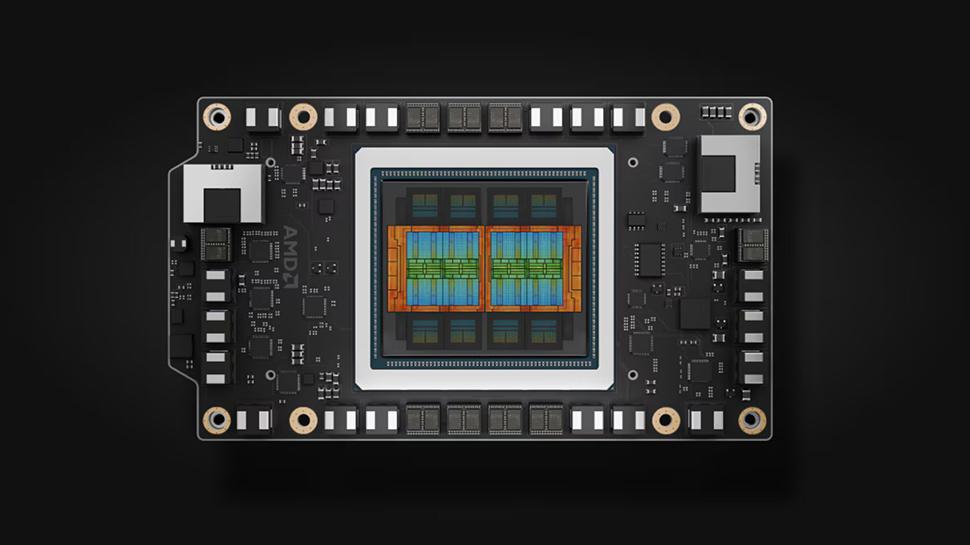

AMD has unveiled its new MI350X and MI355X GPUs for AI workloads at its 2025 Advancing AI event, offering two options built on its latest CDNA 4 architecture.

While both share a common platform, the MI355X sets itself apart as the higher-performance, liquid-cooled variant designed for demanding, large-scale deployments.

The MI355X supports up to 128 GPUs per rack and delivers high throughput for both training and inference workloads. It has 288 GB of HBM3E memory and 8 TB/s of memory bandwidth.
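These per-GPU figures scale linearly to the rack. The sketch below is a back-of-envelope calculation, not an AMD figure. It assumes decimal units and a fully populated 128-GPU rack. It computes the aggregate HBM per rack and how long one GPU needs to stream its entire memory once at the quoted 8 TB/s, which is a rough floor for a memory-bound inference step that touches all of it.

```python
# Back-of-envelope rack totals from the quoted per-GPU figures.
# Assumptions: decimal units (1 TB = 1,000 GB) and a fully populated rack.

GPUS_PER_RACK = 128        # upper bound quoted for MI355X racks
HBM_PER_GPU_GB = 288       # HBM3E capacity per GPU
BW_PER_GPU_TBPS = 8.0      # peak memory bandwidth per GPU, TB/s


def rack_hbm_tb(gpus: int = GPUS_PER_RACK, hbm_gb: float = HBM_PER_GPU_GB) -> float:
    """Total HBM in a fully populated rack, in TB."""
    return gpus * hbm_gb / 1000


def full_sweep_ms(hbm_gb: float = HBM_PER_GPU_GB, bw_tbps: float = BW_PER_GPU_TBPS) -> float:
    """Time to read every byte of one GPU's HBM once at peak bandwidth, in ms.

    This is a lower bound: real kernels rarely sustain peak bandwidth.
    """
    return hbm_gb / (bw_tbps * 1000) * 1000


if __name__ == "__main__":
    print(f"HBM per rack: {rack_hbm_tb():.3f} TB")
    print(f"One full HBM sweep: {full_sweep_ms():.0f} ms")
```

A full rack therefore holds roughly 36.9 TB of HBM, and a single pass over one GPU's memory takes at least about 36 ms at peak bandwidth.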

GPU-only design

AMD claims that the MI355X delivers up to 4 times the AI compute and 35 times the inference performance of its previous generation, thanks to architectural improvements and a move to TSMC’s N3P process.

Inside, the chip includes eight compute dies with 256 active compute units and a total of 185 billion transistors, a 21% increase over the previous model. The compute dies connect through redesigned I/O tiles, which AMD reduced from four to two to double internal bandwidth while lowering power consumption.
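The die and transistor figures imply two simple numbers. The sketch below assumes the 256 compute units are split evenly across the eight dies. The article does not say this. It also backs out the predecessor's approximate transistor count from the quoted 21% growth. Because that percentage is itself rounded, the result is only approximate.

```python
# Arithmetic implied by the quoted die and transistor figures.

COMPUTE_DIES = 8
ACTIVE_CUS = 256
TRANSISTORS_B = 185.0      # billions
GROWTH = 0.21              # "21% increase over the previous model" (rounded)


def cus_per_die(total_cus: int = ACTIVE_CUS, dies: int = COMPUTE_DIES) -> int:
    """Active compute units per compute die, assuming an even split."""
    if total_cus % dies:
        raise ValueError("CUs do not divide evenly across dies")
    return total_cus // dies


def implied_previous_transistors_b(current_b: float = TRANSISTORS_B,
                                   growth: float = GROWTH) -> float:
    """Back out the predecessor's transistor count (billions) from the growth rate."""
    return current_b / (1 + growth)


print(cus_per_die())                                # 32
print(round(implied_previous_transistors_b(), 1))   # 152.9
```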

The MI355X is a GPU-only design, dropping the CPU-GPU APU approach used in the MI300A. AMD says this decision better supports modular deployment and rack-scale flexibility.

It connects to the host via a PCIe 5.0 x16 interface and communicates with peer GPUs over seven Infinity Fabric links, reaching over 1 TB/s of GPU-to-GPU bandwidth.
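To put the two links in proportion, the sketch below compares PCIe 5.0 x16 with the fabric figure. The PCIe numbers come from the PCIe 5.0 signaling rate of 32 GT/s per lane with 128b/130b encoding, before protocol overhead. For the fabric, the code assumes that "over 1 TB/s" is an aggregate split evenly across the seven links, using 1,000 GB/s as the lower bound. The article does not say whether that figure is unidirectional or bidirectional, so treat the ratio as indicative only.

```python
# Compare the host link with the quoted peer-to-peer fabric bandwidth.
# PCIe 5.0: 32 GT/s per lane, 128b/130b line encoding.
# Assumption: ">1 TB/s" is an aggregate over all seven links, split evenly;
# 1 TB = 1,000 GB and the lower bound of 1,000 GB/s is used.

PCIE5_GT_PER_S = 32.0
ENCODING = 128 / 130
LANES = 16

IF_LINKS = 7
IF_AGGREGATE_GBPS = 1000.0


def pcie5_x16_gbps(lanes: int = LANES) -> float:
    """PCIe 5.0 bandwidth per direction in GB/s, after encoding, before protocol overhead."""
    return PCIE5_GT_PER_S * ENCODING * lanes / 8


def per_link_gbps(aggregate: float = IF_AGGREGATE_GBPS, links: int = IF_LINKS) -> float:
    """Per-link share of the fabric bandwidth under the even-split assumption."""
    return aggregate / links


host = pcie5_x16_gbps()
print(f"PCIe 5.0 x16: ~{host:.1f} GB/s per direction")
print(f"Infinity Fabric: ~{per_link_gbps():.0f} GB/s per link (even split)")
print(f"Fabric / host ratio: ~{IF_AGGREGATE_GBPS / host:.0f}x")
```

Under these assumptions, GPU-to-GPU traffic has roughly 16 times the bandwidth of the host link. That is why multi-GPU workloads try to keep data on the fabric rather than staging it through the CPU.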

Each HBM stack is paired with 32 MB of Infinity Cache, and the architecture supports newer, lower-precision formats such as FP4 and FP6.
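Lower-precision formats trade range and resolution for throughput and memory. As an illustration, the sketch below decodes 4-bit floats using the E2M1 layout from the OCP Microscaling (MX) specification. That layout has 1 sign bit, 2 exponent bits and 1 mantissa bit, with exponent bias 1 and no infinities or NaNs. Whether the MI355X uses exactly this layout is an assumption here, because the article names only "FP4". With this layout, the entire positive range of the format fits on one line.

```python
# Decode a 4-bit FP4 value in the E2M1 layout (OCP MX spec):
# 1 sign bit, 2 exponent bits, 1 mantissa bit, bias 1, no Inf/NaN.
# Assumption: the MI355X's FP4 follows this layout.

def decode_fp4_e2m1(code: int) -> float:
    if not 0 <= code <= 0xF:
        raise ValueError("FP4 codes are 4-bit values (0-15)")
    sign = -1.0 if code & 0b1000 else 1.0
    exponent = (code >> 1) & 0b11
    mantissa = code & 0b1
    if exponent == 0:
        # Subnormal: 0.m x 2^(1 - bias) = m * 0.5
        magnitude = mantissa * 0.5
    else:
        # Normal: 1.m x 2^(exponent - bias)
        magnitude = (1 + mantissa * 0.5) * 2.0 ** (exponent - 1)
    return sign * magnitude


print([decode_fp4_e2m1(c) for c in range(8)])
# [0.0, 0.5, 1.0, 1.5, 2.0, 3.0, 4.0, 6.0]
```

The format has only eight non-negative magnitudes. For that reason, MX-style formats attach a shared scale factor to each block of elements rather than storing values in raw FP4.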

The MI355X runs FP6 operations at FP4 rates, a feature AMD highlights as beneficial for inference-heavy workloads. It also offers 1.6 times the HBM3E memory capacity of Nvidia’s GB200 and B200, although memory bandwidth is the same. AMD claims 1.2x to 1.3x higher inference performance than Nvidia’s top products.
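Capacity matters because it bounds how large a model fits on one GPU. The sketch below counts weights only, ignoring KV cache, activations and runtime overhead, and treats 1 GB as 10^9 bytes. It estimates how many parameters fit at each precision. It also shows the 180 GB implied by the 1.6x comparison. That figure is derived here, not stated in the article.

```python
# Weights-only capacity: upper bound on parameters that fit at each precision.
# Ignores KV cache, activations and runtime overhead. Assumption: 1 GB = 10**9 bytes.

BITS_PER_PARAM = {"FP16": 16, "FP8": 8, "FP6": 6, "FP4": 4}


def max_params_billions(memory_gb: float, fmt: str) -> float:
    """Upper bound on parameter count (in billions) for weights stored in `fmt`."""
    bytes_per_param = BITS_PER_PARAM[fmt] / 8
    return memory_gb / bytes_per_param


mi355x_gb = 288
# Capacity implied by the "1.6 times" comparison: 288 / 1.6 = 180 GB (derived).
competitor_gb = mi355x_gb / 1.6

for fmt in BITS_PER_PARAM:
    print(f"{fmt}: {max_params_billions(mi355x_gb, fmt):.0f}B params on 288 GB, "
          f"{max_params_billions(competitor_gb, fmt):.0f}B on {competitor_gb:.0f} GB")
```

If FP6 really runs at FP4 rates, choosing FP6 over FP4 costs memory (about 384B versus 576B parameters on 288 GB here) rather than compute throughput.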

The GPU draws up to 1,400 W in its liquid-cooled form and delivers higher performance density per rack. AMD says this improves TCO by letting users scale compute without expanding their physical footprint.
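Combining the 1,400 W ceiling with the 128-GPU rack gives a rough sense of the power density involved. This is a minimal sketch. It assumes every GPU runs at its maximum draw around the clock. It counts GPU power only and leaves out CPUs, networking, cooling and power-conversion losses, so a real rack budget would be higher.

```python
# GPU-only power and daily energy for a fully populated rack.
# Assumptions: all 128 GPUs at the 1,400 W ceiling, 24 hours a day;
# CPUs, networking, cooling and conversion losses are excluded.

GPUS_PER_RACK = 128
GPU_MAX_W = 1_400


def rack_gpu_power_kw(gpus: int = GPUS_PER_RACK, watts: int = GPU_MAX_W) -> float:
    """Combined GPU power draw for the rack, in kW."""
    return gpus * watts / 1000


def daily_energy_mwh(gpus: int = GPUS_PER_RACK, watts: int = GPU_MAX_W) -> float:
    """GPU energy over 24 hours at full draw, in MWh."""
    return rack_gpu_power_kw(gpus, watts) * 24 / 1000


print(f"{rack_gpu_power_kw():.1f} kW of GPU power per rack")
print(f"{daily_energy_mwh():.2f} MWh per day at full load")
```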

The chip uses the standard OAM module form factor and is compatible with UBB-platform servers, which speeds up deployment.

"The world of AI isn’t slowing down, and neither are we," said Vamsi Boppana, SVP, AI Group. "At AMD, we’re not just keeping pace, we’re setting the bar. Our customers demand real, deployable solutions that scale, and that’s exactly what we’re delivering with the AMD Instinct MI350 Series. With cutting-edge performance, massive memory bandwidth and flexible, open infrastructure, we’re empowering innovators across industries to go faster, scale smarter and build what’s next."

AMD plans to launch its Instinct MI400 Series in 2026.