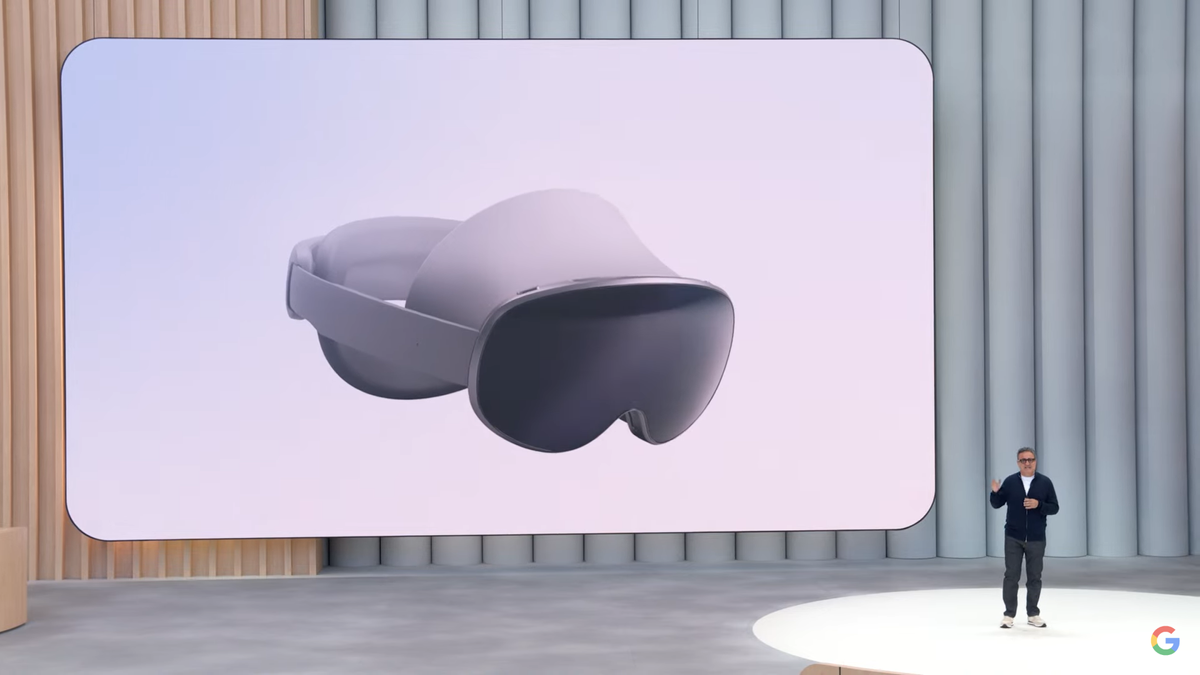

Google and Samsung’s Project Moohan Android XR headset isn’t entirely new. My colleague Lance Ulanoff already broke down what we knew about it back in December 2024, but until now no one at TechRadar had had the chance to try it.

That changed shortly after Sundar Pichai left the stage at Google I/O 2025, when I got a short but revealing seven-minute demo with the headset.

After my prescription lenses were scanned and matched with a compatible set from Google, those lenses were inserted into the Project Moohan headset and I was quickly immersed in a brief demonstration.

It wasn’t a full experience, more a quick taste of what Google’s Android XR platform is shaping up to be, and very much at the opposite end of the spectrum from the polished Apple Vision Pro demo I experienced at WWDC 2023.

Project Moohan feels similar to Vision Pro in many ways, though it’s clearly a little less premium. But one aspect stood out above everything else: the integration of Google Gemini.

“Hey Gemini, what tree am I looking at?”

Much as it does on an Android phone like the Pixel 9, Google’s AI assistant takes center stage on Project Moohan. The launcher includes two rows of core Google apps (Photos, Chrome, YouTube, Maps, Gmail and more), with a dedicated Gemini icon at the top.

To select icons, you pinch your thumb and index finger together, mimicking Apple Vision Pro’s main control. Once Gemini is activated, the familiar Gemini Live bar appears at the bottom. Thanks to the headset’s built-in cameras, Gemini can see what you see.

In the press lounge at Shoreline Amphitheatre, I looked at a nearby tree and asked, “Hey Gemini, what tree is this?” It quickly identified it as a type of sycamore and delivered a few facts. The whole interaction felt smooth and surprisingly natural.

You can also give Gemini access to what’s on your screen, turning it into a hands-free controller for the XR experience. I asked it to pull up a map of Asbury Park, New Jersey, and then launched into Immersive View, effectively dropping into a full 3D rendering similar to Google Earth. Looking down gave me a clear view of what was below, and pinching and dragging let me navigate around.

I jumped to a restaurant in Manhattan, asked Gemini to show interior photos, and followed up by requesting reviews. Gemini replied with relevant YouTube videos of the eatery. It was a convincing multi-step AI demo, and it worked impressively well.

That’s not to say everything was flawless. There were a few slowdowns, but Gemini was easily the highlight of the experience, and I walked away wanting more time with it.

Hardware impressions

Although I only wore the headset briefly, it was clear that while it shares some design cues with Vision Pro, Project Moohan is noticeably lighter, though not as advanced in feel.

After the lenses were inserted, I put the headset on visor-style from the front and pulled the back strap over my head. A dial at the back let me tighten the fit easily. Pressing the power button on top automatically adjusted the lenses to my eyes, with an internal mechanism that subtly repositioned them within seconds.

From there, I used the main control gesture, turning my hand over and tapping my thumb to my index finger, to bring up the launcher. This gesture seems to be the primary interface for now.

Google mentioned that eye tracking will be supported, but I didn’t get to try it during this demo. Instead, I used hand tracking to navigate, which, as someone familiar with Vision Pro, I found a bit unintuitive. I’m glad eye tracking is on the roadmap.

Google also showed a depth effect for YouTube videos that gave moving elements, such as running camels or grass blowing in the wind, a slight 3D feel. However, some visual layers (like mountain peaks floating strangely in front of clouds) didn’t quite work. The same effect was applied to still images in Google Photos, but these lacked emotional weight unless the images were personal.

Where Project Moohan stands out


The standout feature is the tight Gemini integration. It’s not just a tool for control; it’s an AI-driven lens on the world around you, and that makes the device feel genuinely useful and exciting.

Importantly, Project Moohan didn’t feel burdensome to wear. While neither Google nor Samsung has confirmed its weight (and yes, there’s a wired battery pack that I slipped into my coat pocket), it stayed comfortable during my short time with it.

There’s still a lot we need to learn about the final headset. Project Moohan is expected to launch by the end of 2025, but for now it’s still a prototype. Even so, if Google gets the pricing right and ensures a strong lineup of apps, games and content, this could be a compelling debut in the XR space.

Unlike Google’s earlier Android XR glasses prototype, Project Moohan feels far more tangible, with an actual launch window in sight.

I briefly tried those glasses too, but they felt more like Gemini-on-your-face in prototype form. Project Moohan feels like it has legs. Let’s just hope it lands at the right price point.