- Gemini Robotics is a new model

- It focuses on the physical world and will be used by robots

- It is visual, interactive, and general

Google Gemini is good at many things that happen on a screen, including generating text and images. The latest model, Gemini Robotics, is different: it is a vision-language-action model that moves generative AI into the physical world and could substantially speed up the humanoid robot revolution.

Gemini Robotics, which Google DeepMind unveiled on Wednesday, improves Gemini’s abilities in three key areas:

- Dexterity

- Interactivity

- Generalization

Each of these three aspects affects how well robots succeed in the workplace and in unfamiliar environments.

Generalization lets a robot take Gemini’s vast knowledge of the world and things, apply it to new situations, and perform tasks on which it has never been trained. In one video, researchers show a pair of robotic arms controlled by Gemini Robotics a table-top basketball game and ask it to “slam dunk the basketball.”

Although the robot had not seen the game before, it picked up the little orange ball and stuffed it through the plastic net.

Gemini Robotics also makes robots more interactive, able to respond not only to changing verbal instructions but also to unpredictable conditions.

In another video, researchers asked the robot to put grapes in a bowl with bananas, then moved the bowl around. The robot arm adjusted and still managed to put the grapes in the bowl.


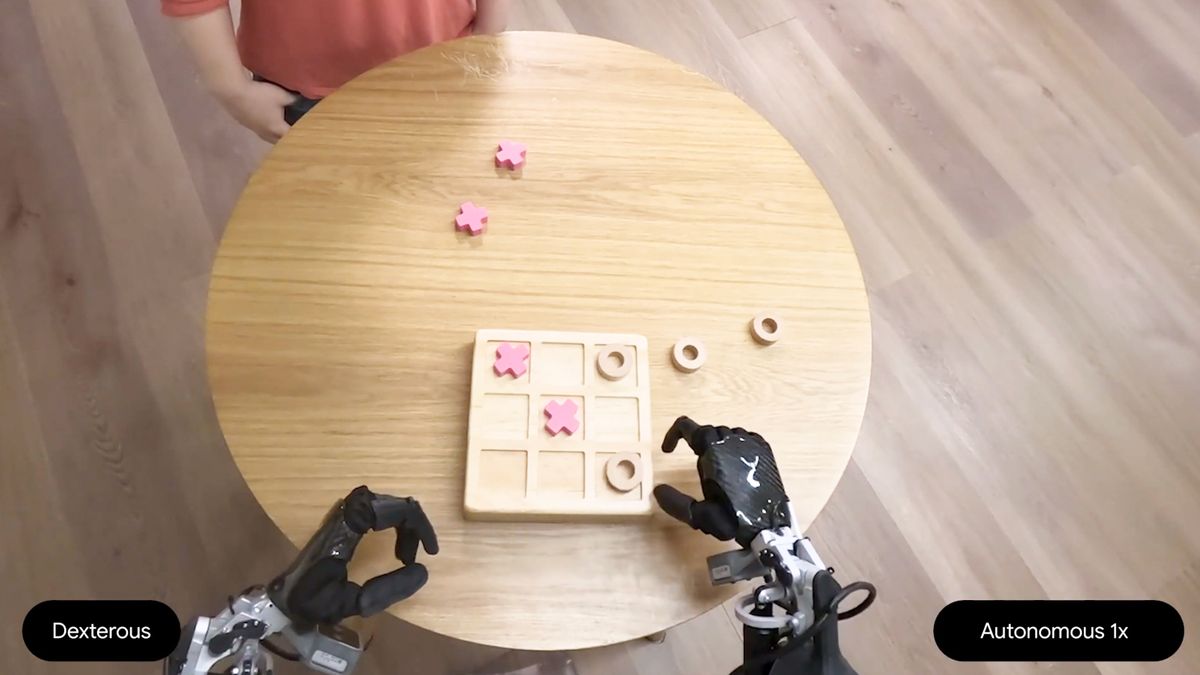

Google also demonstrated the robot’s dexterous skills, which let it tackle things like playing tic-tac-toe on a wooden board, erasing a blackboard, and folding paper into origami.

Instead of hours of training on each task, the robots respond to near-constant natural-language instructions and perform the tasks without guidance. It’s impressive to watch.

Of course, adding AI to robotics is not new.

Last year, OpenAI partnered with Figure AI to develop a humanoid robot that can work out tasks based on verbal instructions. As with Gemini Robotics, Figure 01’s visual language model works with OpenAI’s speech model to engage in back-and-forth conversations about tasks and changing priorities.

In the demo, the humanoid robot stands in front of some dishes and a drainer. It is asked what it sees, which it describes, but then the interlocutor changes tasks and asks for something to eat. Without missing a beat, the robot picks up an apple and hands it to him.

While most of what Google showed in the videos was disembodied robotic arms and hands working through a wide range of physical tasks, there are bigger plans. Google is partnering with Apptronik to add the new model to its Apollo humanoid robot.

Google will connect the dots with an additional piece of software, a new advanced visual language model called Gemini Robotics-ER (embodied reasoning).

Gemini Robotics-ER will improve robots’ spatial reasoning and should help robot developers connect the models to existing controllers.

Again, this should improve on-the-fly reasoning and allow robots to quickly figure out how to grasp and use unfamiliar objects. Google calls Gemini Robotics-ER an end-to-end solution and claims it “can perform all the steps necessary to control a robot right out of the box, including perception, state estimation, spatial understanding, planning and code generation.”

Google is providing the Gemini Robotics-ER model to several business- and research-focused robotics companies, including Boston Dynamics (makers of Atlas), Agile Robots, and Agility Robotics.

All in all, it is a potential boon for humanoid robotics developers. Since most of these robots are designed for factories or are still in the laboratory, it may be some time before you have a Gemini-enhanced robot in your home.