- Google DeepMind has improved and expanded access to its Music AI Sandbox

- The sandbox now includes the Lyria 2 model and real-time features to generate, extend, and edit music

- The music is watermarked with SynthID

Google DeepMind has brought some new and improved sounds to its Music AI Sandbox, which, despite the fact that sand is notoriously bad for musical instruments, is where Google hosts experimental tools for laying down tracks using AI models. The sandbox now offers the new Lyria 2 AI model and the Lyria RealTime AI music production tools.

Google has pitched the Music AI Sandbox as a way to spark ideas, generate soundscapes, and maybe help you finally finish the half-written verse you’ve been avoiding all year. The sandbox is mainly aimed at professional musicians and producers, and access has been pretty limited since its debut in 2023. But Google is now opening the platform to many more people in music production, including those who want to create soundtracks for movies and games.

The new Lyria 2 AI music model is the rhythm section underlying the new sandbox. The model is trained to produce high-fidelity audio output, with detailed and intricate compositions across any genre, from shoegaze to synth pop to whatever weird lo-fi banjo-core hybrid you’re cooking up in your bedroom studio.

The Lyria RealTime feature puts the AI’s creations in a virtual studio that you can jam with. You can sit at your keyboard while Lyria RealTime helps you mix ambient house beats with classic funk, performing and fine-tuning its sound on the fly.

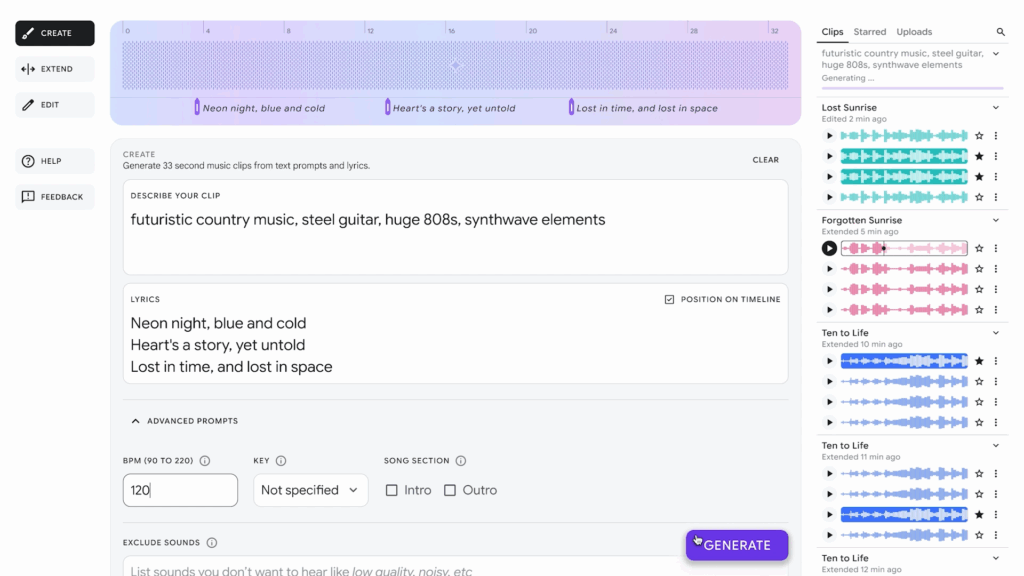

Virtual Music Studio

The sandbox offers three main tools for producing tunes. Create, seen above, lets you describe in words the kind of sound you are aiming for. The AI then whips up music samples you can use as jumping-off points. If you’ve already got a rough idea down but can’t figure out what happens after the second chorus, you can upload what you have and let the Extend feature come up with ways to continue the piece in the same style.

The third feature is called Edit, which, as the name suggests, remakes the music in a new style. You can ask for your tune to be reimagined in another mood or genre, either by adjusting the digital control board or via text prompts. For example, you can ask for something as basic as “turn this into a ballad,” something more complex like “make this trickier, but still danceable,” or see how strange you can get by asking the AI to “score this EDM drop as if it’s all just an oboe section.” You can hear an example below created by Isabella Kensington.


AI Singalong

Everything generated by Lyria 2 and RealTime is watermarked using Google’s SynthID technology. This means that the AI-generated tracks can be identified, even if someone tries to pass them off as the next lost Frank Ocean demo. It is a smart move in an industry that is already primed for heated debates about what counts as “real” music and what doesn’t.

These philosophical questions also determine where a lot of money ends up, so there is more at stake than abstract discussions about how to define creativity. But as with AI tools for producing text, images, and video, this is not the death knell for traditional songwriting. Nor is it a magic source for the next chart-topping hit. AI could make a half-baked hum fall flat if it is poorly used. Fortunately, lots of musical talents understand what AI can do and what it can’t, as Sidecar Tommy demonstrates below.
