- YouTube demonetized two channels for sharing AI-made fake trailers

- Some Hollywood studios secretly claimed ad revenue from the misleading trailers

- The crackdown comes amid new contracts and laws limiting unauthorized AI replicas

If you’ve ever visited YouTube, clicked a trailer for the next superhero movie, and thought it seemed too good to be true, you may well have been right. Wishful thinking, clever editing, and a scoop of AI fakery produced clips that lured billions of clicks and earned lots of cash through advertising. The shocking part is that much of the money apparently found its way to the very studios you might expect to shut down such unauthorized use of their intellectual property, at least according to information recently uncovered by Deadline.

That side hustle may now be over, with YouTube removing two of the largest homes for these AI-laced fake trailers, Screen Culture and KH Studio, from its partner program. That means no advertising revenue for them or for the studios that allegedly got a piece of the action.

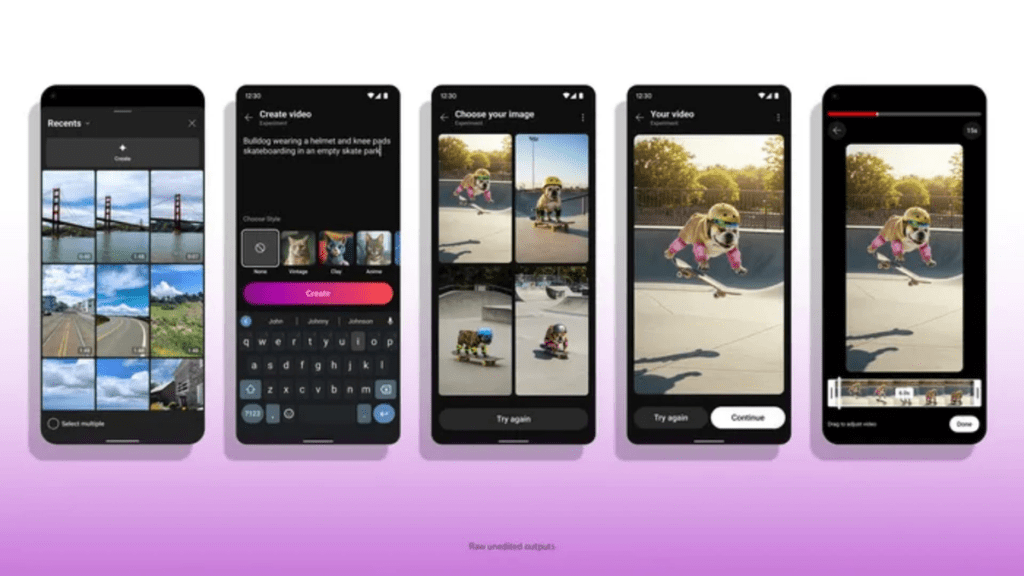

Screen Culture has made many popular trailers full of AI-generated shots for upcoming films like The Fantastic Four: First Steps and Superman. KH Studio is better known for its imaginary casting, like Leonardo DiCaprio in the next Squid Game or Henry Cavill as the next James Bond. You would be forgiven for assuming that the plotlines, characters, and visuals on screen teased details about the films, but they were produced far from any real film development.

The fakes were good enough to sometimes rank above the real trailers in searches, and those clicks could likely get YouTube’s recommendation algorithm to highlight the forgery over the real deal. That means a lot of cash for a monetized video. It is likely why, according to Deadline, studios made arrangements with YouTube to redirect ad revenue from these fake trailers to their own accounts.

Trailer tricks

Still, YouTube has its own rules. The monetization arrangement may have been okay in theory, but the channels broke other rules. To earn ad revenue, a creator cannot just remix the content of others; they have to add original elements. A reviewer may show a short clip of a movie to comment on it, but most of the video is the review, not the movie. You also can’t copy the work of others, mislead viewers, or create content for the “sole purpose of getting views.”

Screen Culture and KH Studio can appeal the demonetization, but it may be a long shot. YouTube’s decision reflects a major ongoing debate about AI in the entertainment industry. The SAG-AFTRA strike highlighted actors’ demands for boundaries and control over AI replicas of people in film and television. The final agreement reached after the long strike laid out new rules requiring a performer’s consent before any studio can use AI to emulate their likeness.

In case that was not clear enough, California’s legislators passed two bills restricting the use of AI to recreate a performer’s voice or likeness without their consent, even posthumously. That makes it harder for studios or rogue creators to conjure up digital versions of famous faces just to juice a trailer, real or otherwise.

YouTube is in something of a bind, as fan-made trailers have long been a popular kind of content. However, the use of AI can make a fake trailer seem good enough to fool people, even if only accidentally. And YouTube doesn’t want to encourage the practice by making money from it. For the time being, the message from YouTube is clear: you can imagine a world where Cavill is Bond or Galactus appears in Fantastic Four, but you can’t monetize that imagination if it is built only around AI.