Anthropic just released a new model called Claude 3.7 Sonnet, and although I’m always interested in the latest AI capabilities, it was the new “extended thinking” mode that really drew my eye. It reminded me of how OpenAI first debuted its o1 model for ChatGPT. It offered a way to access o1 without leaving a chat window that was using the GPT-4o model. You could type “/reason” and the AI chatbot would use o1 instead. It’s superfluous now, even if it still works in the app. Either way, the deeper, more structured reasoning that both promise made me want to see how they would do against each other.

Claude 3.7’s extended thinking mode is designed to be a hybrid reasoning tool, letting users switch between quick, conversational responses and in-depth, step-by-step problem solving. It takes time to analyze your prompt before delivering its answer, which makes it good for math, coding and logic. You can even fine-tune the balance between speed and depth by setting a limit on how long it thinks about its answer. Anthropic positions this as a way of making AI more useful for real-world applications that require layered, methodical problem solving, as opposed to just surface-level answers.

Access to Claude 3.7 requires a subscription to Claude Pro, so I decided to use the demonstration in the video below as my test instead. To challenge the extended thinking mode, Anthropic asked the AI to analyze and explain the popular, vintage probability puzzle known as the Monty Hall problem. It is a deceptively difficult question that stumps many people, including those who consider themselves good at math.

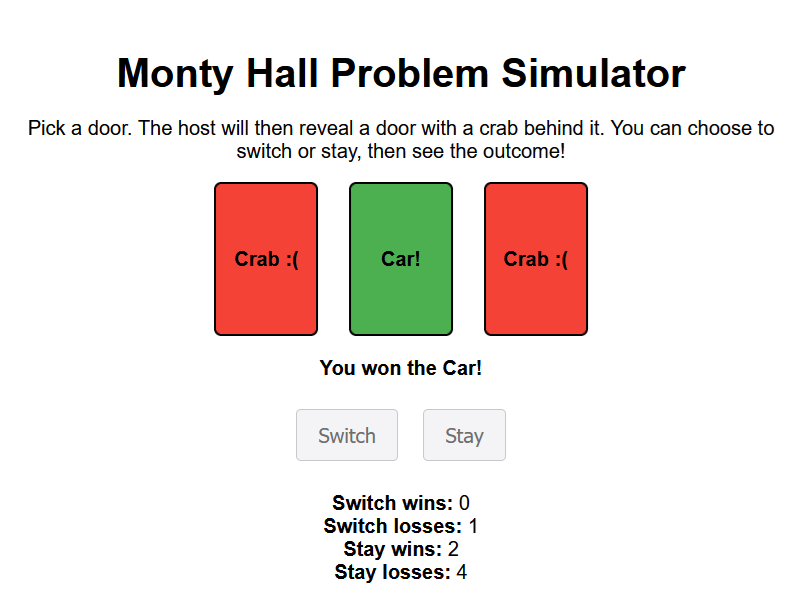

The setup is simple: You are on a game show and are asked to choose one of three doors. Behind one is a car; behind the others, goats. On a whim, Anthropic decided to go with crabs instead of goats, but the principle is the same. Once you have made your choice, the host, who knows what is behind each door, opens one of the remaining two to reveal a goat (or crab). Now you have a choice: stick with your original pick or switch to the last unopened door. Most people assume that it doesn’t matter, but switching gives you a 2/3 chance of winning, while sticking with your first choice leaves you with just a 1/3 probability. That is because your first pick is right only one time in three, and whenever it is wrong, the host’s reveal leaves the car behind the one remaining door.
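For anyone who wants to check the 2/3 figure themselves, here is a minimal Python sketch. It is not the code Claude or ChatGPT produced; the function names (`exact_win_probability`, `simulate`, `play`) are hypothetical, chosen for illustration. It assumes the standard rules: the host always opens a door hiding a crab, and picks one at random when both unchosen doors hide crabs. It counts the outcomes exactly and also plays many rounds, the same “repeated attempts” idea Claude used in its explanation.

```python
import random
from fractions import Fraction

DOORS = (0, 1, 2)


def host_opens(car: int, pick: int, rng: random.Random) -> int:
    """Assumed rule: the host opens a door that is neither the player's pick nor the car."""
    options = [d for d in DOORS if d != pick and d != car]
    return rng.choice(options)  # random choice when two crab doors are available


def play(switch: bool, rng: random.Random) -> bool:
    """Play one round; return True if the player ends up with the car."""
    car = rng.choice(DOORS)
    pick = rng.choice(DOORS)
    opened = host_opens(car, pick, rng)
    if switch:
        # Move to the one door that is neither the original pick nor the opened door.
        pick = next(d for d in DOORS if d != pick and d != opened)
    return pick == car


def exact_win_probability(switch: bool) -> Fraction:
    """Count wins over all 9 equally likely (car, first pick) pairs.

    Sticking wins only when the first pick was right; switching wins exactly
    when it was wrong, no matter which crab door the host opens.
    """
    wins = 0
    for car in DOORS:
        for pick in DOORS:
            first_pick_right = car == pick
            wins += (not first_pick_right) if switch else first_pick_right
    return Fraction(wins, len(DOORS) ** 2)


def simulate(switch: bool, trials: int = 100_000, seed: int = 0) -> float:
    """Estimate the win rate by playing many rounds with a seeded RNG."""
    rng = random.Random(seed)
    return sum(play(switch, rng) for _ in range(trials)) / trials


if __name__ == "__main__":
    for switch in (False, True):
        label = "switch" if switch else "stick "
        print(label, "exact:", exact_win_probability(switch),
              "simulated:", round(simulate(switch), 3))
```

The exact count gives 1/3 for sticking and 2/3 for switching, and the simulated win rates land close to those values.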

Crabby selection


With extended thinking enabled, Claude 3.7 took a measured, almost academic approach to explaining the problem. Instead of just stating the correct answer, it carefully laid out the underlying logic in several steps and emphasized why the probabilities change after the host reveals a crab. Nor did it explain things in dry math alone. Claude ran through hypothetical scenarios and demonstrated how the probabilities played out over repeated attempts, making it much easier to understand why switching is always the better move. The response was not rushed; it felt like having a professor walk through it in a slow, deliberate way, ensuring that I really understood why the usual intuition was wrong.

ChatGPT o1 offered just as thorough a breakdown and explained the problem well. In fact, it explained it in several forms and styles. Along with the basic probability, it also went through game theory, the narrative perspective, the psychological experience and even an economic breakdown. If anything, it was a little overwhelming.

Gameplay

However, that’s not all Claude’s extended thinking could do. As you can see in the video, Claude was even able to turn the Monty Hall problem into a game you could play right in the chat window. Trying the same prompt with ChatGPT o1 didn’t quite produce the same result. Instead, ChatGPT wrote an HTML script for a simulation of the problem that I could save and open in my browser. It worked, as you can see below, but took a few extra steps.

While there are almost certainly small differences in quality, depending on what kind of code or math you are working on, both Claude’s extended thinking and ChatGPT’s o1 model take solid, analytical approaches to logical problems. I can see the benefit of being able to adjust the time and depth of reasoning, as Claude allows. That said, unless you are really pressed for time or require an exceptionally heavy analysis, ChatGPT does not take too long and produces a great deal of content from its deliberation.

The ability to turn the problem into a simulation right in the chat is more remarkable. It makes Claude feel more flexible and powerful, although the actual simulation probably uses code very similar to the HTML written by ChatGPT.