Over the past few years, ChatGPT has become the default name for AI chatbots in the US and Europe, despite plenty of viable rivals angling for a larger share of the market. That's part of what made the explosive rise of China-based AI chatbot DeepSeek feel so seismic.

DeepSeek's rapid ascent has attracted enormous attention and usage, though not without controversy. Its sweeping collection of user data for storage on Chinese servers is just one prominent example.

I decided to put these two AI heavyweights, ChatGPT and DeepSeek, through their paces in an especially valuable arena: combining their conversational skills with online search.

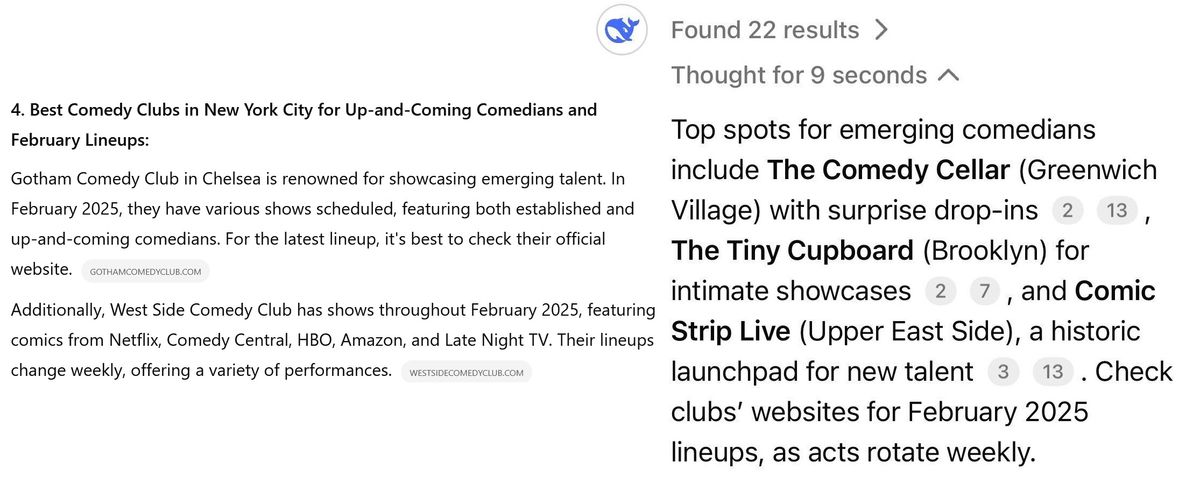

I devised four questions covering everything from sports news and consumer advice to the best local spots for cocktails and comedy. I wanted to see how the AI assistants would perform, so I mixed specificity with vagueness in the details. I used DeepSeek's R1 model and ChatGPT's GPT-4o model to answer the questions. While R1 is comparable to OpenAI's newer o1 model for ChatGPT, o1 can't search online for answers for now. You can see the questions and the AI responses below.

One at a time

I immediately discovered that while ChatGPT was happy to answer several questions in a single prompt, DeepSeek would only search for information on the first question and ignore the rest, no matter how I worded the prompt. That was an early point against it. While a back-and-forth conversational approach is fine in many cases, sometimes you need to ask the chatbot a lot of questions at once or give it multiple items to consider. You can see how DeepSeek responded to an early attempt at several questions in a single prompt below.

Counting words

Even when I split them into individual questions, my prompts for DeepSeek required some extra work to define how much information I wanted to receive. Regardless of the kind of question I sent, DeepSeek would almost always give me too much information, and much of it was extraneous. Worse, sometimes the very long answer was just filler, essentially telling me to look things up on my own. ChatGPT isn't immune to similar behavior, but it didn't happen at all during this test.

And it wasn't just my own preference: ChatGPT showed the same restraint when I used it without logging in. I felt I had to handicap the test with a 65-word limit to make it worthwhile at all. With these constraints in place, here are the questions and the AI responses. ChatGPT's response is on the left and DeepSeek's response is on the right.

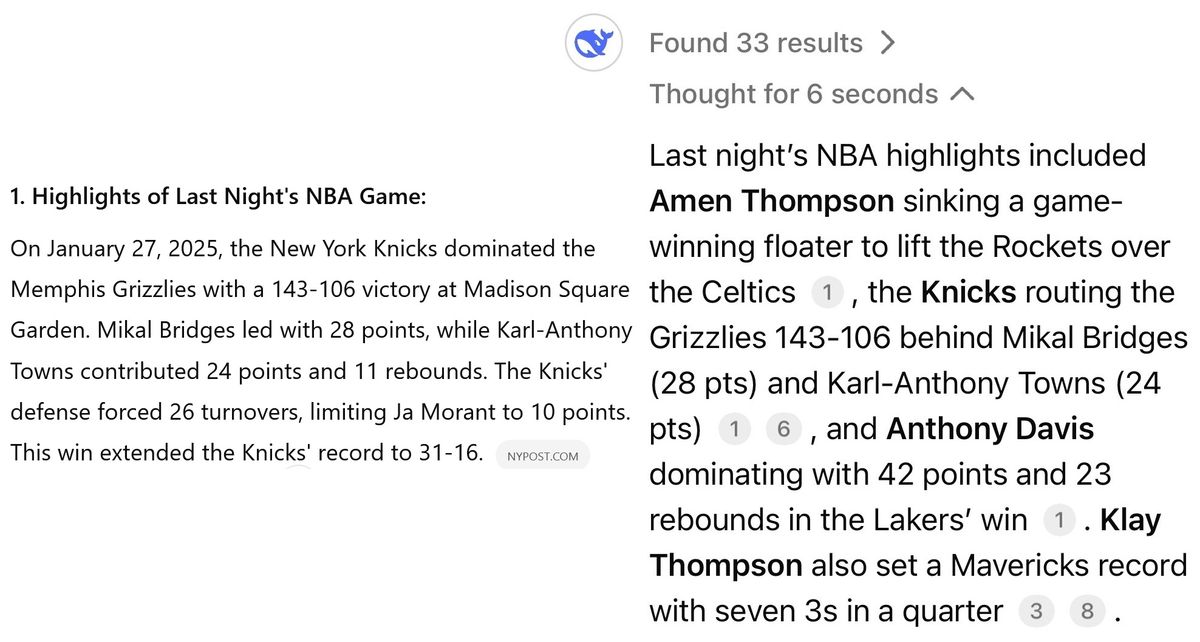

1. What were the highlights of yesterday’s NBA game and who won?

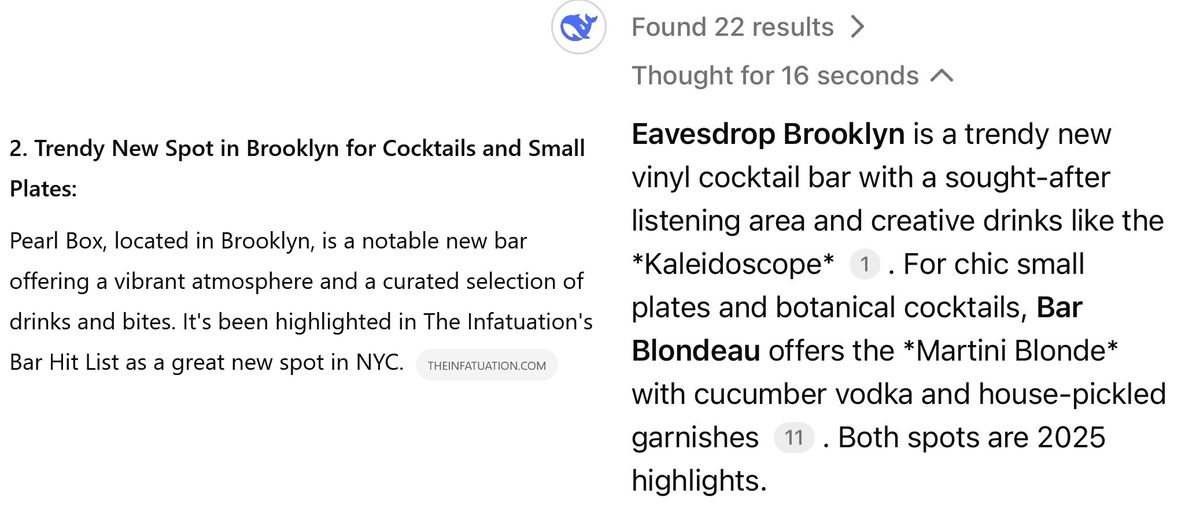

2. What is a trendy new place in Brooklyn for cocktails and small plates?

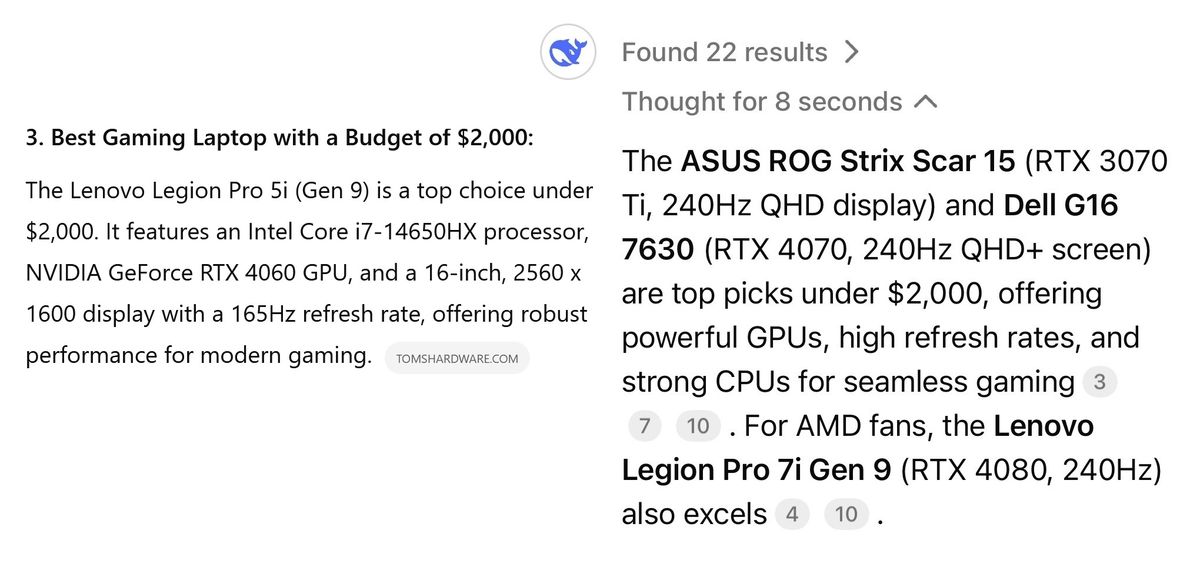

3. Which laptop is best for gaming on a budget of $2,000?

DeepSeek gets lost

With those caveats about what it took to make the test possible, it's fair to say both chatbots performed pretty well. DeepSeek had some solid answers thanks to a far more thorough search effort that drew on more than 30 sources for each question. The cocktail bar recommendation was especially strong, and the AI was proactive enough to suggest a drink to order. The basketball response was also more extensive, although ChatGPT's decision to stay focused on a single game, as signaled by the singular "game" in the question, arguably showed that it was paying closer attention.

It was in the laptop and comedy club recommendations that DeepSeek showed its weaknesses. Both felt less like conversational answers and more like a rundown of the top Google search results. To be fair, ChatGPT wasn't much better on these two answers, but its shortcomings felt less glaring, especially when looking at all the parentheses in DeepSeek's laptop response.

I understand why DeepSeek has its fans. It's free, good at surfacing the latest information, and a solid option for users. I just feel like ChatGPT cuts to the heart of what I'm asking, even when I don't spell it out. And while no tech company is a paragon of consumer privacy, DeepSeek's terms and conditions somehow make other AI chatbots seem downright polite when it comes to the sheer amount of information you have to agree to share, down to the rhythm of your keystrokes as you type your questions. The name DeepSeek almost sounds like a joke about how deeply it seeks out information about you.

Plus, ChatGPT was just plain faster, whether I used DeepSeek's R1 model or its less powerful siblings. And while this test focused on search, I can't ignore DeepSeek's many other limitations, such as its lack of persistent memory or image generation.

For me, ChatGPT remains the winner when it comes to choosing an AI chatbot for search. Some of that may simply be a bias toward the familiar, but the fact that ChatGPT gave me good answers from a single prompt is a hard-to-resist killer feature. That may be especially true if and when the o1 model and the upcoming o3 model get internet access. DeepSeek can find a lot of information, but if I were stuck with it, I'd be lost.