Alexandru Costin is Vice President, Generative AI and Sensei at Adobe.

There was no getting away from Firefly at this year’s Adobe Max London. Already embedded across the Creative Cloud suite, the AI image and video generator has been massively upgraded with new tools and features.

Ahead of the event, we sat down with Alexandru Costin, Vice President, Generative AI and Sensei at Adobe, to explore what’s new with Firefly, why stories matter when using the best AI tools, and how professionals can use it to boost creativity everywhere.

- What can users expect from AI at Adobe Max?

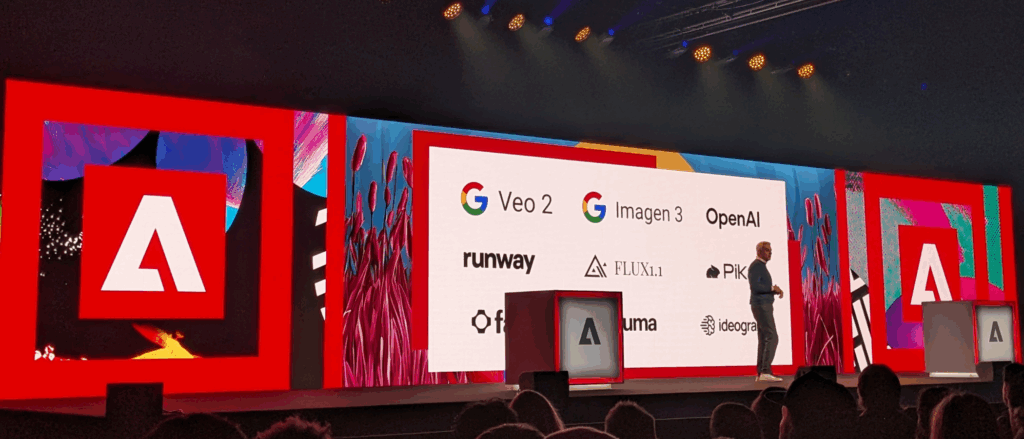

At Max we have the next generation of our image model, in two versions. We have a vector model and we have the video model. So there is a lot of progress in the models from Adobe: commercially safe, high quality, amazing human rendering, a lot of control, a good style engine, and so on. We are also introducing third-party model integrations.

Our customers told us that they want to stay in our tools and in our workflows. They still use other models for ideation or for their different personalities. So we are announcing OpenAI’s GPT image integration and Google’s Imagen and Veo 2 in Firefly, and Flux integration in Firefly Boards.

The third major announcement is Firefly Boards, a new capability in the Firefly web application. We look at it as an all-in-one platform for next-generation creatives to ideate, create, and produce production content. Firefly Boards is an infinite canvas that enables team collaboration, real-time collaboration, and commenting, but it also has deep gen AI features built in, with all these first-party and third-party models and new capabilities for remixing images.

- How easy is it to deliver something like that?

It’s not easy. We have worked on the project concept for about a year. In fact, we have been working on the underlying technology for many years: real-time collaboration with deep integration with storage, and innovation in gen AI user experiences, remixing, and auto-describing images to create prompts for you. There is a lot of deep technology that went into it. It looks like magic and is very easy [to use]. We hope it’s that easy. Our goal is to build a layer that hides the complexity, so for customers it’s like magic and everything just works.

- What is your favorite new feature?

My favorite feature is the integration between image, video and the rest of the Adobe products. We are trying to build workflows where customers who have an intention in mind, and want to paint the picture that is in their heads, can use these tools in a truly connected way without having to jump through so many hoops to tell their story. Firefly Image 4 offers fantastic photorealism, human rendering quality, and prompt understanding. You can iterate quickly.

With Image 4 Ultra, which is our premium model, you can render your image with additional detail, then take it into the Firefly video model as a keyframe and create a video from that image. Then you can take that video into Adobe Express and turn it into an animated banner, add text, add fonts. In Creative Cloud we have a lot of capabilities that already exist. We are bringing gen AI into these workflows, either in Firefly on the web or directly as an API integration.

But for me, I think the magic is having all of this available in an easy way. The Photoshop team is also working on an agentic interface. They call it the new Actions panel. You type in what you want. We have 1,000 high-quality actions that we have curated for you. There are all these tools in Photoshop that are sometimes difficult to discover if you are not an expert, but we want to surface them and apply them for you. You learn along the way, but you don’t have to know everything before you start. Not only do we help you reach your goal, we also teach you the ins and outs of Photoshop as you go.

- That must be one of the biggest barriers to entry for many users

That’s right. It is, to some extent, too powerful. It has so many controls that it can be intimidating, but with the new Actions panel we will take away a big part of that barrier to entry.

- How important is lowering these barriers to entry to you?

Everyone will benefit from this technology in different ways. For creative professionals, it will basically remove some of the tedium so they can focus on creativity. And with things like Firefly Boards, they will be able to work with teams and clients much better. The client can upload some stylistic ideas to Boards, and then you can take them and integrate them very quickly into your professional workflow.

For consumers, people who want to spend seconds creating something, with Firefly you just type in the prompt and we do it for you. It is a great capability.

In the middle there are people who are still learning in their careers: aspiring creative professionals, next-generation creatives. We want to give them both gen AI capabilities and a bridge to the existing pixel-perfect tools that we have at Adobe, because we think a mix of these two worlds is the best combination for next-generation creatives to be armed with.

- Where can further improvements be made in this area to make it more accessible?

For me, a great opportunity is better understanding of humans, such as better prompt understanding and an agent that acts as a creative partner to bounce ideas off. Another thing we are announcing is the [upcoming] Firefly mobile app. This is a companion app that can use many of the Firefly app’s capabilities, generate text, generate video, etc. But because it is on mobile, you also have access to the camera and a microphone, so there are many new ways to make these interactions easier. So we are looking at that. We think next-generation creatives are a big target market for us because we want to give them the tools of the trade.

- What is the motivation behind these new additions in Firefly?

For us, the customers are the reason we get up in the morning every day. They tell us what they need, and they told us they want more quality, better rendering of people, more control, and better stylization. That’s what’s behind the updates to the image model. We just want to make the models more useful in more workflows for actual production use cases. Because our model is uniquely positioned to be safe for commercial use, we want customers to use it everywhere.

Video is also growing, and much of our customer base does not know how to use video products. So making video creation more accessible is another great accelerant for creativity. We want to offer a larger population of people the tools to use video and start reaching their goals there. At the same time, of course, in products like Premiere Pro we continue to integrate deeper, more advanced features. A few weeks ago at NAB, for example, we launched Generative Extend. It won one of the awards. Generative Extend works in 4K and allows professional videographers to basically extend clips so they don’t have to reshoot.

What motivates us is helping our customers tell stories, better stories, more diverse stories, and succeed in their careers.

- When everyone uses AI, how do artists and businesses differentiate themselves from rivals?

I think through human creativity and technique. How do they differentiate themselves today? They all use Photoshop. They find ways to differentiate because gen AI is, in fact, a tool designed, at least from Adobe’s perspective, to serve the creative community, and we want to give them a more powerful tool to help them hone their craft.

They describe it as going from being the person doing the editing to being a creative director. All of our customers can become directors of these gen AI tools to help them tell better stories, tell stories faster, etc. So we think the differentiation will still be in human creativity using the tool. And we see so much innovation. We see people using these technologies in ways we haven’t even thought of, and mixing them in new ways, which is always very exciting. Because that’s how you differentiate. And we think there will always be many ways to express creativity.

We believe that creativity comes in different forms and that there are different tools that creative people will use and mix together to tell better stories and change culture.