- Microsoft Finds Whisper Leak Shows Privacy Flaws in Encrypted AI Systems

- Encrypted AI chats can still leak clues about what users are discussing

- Attackers can track conversation topics using packet size and timing

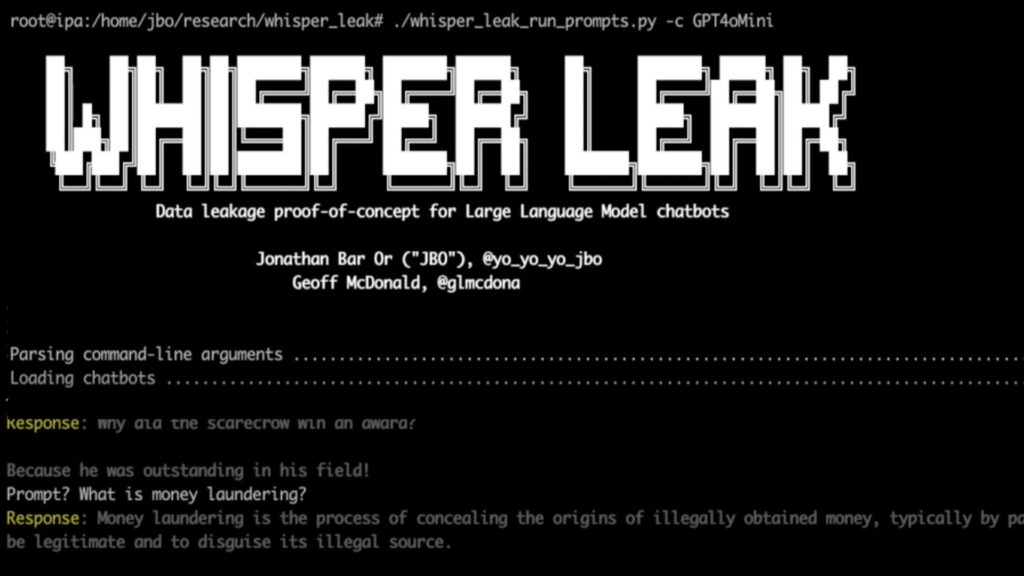

Microsoft has revealed a new type of cyberattack it has dubbed “Whisper Leak” that is capable of revealing the topics users discuss with AI chatbots, even when conversations are fully encrypted.

The company’s research suggests that attackers can study the size and timing of encrypted packets exchanged between a user and a large language model to infer what is being discussed.

“If a government agency or ISP monitored traffic to a popular AI chatbot, they could reliably identify users asking questions about specific sensitive topics,” Microsoft said.

Whisper Leak attack

This means that “encrypted” does not necessarily mean invisible: the vulnerability lies in how LLMs stream their responses.

These models do not wait for a complete response, but transmit data incrementally, creating small patterns that the attackers can analyze.

Over time, as they collect more samples, these patterns become clearer, allowing more accurate guesses about the nature of conversations.

This technique doesn’t decrypt messages directly, but reveals enough metadata to make educated guesses, which is probably just as worrying.
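To make the size leak concrete, here is a minimal Python sketch. It is a toy model rather than Microsoft's actual method: the fixed `RECORD_OVERHEAD` value and the one-token-per-record assumption are hypothetical simplifications, and real traffic adds noise from framing, batching and network jitter.

```python
# Toy model: encryption hides the bytes but, in this sketch, not their count.
# RECORD_OVERHEAD is an assumed constant; real overhead depends on the
# protocol and cipher suite in use.
RECORD_OVERHEAD = 22


def streamed_record_sizes(tokens):
    """Sizes an observer would see if each token were sent as its own record."""
    return [len(tok.encode("utf-8")) + RECORD_OVERHEAD for tok in tokens]


def batched_record_size(tokens):
    """Size an observer would see if the whole reply were sent at once."""
    return len("".join(tokens).encode("utf-8")) + RECORD_OVERHEAD


tokens = ["The", " capital", " of", " France", " is", " Paris"]

# Streaming: one size per token, so the token-length sequence leaks.
observed = streamed_record_sizes(tokens)
recovered_lengths = [size - RECORD_OVERHEAD for size in observed]
print(recovered_lengths)

# Non-streaming: only a single total is visible.
print(batched_record_size(tokens))
```

In this simplified model the streamed reply exposes a full sequence of token lengths, while the batched reply exposes one number. That sequence, combined with timing, is the kind of metadata the article describes attackers analyzing.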

After Microsoft’s disclosure, OpenAI, Mistral and xAI all said they were moving quickly to implement remedies.

One solution adds a “random sequence of variable-length text” to each response, disrupting the consistency of token sizes that attackers rely on.
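A rough Python sketch of that padding idea follows. The function names, the `obfuscation` field and the `max_pad` limit are illustrative assumptions, not any provider's documented implementation.

```python
import json
import secrets
import string


def pad_chunk(token, max_pad=32):
    """Attach a random-length filler string to a streamed chunk.

    The 'obfuscation' field name and max_pad default are hypothetical.
    """
    pad_len = secrets.randbelow(max_pad + 1)  # 0..max_pad inclusive
    filler = "".join(secrets.choice(string.ascii_letters) for _ in range(pad_len))
    return {"content": token, "obfuscation": filler}


def wire_size(chunk):
    """Byte length of the serialized chunk before encryption."""
    return len(json.dumps(chunk).encode("utf-8"))


# The same token now yields different sizes on different sends,
# so an observer can no longer read the token length from the record size.
for _ in range(3):
    print(wire_size(pad_chunk(" Paris")))
```

The client simply ignores the filler field, so the visible text is unchanged. Padding costs extra bandwidth and blurs the size signal without touching timing, so it makes inference harder rather than impossible.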

In the meantime, Microsoft advises users to avoid sensitive discussions on public Wi-Fi and other untrusted networks, to use a VPN, or to stick to non-streaming models from LLM providers.

The findings come alongside new tests showing that several open-weight LLMs remain vulnerable to manipulation, particularly during multi-turn conversations.

Researchers from Cisco AI Defense found that even models built by large companies struggle to maintain security controls when the dialogue becomes complex.

Some models, they said, showed “a systemic inability … to maintain guardrails across extended interactions.”

In 2024, reports surfaced that an AI chatbot leaked over 300,000 files containing personally identifiable information and hundreds of LLM servers were left exposed, raising questions about how secure AI chat platforms really are.

Traditional defenses, such as antivirus software or firewall protection, cannot detect or block side-channel leaks like Whisper Leak. These discoveries show that AI tools can inadvertently widen exposure to surveillance and data inference.
