- AMD ships Pollara 400 AI NIC for open, high-speed AI networking

- Supports the Ultra Ethernet standard with RDMA and RCCL for efficient communication

- Future Vulcano 800G AI NIC targeted for 2026

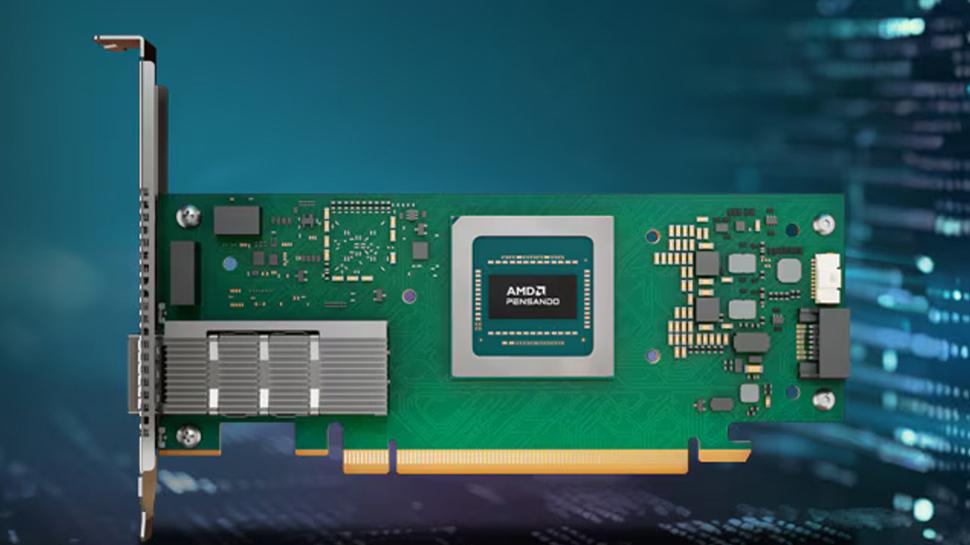

AMD has started shipping the Pensando Pollara 400 AI NIC, part of the company’s push for open, high-speed data center networking.

The card is designed for PCIe Gen5 systems and supports the Ultra Ethernet Consortium (UEC) standard, which aims to adapt Ethernet for AI and HPC at scale.

The card offers RDMA support and is optimized for scale-out collective communication using RCCL, AMD’s alternative to NCCL.

Vulcano 800G AI NIC targets a 2026 launch

AMD says Pollara delivers about 10% better RDMA performance than Nvidia’s ConnectX-7 and about 20% better than Broadcom’s Thor2. In GPU-heavy clusters, these gains help reduce idle time and improve workload efficiency.

The NIC uses a custom processor with support for flexible transport protocols, load balancing and failover routing. It can reroute traffic during congestion and maintain GPU connectivity during faults.

The card has a half-height, half-length design and supports PCIe Gen5 x16, offering multiple port configurations including 1x400G, 2x200G and 4x100G. It supports up to 400 Gbps of bandwidth and integrates monitoring tools to improve observability and reliability at the cluster level.
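As a rough sanity check on those figures, the short Python sketch below sums each quoted port configuration and compares the total with the raw bandwidth of a PCIe Gen5 x16 link. The port list comes from the paragraph above; the PCIe numbers (32 GT/s per lane, 128b/130b encoding) are the standard Gen5 signalling figures rather than anything AMD states here, and the function names are purely illustrative.

```python
# Port configurations quoted in the article: name -> (port count, Gb/s per port).
PORT_CONFIGS = {
    "1x400G": (1, 400),
    "2x200G": (2, 200),
    "4x100G": (4, 100),
}

# Assumption: standard PCIe Gen5 signalling, 32 GT/s per lane, 128b/130b encoding.
PCIE_GEN5_GT_PER_LANE = 32
PCIE_ENCODING_EFFICIENCY = 128 / 130


def aggregate_gbps(ports: int, gbps_per_port: int) -> int:
    """Total Ethernet bandwidth of one port configuration, in Gb/s."""
    return ports * gbps_per_port


def pcie_gen5_gbps(lanes: int) -> float:
    """Raw per-direction PCIe Gen5 bandwidth in Gb/s, before protocol overhead."""
    return lanes * PCIE_GEN5_GT_PER_LANE * PCIE_ENCODING_EFFICIENCY


if __name__ == "__main__":
    host_link = pcie_gen5_gbps(16)
    for name, (ports, speed) in PORT_CONFIGS.items():
        total = aggregate_gbps(ports, speed)
        print(f"{name}: {total} Gb/s Ethernet vs {host_link:.1f} Gb/s PCIe Gen5 x16")
```

Every configuration adds up to the same 400 Gb/s, which sits below the roughly 504 Gb/s a Gen5 x16 slot can carry in each direction before protocol overhead, so under these assumptions the host link is not the limiting factor.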

AMD claims performance gains of up to 6x in large deployments, especially when scaling to hundreds of thousands of processors.

For individual workloads, the company reports up to 15% faster AI job performance and up to 10% better network reliability through features such as fast failover, selective retransmission and congestion management.

With the UEC 1.0 specification now complete, the company is targeting hyperscalers. Oracle Cloud will be among the first to deploy the technology.

Looking ahead to 2026, AMD says it intends to launch the Pensando Vulcano 800G AI NIC for PCIe Gen6 systems (Pollara and Vulcano are the names of two volcanoes in Italy).

This NIC will support both Ultra Ethernet and UALink to enable scale-out and scale-up networking for large AI workloads. Vulcano is part of AMD’s Helios rack-scale architecture, planned for 2026.

AMD positions Vulcano as an open, multi-vendor alternative to Nvidia’s ConnectX-8. Its success may depend on how quickly the wider ecosystem can adopt and support the new networking standards.

Writing about the two network cards, Patrick Kennedy at ServeTheHome observed, “At the end of the day, if you want to play in 2026 AI clusters, you need not only AI chips, but also the ability to scale up and scale out. AMD having a NIC may sound a lot like Nvidia’s playbook because it is necessary. On the other hand, supporting open standards is very different from what Nvidia is doing by leaning into being multi-vendor.”