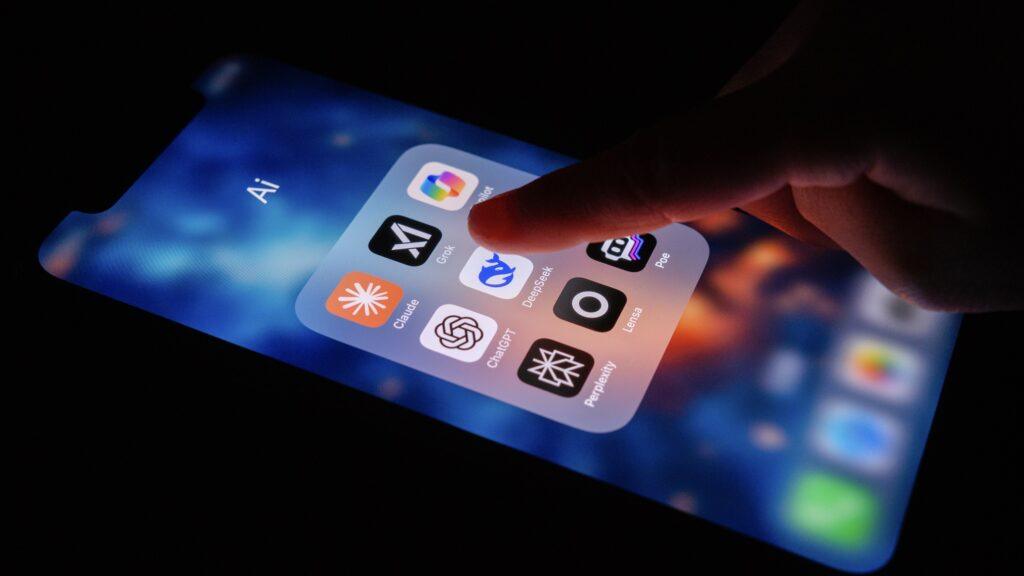

- A newly introduced bill could see AI models from ‘adversarial nations’ banned

- Models like DeepSeek would be prohibited

- Many private companies have already banned the model

Artificial intelligence models built in China, Iran, Russia or the Democratic People’s Republic of Korea could soon be banned from use in government agencies under the newly introduced ‘No Adversarial AI Act’.

Legislators introduced the bill in both the House and the Senate. Its sponsors include Rep. John Moolenaar of Michigan, a Republican and chair of the Select Committee on the Chinese Communist Party (CCP), and Democratic Rep. Raja Krishnamoorthi of Illinois, the committee's ranking member, Cybernews reports.

“We are in a new cold war – and AI is the strategic technology at the center. CCP is not innovating – it steals, scales and undermines,” Moolenaar argues.

DeepSeek Rivals

The Chinese DeepSeek model rose rapidly in popularity as a rival to established Western AI models, reportedly costing a fraction as much to build while achieving impressively similar results.

However, DeepSeek, like all AI models, comes with privacy concerns, and legislators claim it is putting data at risk, especially if users enter information related to their work in government organizations.

“From IP theft and chip smuggling to the embedding of AI in surveillance and military platforms, the Chinese Communist Party runs this technology. We need to draw a clear line: US government systems cannot be driven by tools built to serve authoritarian interests,” Moolenaar said.

If the bill passes, all government agencies will join the list of private companies and government departments that have already banned DeepSeek, such as Microsoft, the US Department of Commerce and the US Navy.

The bill would require the Federal Acquisition Security Council to create and maintain a publicly available list of AI models developed in the named “adversarial nations”. Government agencies would not be able to use or buy any of these models without an exemption from the US Congress, which would most likely be granted for research or testing.