- The Raspberry Pi AI HAT+ 2 allows the Raspberry Pi 5 to run LLMs locally

- Hailo-10H accelerator delivers 40 TOPS of INT4 inference performance

- The PCIe interface enables high-bandwidth communication between the board and the Raspberry Pi 5

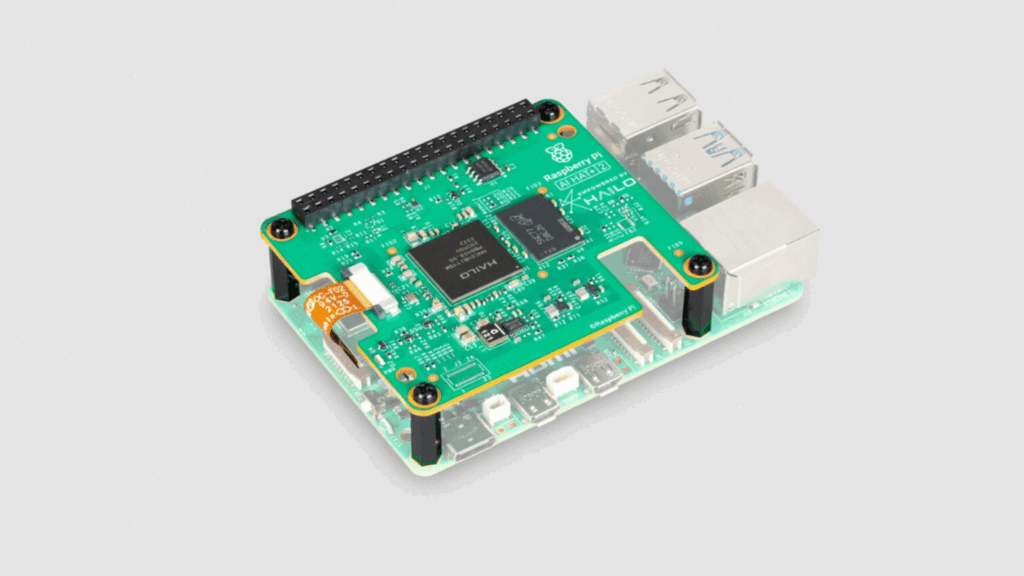

Raspberry Pi has expanded its edge computing ambitions with the release of the AI HAT+ 2, an add-on card designed to bring generative AI workloads to the Raspberry Pi 5.

Previous AI HAT hardware focused almost exclusively on computer vision acceleration, handling tasks such as object detection and scene segmentation.

The new board extends this scope by supporting large language models and vision language models that run locally without relying on cloud infrastructure or persistent network access.

Hardware changes that enable local language models

At the heart of the upgrade is the Hailo-10H neural network accelerator, which delivers 40 TOPS of INT4 inference performance.

Unlike its predecessor, the AI HAT+ 2 has 8GB of dedicated on-board memory, allowing larger models to run without consuming system RAM on the host Raspberry Pi.

The dedicated memory lets LLMs and VLMs run directly on the device, keeping latency low and data local, which is a key requirement for many edge deployments.
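As a rough back-of-the-envelope check, the snippet below estimates how much memory the weights of a 1 to 1.5 billion parameter model (the range of supported models) occupy at different precisions, compared with the 8GB on the board. It is a simplified sketch: it assumes every weight is stored at one uniform bit width and ignores activations, the KV cache, and runtime overhead. The article does not say which precision the supported models actually use on the Hailo-10H, so the 4-, 8- and 16-bit cases are illustrative assumptions.

```python
def weight_memory_gb(num_params: float, bits_per_param: int) -> float:
    """Approximate storage for model weights alone, in gigabytes (1 GB = 1e9 bytes).

    Assumes a uniform bit width per weight; real deployments also need memory
    for activations, the KV cache and the runtime, which are ignored here.
    """
    return num_params * bits_per_param / 8 / 1e9


ONBOARD_MEMORY_GB = 8  # dedicated memory on the AI HAT+ 2, per the article

# Illustrative precisions only; the article does not state which one is used.
for params in (1.0e9, 1.5e9):
    for bits in (4, 8, 16):
        gb = weight_memory_gb(params, bits)
        share = gb / ONBOARD_MEMORY_GB
        print(f"{params / 1e9:.1f}B params @ {bits}-bit: {gb:.2f} GB "
              f"({share:.0%} of the {ONBOARD_MEMORY_GB} GB on-board memory)")
```

At 4-bit, the weights of these models need roughly 0.5 to 0.75 GB, which leaves headroom within 8GB for the other buffers that inference requires.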

Using a standard Raspberry Pi distro, users can install supported models and access them through familiar interfaces such as browser-based chat tools.

The AI HAT+ 2 connects to the Raspberry Pi 5 through the GPIO header and relies on the Pi 5's PCIe interface for data transfer, so it is not compatible with the Raspberry Pi 4.

This connection supports high-bandwidth data transfer between the accelerator and the host, which is essential for moving model input, output, and camera data efficiently.

Demonstrations include text-based question answering with Qwen2, code generation using Qwen2.5-Coder, basic translation tasks, and visual scene descriptions from live camera feeds.

These workloads rely on AI tools packaged to run within the Pi software stack, including containerized backends and local inference servers.

All processing takes place on the device without external computing resources.

The supported models range from 1 billion to 1.5 billion parameters, which is modest compared with cloud-based systems operating at much larger scales.

These smaller LLMs are built for tight memory and power budgets rather than broad general-purpose knowledge.

To address this limitation, the AI HAT+ 2 supports fine-tuning methods such as Low-Rank Adaptation (LoRA), which lets developers adapt models for narrow tasks while keeping most parameters unchanged.
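The idea behind LoRA can be sketched in a few lines. In the standard formulation, a pretrained weight matrix W stays frozen and only two small matrices, B (d_out × r) and A (r × d_in), are trained; the layer output becomes W·x + (alpha / r)·B·(A·x). The sketch below is a generic, pure-Python illustration of that formulation, not Hailo's toolchain: the function names and the 1536 × 1536 layer size are hypothetical choices for demonstration.

```python
def lora_param_counts(d_out: int, d_in: int, rank: int) -> tuple[int, int]:
    """Return (frozen, trainable) parameter counts for one LoRA-adapted linear layer.

    W (d_out x d_in) stays frozen; only B (d_out x rank) and A (rank x d_in) train.
    """
    frozen = d_out * d_in
    trainable = rank * (d_out + d_in)
    return frozen, trainable


def matvec(m: list[list[float]], v: list[float]) -> list[float]:
    """Multiply matrix m (a list of rows) by vector v."""
    return [sum(a * b for a, b in zip(row, v)) for row in m]


def lora_forward(W, A, B, x, alpha: float, rank: int) -> list[float]:
    """Compute W @ x + (alpha / rank) * B @ (A @ x) without modifying W."""
    base = matvec(W, x)              # frozen pretrained path
    delta = matvec(B, matvec(A, x))  # low-rank update path: B @ (A @ x)
    scale = alpha / rank
    return [b + scale * d for b, d in zip(base, delta)]


# Hypothetical 1536 x 1536 layer adapted with rank 8.
frozen, trainable = lora_param_counts(1536, 1536, rank=8)
print(f"frozen={frozen:,} trainable={trainable:,} ({trainable / frozen:.2%})")
```

For this hypothetical layer, the trainable update is about 1% of the layer's size, which is why LoRA suits hardware with limited memory: the base weights are reused as-is and only the small A and B matrices differ per task.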

Vision models can also be retrained using application-specific datasets through Hailo’s toolchain.

The AI HAT+ 2 is available for $130, which prices it above previous vision-focused accessories while offering similar computer vision throughput.

For workloads centered solely on imaging, the upgrade offers limited gains, as its appeal rests heavily on local LLM execution and privacy-sensitive applications.

In practical terms, the hardware shows that generative AI on Raspberry Pi hardware is now possible, although limited memory headroom and small model sizes remain an issue.
