- Google DeepMind just revealed Genie 3, its latest world model

- Unlike Genie 2, this model allows for real-time interaction and delivers it all in 720p

- This means you can generate an environment, explore it, and change it on the fly

Google’s AI world model has just received a significant upgrade: the technology giant, specifically Google DeepMind, has introduced Genie 3. This is its latest AI world model, and it kicks things into the proverbial high gear by letting the user generate a 3D world at 720p quality, explore it, and feed it new prompts to interact with or change the environment.

It’s really neat, and I highly recommend that you watch the announcement video from DeepMind, which is embedded below. Genie 3 is also very different from, for example, the still-impressive Veo 3, as it offers video with audio that goes far beyond the 8-second limit. Genie 3 offers several minutes of what Google calls the ‘interaction horizon’, so you can interact with the environment in real time and make adjustments as needed.

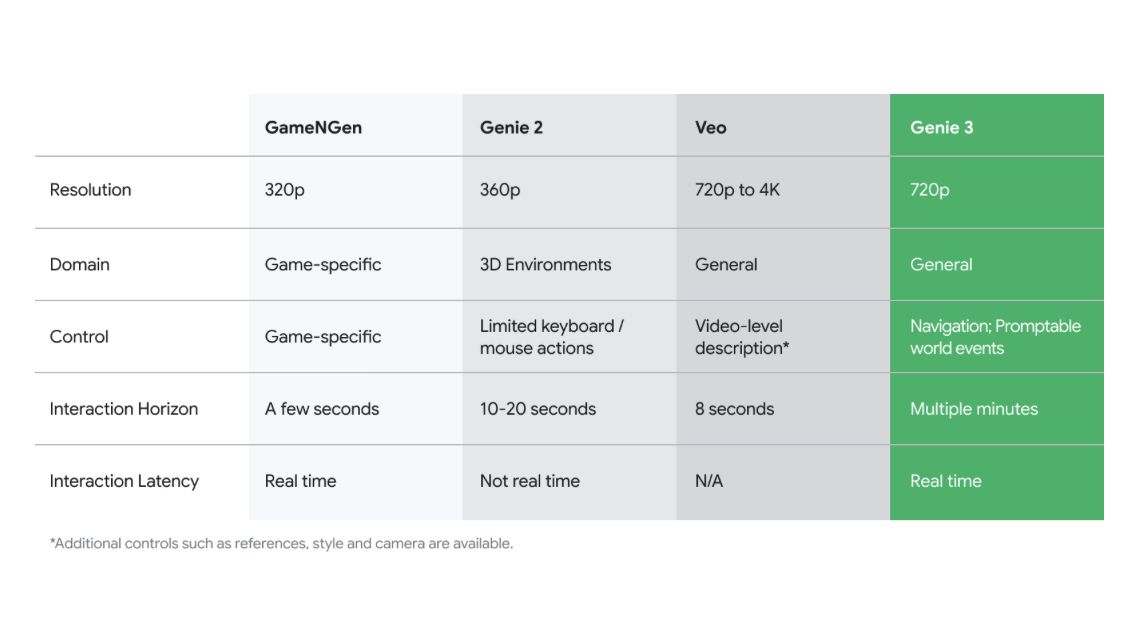

It’s kind of as if AI and VR merged: it allows you to build a world from a prompt, add new objects, and explore it all. Genie 3 appears to be an improvement over Genie 2, which was introduced at the end of 2024. In a chart shared within Google DeepMind’s post, you can see the progression from GameNGen to Genie 2 to Genie 3, and even a comparison with Veo.

Google also shared a number of demos, including a few that you can try in the blog post, and they give off choose-your-own-adventure vibes. There are a few different scenes you can try, such as a snow-covered setting or a goal you want the AI to achieve within a museum environment.


Google summarizes it as “Genie 3 is our first world model to allow real-time interaction while improving consistency and realism compared to Genie 2.” And while my mind and that of my colleague Lance Ulanoff went to interacting with this environment in a VR headset to explore a new place, or even to it being a great boon for game developers to test environments and maybe even characters, Google considers this (no surprise) a step towards AGI. That’s artificial general intelligence, and the view here from DeepMind is that it can train different AI agents in an unlimited number of deeply immersive environments within Genie 3.

Another important improvement with Genie 3 is its ability to keep objects persistent in the world. For example, we observed a set of arms and hands using a paint roller to apply blue paint to a wall. In the clip, we saw a few wide stripes of rolled blue paint on the wall, then the view turned away and back again to show the paint marks still in the right spots.

It’s neat, and it looks similar to some of the object persistence that Apple is set to achieve with visionOS 26. That, of course, is overlaid onto your real environment, so it is maybe not as impressive.

DeepMind lays out the limitations of Genie 3, noting that the world model in its current version cannot “simulate locations in the real world with perfect geographical accuracy” and that it only supports a few minutes of interaction. Genie 3’s multi-minute capacity is still a significant leap over Genie 2, but it does not allow for hours of use.

You also can’t jump into the world of Genie 3 right now. It’s available to a small set of testers. Google notes that it hopes to make Genie 3 available to other testers, but it is still working out the best way to do so. It is unclear what the interface for interacting with Genie 3 looks like at this time, but from the shared demos it is pretty clear that this is compelling tech.

Whether Google limits its use to AI research and education or explores using it to generate media, I have no doubt that we will see Genie 4 in short order … or at least an expansion of Genie 3. For now, I will go back to playing with Veo 3.