There’s no denying that Apple’s Siri digital assistant did not exactly hold a place of honor at this year’s WWDC 2025 keynote. Apple mentioned it, repeated that bringing everyone the Siri it promised a year ago was taking longer than expected, and said the full Apple Intelligence integration would arrive “in the coming year.”

Apple has since confirmed that this means 2026. So in 2025 we won’t see the kind of deep integration that would have let Siri use what it knows about you and your iOS-running iPhone to become a better digital companion. As part of the just-announced iOS 26, it won’t use app intents to understand what’s happening on screen and take action on your behalf.

I have my theories about the reason for the delay, most of which revolve around the tension between delivering a rich AI experience and Apple’s core principle of privacy. The two often seem to be at cross purposes. This, however, is guesswork. Only Apple can tell us exactly what’s going on, and now it has.

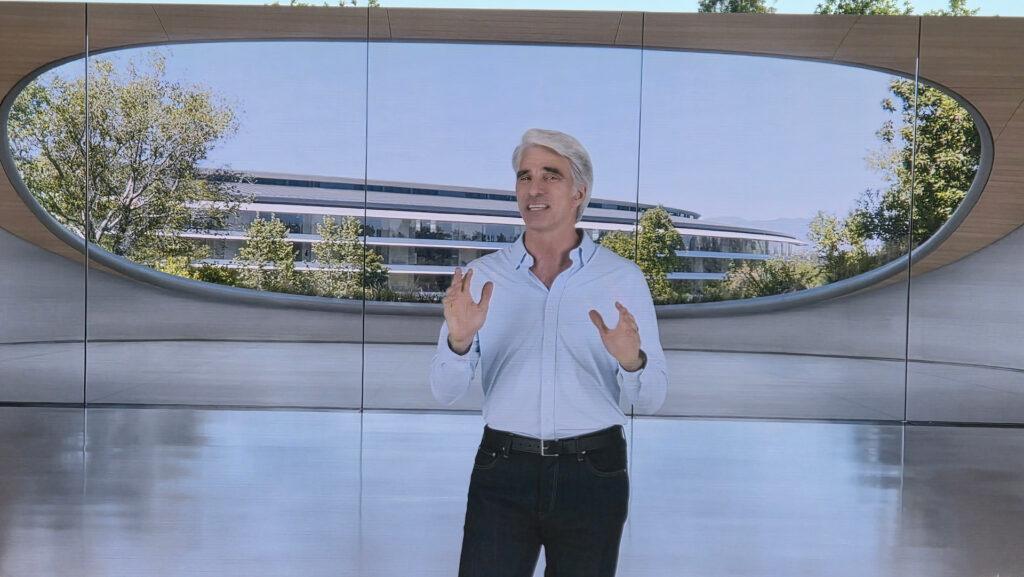

Tom’s Guide Global Editor-in-Chief Mark Spoonauer and I sat down with Apple’s Senior Vice President of Software Engineering Craig Federighi and Apple’s Global VP of Marketing Greg Joswiak for a wide-ranging podcast discussion about practically everything Apple revealed during the 90-minute keynote.

We started by asking Federighi what Apple has delivered with Apple Intelligence, what the status of Siri is, and what iPhone users can expect this year or next. Federighi was surprisingly transparent, offering a window into Apple’s strategic thinking when it comes to Apple Intelligence, Siri, and AI.

Far from nothing

Federighi started by walking through everything Apple has delivered with Apple Intelligence so far, and, to be fair, it is a significant amount.

“We were very focused on creating a broad platform for really integrated personal experiences into the OS,” recalled Federighi, referring to the original Apple Intelligence announcement at WWDC 2024.

At that time, Apple demonstrated Writing Tools, summaries, Messages, memory movies, semantic search of the Photos library, and Clean Up for photos. Apple delivered on all of these features, but even as it was building these tools, it recognized, Federighi told us, that “we could, on this foundation of large language models on device, Private Cloud Compute as a foundation for even more intelligence, [and] semantic indexing on device to retrieve knowledge, build a better Siri.”

Overcommitment?

A year ago, Apple’s confidence in its ability to build such a Siri led it to demonstrate a platform that could handle more conversational context, cope with stumbles in speech, accept typed requests, and present a distinctly redesigned user interface. Again, Apple delivered all of those things.

“We also talked about […] things like being able to invoke a broader range of actions across your device through app intents orchestrated by Siri, to let it do more things,” Federighi added. “We also talked about the ability to use personal knowledge from the semantic index, so if you ask for things like, ‘What’s the podcast that “Joz” sent me?’ we could find it, whether it’s in your messages or in your email, call it out, and maybe even act on it using those app intents.”

This is known history. Apple overpromised and underdelivered, failing to ship the Apple Intelligence Siri update it had promised for the end of 2024, and admitting in the spring of 2025 that it would not be ready any time soon. Why it happened has, until now, been something of a mystery. Apple is not in the habit of demonstrating technology or products unless it is certain it can deliver them on schedule.

However, Federighi explained in detail where things went wrong and how Apple is moving forward from here.

“We found that when we were developing this feature, we really had two phases, two versions of the ultimate architecture that we were going to create,” he explained. “Version one we had working here at the time we were getting close to the conference, and at the time we had high confidence that we could deliver it. We were thinking December, and if not, we figured by spring, so we announced it as part of WWDC, because we knew the world wanted a really complete picture of, ‘What is Apple thinking about the implications of Apple Intelligence, and where is it going?’”

A tale of two architectures

While Apple was working on a V1 of the Siri architecture, it was also working on what Federighi called V2, “a deeper end-to-end architecture that we knew we would ultimately create to get to the full set of capabilities we wanted for Siri.”

What everyone saw during WWDC 2024 were videos of that V1 architecture, and it was the basis of the work that began in earnest after the WWDC 2024 reveal, in preparation for the full Apple Intelligence Siri launch.

“We set about for months making it work better and better across more app intents, better and better for doing search,” Federighi added. “But fundamentally, we found that the limitations of the V1 architecture weren’t getting us to the quality level we knew our customers needed and expected. We realized that with the V1 architecture, you know, we could push and push and push and put in more time, but if we tried to push it out in the state it was going to be in, it would not meet our customer expectations or Apple standards, and we had to move to the V2 architecture.

“As soon as we realized that, and that was during the spring, we let the world know that we weren’t going to be able to put it out, and that we were going to keep working on really shifting to the new architecture and releasing something.”

We realized that […] if we tried to push it out in the state it was going to be in, it would not meet our customer expectations or Apple standards, and that we had to move to the V2 architecture.

Craig Federighi, Apple

This switch, however, and what Apple learned along the way, meant that Apple would not make the same mistake again by promising a new Siri by a date it could not guarantee to hit. Instead, Apple won’t “commit to a date,” Federighi explained, “until we have, in-house, the V2 architecture delivering not just in a form that we can demonstrate for you all…”

He then joked that although he actually “could” demonstrate a working V2 model, he wasn’t going to. Then he added, more seriously, “We have, you know, the V2 architecture, of course, working in-house, but we’re not yet at the point where it delivers at the quality level that I think makes it a good Apple feature, and so we’re not advertising it.”

I asked Federighi whether, by V2 architecture, he was talking about a wholesale rebuild of Siri, but he disabused me of that notion.

“I should say that the V2 architecture is not, it was not a start-over. The end-to-end part is what’s giving us much higher quality and much better results.”

A different AI strategy

Some may see Apple’s failure to deliver the full Siri on its original schedule as a strategic stumble. But Apple’s approach to AI, and to products, is also completely different from that of OpenAI or Google with Gemini. This is not about a singular product or a powerful chatbot. Siri is not necessarily the centerpiece we all imagined.

Federighi does not dispute that “AI is this transformational technology […] Everything that is growing out of this architecture will have decades-long influence across the industry and the economy, like the internet, like mobility, and it will touch Apple’s products and it will touch experiences that are well outside Apple products.”

Apple will definitely be part of this revolution, but on its own terms and in the ways most beneficial to its users, while, of course, protecting their privacy. Siri, however, was never the endgame, as Federighi explained.

AI is this transformational technology […] and it’s going to touch Apple’s products and it’s going to touch experiences that are well outside Apple products.

Craig Federighi, Apple

“When we started with Apple Intelligence, we were very clear: this wasn’t about just building a chatbot. So, seemingly, when some of these Siri capabilities I mentioned didn’t show up, people were like, ‘What happened, Apple? I thought you were going to give us your chatbot.’ That was never the goal, and it’s not our primary goal.”

So what is the goal? I think it’s fairly obvious from the WWDC 2025 keynote. Apple intends to integrate Apple Intelligence across all of its platforms. Instead of sending you to a singular app like ChatGPT for your AI needs, Apple is putting it, in a sense, everywhere. It’s done, Federighi explains, “in a way that meets you where you are, not that you go to a chat experience to get things done.”

Apple understands the lure of conversational bots. “I know a lot of people find it a really powerful way of gathering their thoughts, brainstorming […] So sure, these are good things,” says Federighi. “Are they the most important thing for Apple to develop? Well, time will tell where we go there, but that’s not the main thing we set out to do at this time.”

Check back soon for a link to the TechRadar and Tom’s Guide podcast featuring the full interview with Federighi and Joswiak.