- The GSI Gemini-I APU reduces constant data shuffling between the processor and memory systems

- Completes retrieval tasks up to 80% faster than comparable CPUs

- GSI says its Gemini-II APU can deliver around ten times higher throughput than the first generation

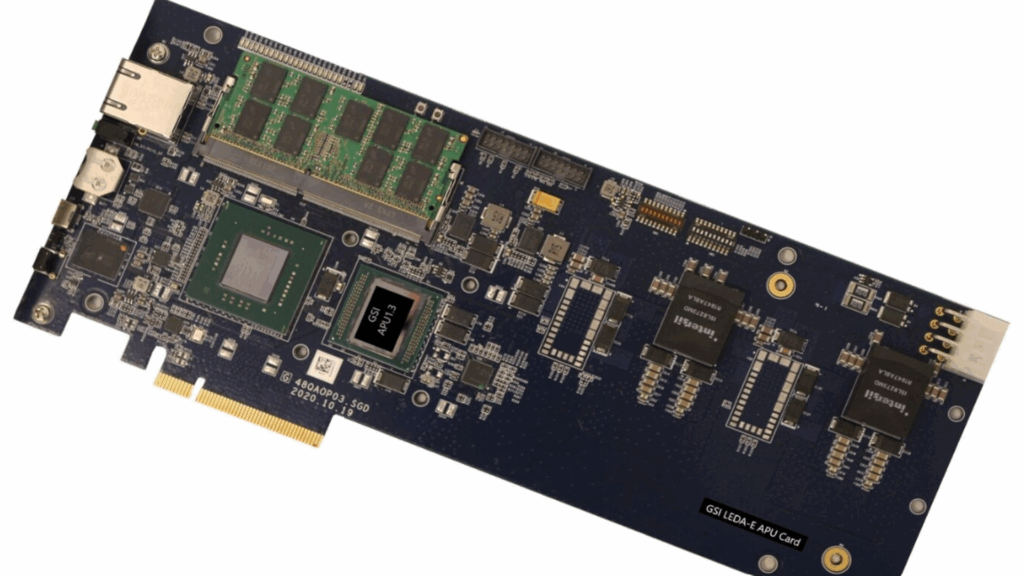

GSI Technology promotes a new approach to artificial intelligence processing that places computation directly in memory.

A new study from Cornell University draws attention to this design, known as the associative processing unit (APU).

The design aims to overcome long-standing performance and efficiency limits, and the study suggests it could challenge the dominance of the GPUs currently used in AI tools and data centers.

A new challenger in AI hardware

Published in the ACM journal and presented at the recent MICRO ’25 conference, the Cornell research evaluated GSI’s Gemini-I APU against leading CPUs and GPUs, including Nvidia’s A6000 GPU, using retrieval-augmented generation (RAG) workloads.

The tests spanned datasets from 10 to 200 GB, representing realistic AI inference conditions.

By performing calculations within static RAM, the APU reduces the constant data shuffling between the processor and memory.

That data movement is a key source of energy loss and latency in conventional GPU architectures.
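
To see why retrieval leans so heavily on memory traffic, here is a minimal sketch of the retrieval step in a RAG pipeline, assuming a brute-force dot-product search over stored embedding vectors. The function names `top_k` and `bytes_streamed` are hypothetical, and this is not the method used by GSI or the Cornell team; it only illustrates that a conventional processor must pull every stored vector out of memory for each query.

```python
import heapq


def top_k(query, corpus, k):
    """Return indices of the k corpus vectors with the highest dot product to query."""
    scores = []
    for idx, vec in enumerate(corpus):
        # Every stored vector is read from memory once per query.
        score = sum(q * v for q, v in zip(query, vec))
        scores.append((score, idx))
    # Keep the k best (score, index) pairs, highest score first.
    best = heapq.nlargest(k, scores)
    return [idx for _, idx in best]


def bytes_streamed(num_vectors, dim, bytes_per_value=4):
    """Bytes a brute-force scan reads per query (assumes 4-byte floats by default)."""
    return num_vectors * dim * bytes_per_value
```

With purely illustrative numbers (not taken from the study), one million 768-dimensional vectors stored as 4-byte floats mean `bytes_streamed(1_000_000, 768)` comes to roughly 3 GB read per query, while the arithmetic on each value is a single multiply and add. Computing inside the memory array is meant to avoid that transfer.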

The results showed that the APU could achieve GPU-class throughput while using far less power.

GSI reported that its APU used up to 98% less energy than a standard GPU and performed retrieval tasks up to 80% faster than comparable CPUs.

Such efficiency could make it appealing for edge deployments such as drones, IoT systems and robotics, as well as for defense and aerospace applications where power and cooling limits are tight.

Despite these results, it remains unclear whether compute-in-memory technology can scale to the same level of maturity and support enjoyed by top GPU platforms.

GPUs currently benefit from well-developed software ecosystems that allow seamless integration with major AI tools.

For compute-in-memory devices, optimization and programming are still emerging areas that may slow wider adoption, especially in large data center operations.

GSI Technology says it continues to refine its hardware, with the Gemini-II generation expected to deliver ten times higher throughput and lower latency.

Another design, called Plato, is under development to further extend computing performance for embedded edge systems.

“Cornell’s independent validation confirms what we have long believed: compute-in-memory has the potential to disrupt the $100 billion AI inference market,” said Lee-Lean Shu, chairman and CEO of GSI Technology.

“The APU delivers GPU-class performance at a fraction of the energy cost, thanks to its highly efficient memory-centric architecture. Our recently released second-generation APU silicon, Gemini-II, can deliver around 10x faster throughput and even lower latency for memory-intensive AI workloads.”

Via TechPowerUp
