- Pixel 9 smartphones now have access to Gemini Live's Astra capabilities

- Astra can answer questions related to what you see or what is on your device’s screen

- The powerful AI tool is free, and it arrived on Samsung S25 devices yesterday

Google Pixel 9, 9 Pro and 9a owners just got a huge free Gemini upgrade that adds impressive Astra capabilities to their smartphones.

As we reported yesterday (April 7), Gemini's visual AI capabilities have begun rolling out to Samsung S25 devices, and now the Pixel 9 series is getting the same features.

So what is Gemini Astra? Well, you can now start Gemini Live and give it access to your camera, and it can then chat about what you see and what’s on your smartphone’s screen.

Gemini Astra has been teased for a long time, and it is extremely exciting to finally get access to it via a free update.

You should see the option to access Gemini's Astra capabilities in the Gemini Live interface. If you do not have access yet, be patient, as it should be available to all Pixel 9 users in the coming days.

While I personally do not have access to a Google Pixel 9 to test Gemini Live's Astra skills, my colleague and TechRadar's senior AI editor, Graham Barlow, does.

I asked him to test Gemini Astra and give me his first impressions of the new Pixel 9 AI capabilities, and you can see what he made of it below.


Hands-on impressions of the Pixel 9's new Gemini Astra capabilities

Graham Barlow

Excited by the news of this free update, I decided to try the new Astra capabilities on my Google Pixel 9.

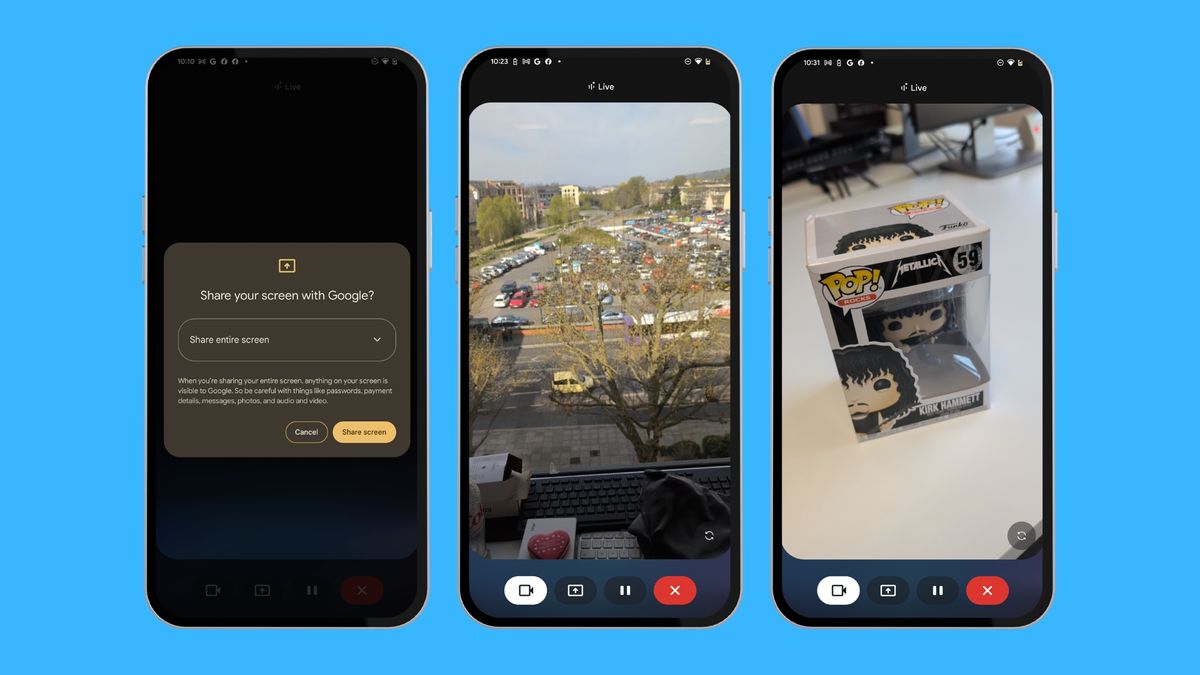

When you’re in Gemini Live, you’ll notice two new icons at the bottom of the screen: a camera icon and a screen share icon.

Tap the camera icon and Gemini switches to a camera mode that shows you a video feed of what your phone is looking at, while the Gemini Live icons remain at the bottom of the screen.

There is also a button to flip the camera, so you can get Gemini to look directly at you. I tapped it and asked Gemini what it thought of my hair, and it replied that my hair was “a nice natural brown color”. Gee, thanks Gemini!

I tested Gemini Live with a few items on my desk (a water bottle, a magazine and a laptop), all of which it identified correctly and could tell me about. I pointed the phone out of the window at a rather nondescript car park and asked Gemini which city I was in. It immediately and correctly told me it was Bath, UK, because the architecture was quite distinctive and there was a lot of greenery.

Gemini can’t use Google Search while you’re live, so for now it’s good for brainstorming, chatting, coming up with ideas or simply identifying what you’re looking at.

For example, it could chat with me about Metallica and successfully identified the Kirk Hammett Funko Pop I’ve got on my desk, but it couldn’t go online and find out how much it would cost to buy.

The screen share icon brings up a message asking you to share your screen with Google. When you select “Share screen”, it puts a small Gemini window at the top of the screen that looks like the phone call window you get when you start using your phone while on a call.

When you start interacting with your phone, the window minimizes even further into a small red timer that counts how long you have been live.

You can continue using your phone and talk to Gemini at the same time, so you can ask it, “What am I looking at?” and it will describe what’s on your phone screen, or “Where are my Bluetooth settings?” and it will tell you which parts of the Settings app you should look in.

It’s pretty impressive. One thing it can’t do, though, is interact with your phone in any way, so if you ask it to take you to the Bluetooth settings, it can’t do it, but it will tell you what to press to get there.

Overall, I am impressed with how well Gemini Live works in both of these new modes. We’ve had features like Google Lens that can use your camera like this for a while now, but having it all inside the Gemini app is far more convenient. It’s fast, it’s bug-free and it just works.