- Anthropic's Claude chatbot now has an on-demand memory feature

- The AI only recalls previous chats when a user specifically asks it to

- The feature is rolling out first to Max, Team, and Enterprise subscribers before expanding to other plans

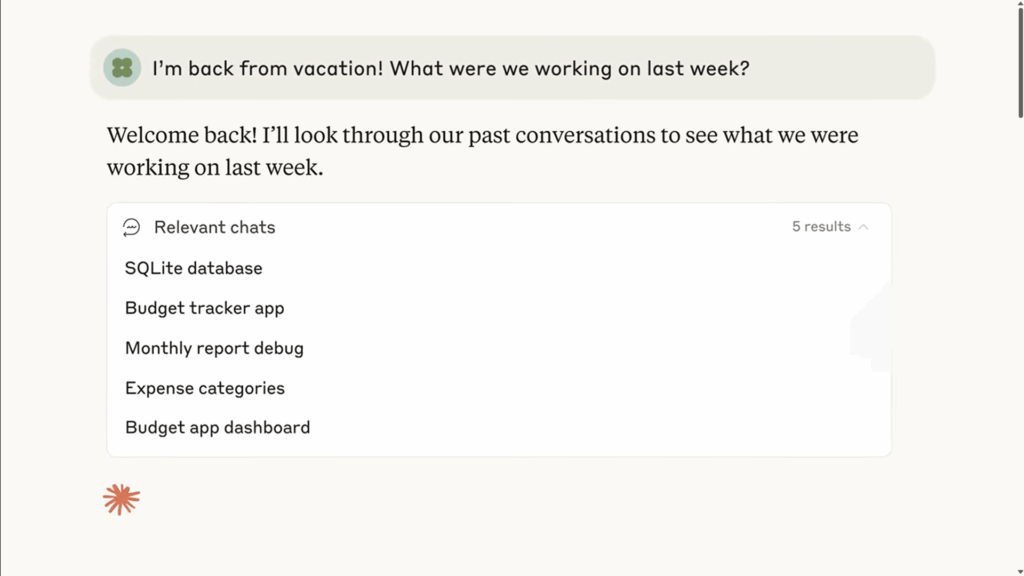

Anthropic has given Claude a memory upgrade, but it only activates when you choose to use it. The new feature lets Claude recall previous conversations, giving the chatbot the information it needs to help you continue earlier projects and apply what you have discussed before to your next conversation.

The update comes first to Claude’s Max, Team, and Enterprise subscribers, although it will likely become more widely available at some point. If you have access, you can ask Claude to search previous messages tied to your workspace or project.

But unless you explicitly ask, Claude won't look back. This means Claude keeps a generic, clean-slate personality by default, which Anthropic says is for the sake of privacy. Claude can remember your discussions if you want it to, without creeping into your conversations uninvited.

In comparison, OpenAI's ChatGPT automatically retains past chats unless you opt out, and uses them to shape its future responses. Google Gemini goes even further, drawing on your conversations with the AI as well as your search history and Google account data, at least if you let it. Claude's approach does not pick up the breadcrumbs of previous conversations unless you ask it to.

Claude remembers

Adding memory may not seem like a big deal. Still, if you have ever tried to restart a project after a break of days or weeks without a helpful assistant, digital or otherwise, you will feel the effect right away. Making it an opt-in choice is a nice touch that accommodates how comfortable people currently are with AI.

Many people may want AI help without handing control over to chatbots that never forget. Claude sidesteps that tension neatly by making memory something you consciously opt into.

But it’s not magic. Since Claude does not retain a personal profile, it won't proactively remind you to prepare for events mentioned in other chats, or anticipate a shift in style when you write to a colleague rather than draft a public business presentation, unless you ask mid-conversation.

Furthermore, if there are problems with this approach to memory, Anthropic's rollout strategy will let the company correct any errors before the feature becomes available to all Claude users. It will also be worth watching whether the long-term context building of ChatGPT and Gemini proves more appealing or more off-putting to users than Claude's approach of making memory an on-demand part of using the chatbot.

And that assumes it works perfectly. Retrieval depends on Claude's ability to surface the right excerpts, not just the most recent or longest chat. If the summary is vague or the context is wrong, you may end up more confused than before. And while the friction of having to ask Claude to use its memory is meant to be an advantage, it still means you have to remember that the feature exists, which some may find annoying. Still, if Anthropic is right, a small boundary is a feature, not a limitation, and users will be glad that Claude remembers what they ask it to, and nothing else.