- AI chatbots and videos use a huge amount of energy and water

- A five-second AI video uses as much energy as a microwave running for an hour or more

- Data center energy use has doubled since 2017, and AI will account for half of it by 2028

It only takes a few minutes in a microwave to explode a potato you haven't ventilated, but for an AI model to make a five-second video of a potato exploding, it takes as much energy as running that microwave for over an hour, enough for more than a dozen potato explosions.

A new study from MIT Technology Review has laid out just how hungry AI models are for energy. A basic chatbot response might use as little as 114 or as much as 6,700 joules, the equivalent of running a standard microwave for anywhere from about a tenth of a second to eight seconds. It's when things become multimodal that the energy costs skyrocket: a single five-second video takes 3.4 million joules, or more than an hour in the microwave.

It's no revelation that AI is energy-intensive, but MIT's work lays out the math in stark terms. The researchers devised what might be a typical session with an AI chatbot, in which you ask 15 questions, request 10 AI-generated images, and throw in requests for three different five-second videos.

You can watch a realistic fantasy movie scene that appears to have been filmed in your backyard a minute after asking for it, but you won't notice the enormous amount of electricity you demanded to produce it. You've requested approximately 2.9 kilowatt-hours, or three and a half hours of microwave time.
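The microwave comparisons above are simple unit conversions, and a few lines of Python make them explicit. This is a sketch under one loud assumption: the article never states a microwave wattage, so `MICROWAVE_WATTS = 800` is a hypothetical figure, picked because it is roughly what "eight seconds for 6,700 joules" implies. The per-video and per-reply joule values and the 2.9 kWh session total are the study's numbers as quoted here. Images are left out because no per-image figure is given. All variable and function names are illustrative.

```python
# ASSUMPTION: a ~800 W microwave. The article gives no wattage; 800 W is
# roughly what "6,700 joules = eight seconds" implies.
MICROWAVE_WATTS = 800

JOULES_PER_KWH = 3_600_000  # 1 kWh = 1,000 W * 3,600 s


def microwave_minutes(joules: float, watts: float = MICROWAVE_WATTS) -> float:
    """Minutes a microwave drawing `watts` would take to use `joules`."""
    return joules / watts / 60


# Figures quoted from the study in the article.
VIDEO_JOULES = 3_400_000   # one five-second video
CHAT_MAX_JOULES = 6_700    # top end of the range for one chatbot reply
SESSION_KWH = 2.9          # the whole hypothetical session

videos = 3 * VIDEO_JOULES               # three videos in the session
chats = 15 * CHAT_MAX_JOULES            # 15 questions, worst case
session = SESSION_KWH * JOULES_PER_KWH  # session total in joules

print(f"one video: {microwave_minutes(VIDEO_JOULES):.0f} min of microwave time")
print(f"three videos: {videos / JOULES_PER_KWH:.2f} kWh")
print(f"15 chat replies: {chats / JOULES_PER_KWH:.3f} kWh")
print(f"session: {microwave_minutes(session) / 60:.1f} h of microwave time")
print(f"videos' share of the session: {videos / session:.0%}")
```

Under these assumptions, one video works out to about 71 minutes of microwave time, and the three videos alone come to about 2.83 kWh, roughly 98% of the 2.9 kWh session. Even at the top of the quoted range, the 15 chatbot replies add only about 0.028 kWh.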

What makes AI's costs stand out is how painless they feel from the user's perspective. You don't budget your AI messages the way we all budgeted our text messages 20 years ago.

Rethinking AI's energy use

Of course, you aren't mining Bitcoin, and your video has at least some real-world value, but that's a really low bar to clear when it comes to ethical energy consumption. The rise in energy demand from data centers is also happening at a ridiculous pace.

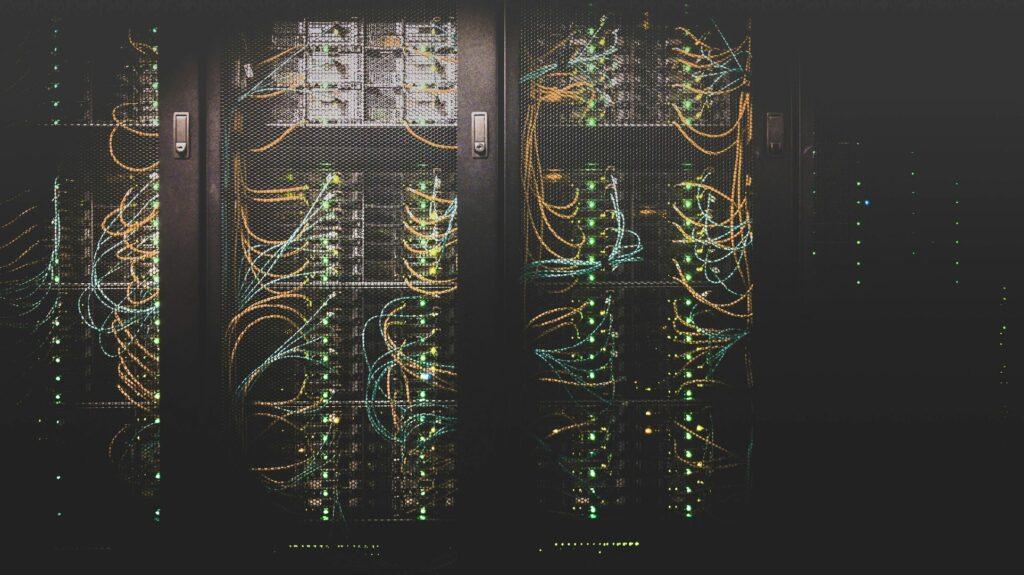

Thanks to efficiency gains, data centers' energy consumption had plateaued before the recent AI explosion. Since 2017, however, the energy consumed by data centers has doubled, and about half of it will go to AI by 2028, according to the report.

This isn't a guilt trip, by the way. I can cite professional reasons for some of my AI use, but I've also used it for all kinds of recreational fun and to help with personal tasks. I'd write an apology note to the people working at data centers, but I'd need AI to translate it into the languages spoken where some of those data centers are located. And I don't want to sound heated, or at least not as heated as those same servers get. Some of the largest data centers use millions of liters of water daily to stay frosty.

The developers behind AI infrastructure understand what's happening. Some are trying to buy cleaner energy. Microsoft is looking to strike deals with nuclear power plants. AI may or may not be integral to our future, but I'd like that future not to be full of extension cords and boiling rivers.

On an individual level, your use or avoidance of AI won't make much of a difference, but pushing data center owners toward better energy solutions could. The most optimistic outcome is the development of more energy-efficient chips, better cooling systems, and greener energy sources. And maybe AI's carbon footprint should be discussed like that of any other infrastructure, such as transport or food systems. If we're willing to debate the sustainability of almond milk, we can surely spare a thought for the 3.4 million joules it takes to make a five-second video of a dancing cartoon almond.

As tools like ChatGPT, Gemini, and Claude become smarter, faster, and more embedded in our lives, the pressure on energy infrastructure will only grow. If that growth happens without planning, we'll be trying to cool a supercomputer with a paper fan while chewing on a raw potato.