- Grok chats shared by users have been indexed by Google

- The interactions, no matter how private, became searchable by anyone online

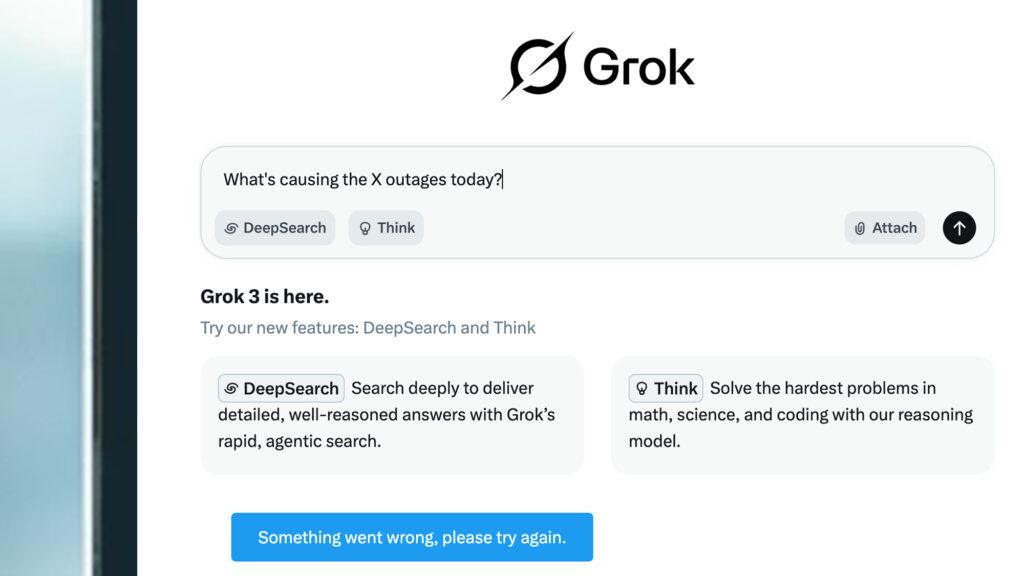

- The problem arose because Grok’s Share button did not add noindex tags to prevent discovery by search engines

If you have spent time talking with Grok, your conversations may be visible with a simple Google search, as first revealed in a report from Forbes. More than 370,000 Grok chats were indexed and made searchable on Google without users’ knowledge or permission after they used Grok’s Share button.

The unique URL created by the button did not mark the page as something for Google to ignore, making it publicly visible with a little effort.
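To make the mechanism concrete, here is a minimal sketch of how a page can tell search engines to skip it, and how you might check for that signal. It relies on the standard `noindex` directive, which can be sent either as a `<meta name="robots">` tag or as an `X-Robots-Tag` HTTP header. The function name `is_noindexed` and the sample pages are hypothetical illustrations, not Grok’s actual markup or code.

```python
from html.parser import HTMLParser


class RobotsMetaParser(HTMLParser):
    """Collects directives from <meta name="robots"> style tags."""

    def __init__(self):
        super().__init__()
        self.directives = set()

    def handle_starttag(self, tag, attrs):
        if tag != "meta":
            return
        attr_map = {name.lower(): (value or "") for name, value in attrs}
        # "robots" addresses all crawlers; "googlebot" addresses Google only.
        if attr_map.get("name", "").lower() in {"robots", "googlebot"}:
            for directive in attr_map.get("content", "").split(","):
                self.directives.add(directive.strip().lower())


def is_noindexed(html, headers=None):
    """Return True if the page asks search engines not to index it.

    Hypothetical helper: checks the X-Robots-Tag header first,
    then any robots meta tags found in the HTML.
    """
    for name, value in (headers or {}).items():
        if name.lower() == "x-robots-tag" and "noindex" in value.lower():
            return True
    parser = RobotsMetaParser()
    parser.feed(html)
    # "none" is shorthand for "noindex, nofollow".
    return "noindex" in parser.directives or "none" in parser.directives


# Made-up sample pages: one without the tag, one with it.
shared_page = "<html><head><title>Shared chat</title></head></html>"
protected_page = '<html><head><meta name="robots" content="noindex, nofollow"></head></html>'

print(is_noindexed(shared_page))     # False
print(is_noindexed(protected_page))  # True
```

A shared page that behaves like the first sample gives crawlers no reason to leave it out of search results, which is the gap described above.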

Passwords, private health issues and relationship drama fill the conversations that are now publicly available. Even more troubling, questions about getting drugs and planning murders appear as well. Grok transcripts are technically anonymized, but if they contain identifiers, people could work out who was raising the petty complaints or criminal schemes. These are not exactly the kind of topics you want tied to your name.

Unlike a screenshot or a private message, these links have no built-in expiration or access control. Once they are live, they are live. It is more than a technical glitch; it makes it hard to trust AI. If people use AI chatbots as ersatz therapy or for romantic role-playing, they do not want those conversations to leak. Finding your deepest thoughts alongside recipe blogs in search results may drive you away from the technology forever.

No privacy with AI chats

So how do you protect yourself? First, stop using the “Share” feature unless you are completely comfortable with the conversation becoming public. If you have already shared a chat and regret it, try to find the link again and request its removal from Google using the company’s content removal tool. But it is a cumbersome process, and there is no guarantee that the link will disappear immediately.

If you talk to Grok through the X platform, you should also adjust your privacy settings. If you stop your posts from being used to train the model, you may have more protection. That is less certain, but the rush to deploy AI products has left many privacy protections less clear than you might think.

If this problem sounds familiar, it’s because it’s only the latest example of AI chatbot platforms fumbling users’ privacy while encouraging people to share their conversations. OpenAI recently had to walk back an “experiment” in which shared ChatGPT conversations began to show up in Google results. Meta faced its own backlash this summer when people found that their discussions with the Meta AI chatbot could appear in the app’s Discover feed.

Conversations with chatbots can read more like diary entries than social media posts. And if an app’s default behavior turns them into searchable content, users will push back, at least until the next time. As with Gmail scanning your inbox for ads or Facebook apps scraping your friends list, the impulse is always to apologize after a privacy violation.

The best case is that Grok and others patch this quickly. But AI chatbot users should probably assume that anything they share could eventually be read by someone else. As with so many other supposedly private digital spaces, there are many more holes than anyone can see. And maybe don’t treat Grok like a reliable therapist.